Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

⚖️ Is this Big Tech’s Big Tobacco moment? A landmark social media addiction trial is happening right now in Los Angeles. The trial centers on a 20-year-old woman who alleges that endless scrolling and other design features worsened her depression and suicidal thoughts. Snap and TikTok settled before the trial; Meta and YouTube are fighting the claims. Some observers are calling this Big Tech’s Big Tobacco moment, a reference to the tobacco litigation of the ’90s that exposed internal documents, led to warning labels, and reshaped public health policy.

Meta CEO Mark Zuckerberg and Instagram chief Adam Mosseri have testified so far. Internal documents shown in court suggest that Meta knew children younger than the minimum age were using its apps, that the company prioritized maximizing time spent scrolling, and that safety recommendations from experts were sometimes disregarded. Meta disputes the characterization, arguing the documents are cherry-picked and outdated.

What’s striking is that Meta’s own internal research found that parental supervision tools did not meaningfully curb teens’ compulsive use. Even when parents use the tools the platforms provide, behaviors don’t significantly change — a finding that reinforces something we’ve talked about often: screen time limits and parental controls are not set-it-and-forget-it solutions.

They’re tools. Helpful and necessary ones. But tools alone don’t teach judgment, emotional regulation, or resilience.

The timing of the trial is especially notable. The day after Adam Mosseri testified that heavy social media use may be “problematic” but not clinically addictive, a new longitudinal study published in Nature found that teens who struggled to describe their feelings, or who tended to avoid unpleasant emotions, were more vulnerable to developing social media addiction over time.

What does it all mean? This trial is ongoing. Researchers and lawmakers around the world are increasingly worried about compulsive use. Hundreds of families and school districts are suing major platforms. And more bellwether cases are coming. If juries consistently find that addictive design harmed minors, the financial and regulatory consequences could be enormous.

For parents, this is a reminder that:

We designed BrightCanary to help parents stay involved in and curious about their children’s digital lives. Because technology safety is a skill, not a setting.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Believe it or not, we’re about halfway through the academic year. This is a great time to zoom out and reset goals — both academic and personal. These conversation-starters help teens connect their daily habits to their bigger ambitions.

🧍‍♀️ What is the internet like for a 15-year-old girl? In this evocative essay, an anonymous teen describes being inundated with misogyny online. (Language warning.) It’s a sobering reminder that algorithms don’t just show content; they shape culture.

🧸 The villain of Toy Story 5 is … tablets. Pixar’s most nostalgic franchise is confronting “iPad kid” culture head-on. The new trailer shows Woody, Buzz, and the gang competing with iPads for kids’ attention. Art imitates life, after all. What do you think about the trailer?

👾 Discord is rolling out age verification for users. What does it mean, and why is your teen so upset about it? We explain.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

🎰 Gambling is becoming alarmingly common among boys: A new report from Common Sense Media is a wake-up call for parents. It found that 36% of boys ages 11–17 gambled in the past year. And we aren’t talking about slots or poker: the report looked at sports betting apps, loot boxes, skin cases, gacha-style rewards inside video games, and social media feeds that normalize betting. Nearly one in four boys have engaged in gaming-related gambling, and most spent real money doing it. Some stats:

While many boys describe gambling as “low-stakes” or just part of bonding with friends or family (one-third have gambled with family members), 27% of boys who gamble report negative effects like stress or conflict. The report also highlights a major loophole: while gambling is illegal for minors, in-game gambling mechanics often aren’t regulated the same way, making it easy for kids to spend (or lose) real money.

What parents can do: Start conversations early, recognize that gambling comes in many forms, set clear rules around spending and games, monitor influences (friends, online activity, and games), and watch for warning signs like secrecy or emotional changes.

🤖 The risks of AI in schools may outweigh the benefits: A new study from the Brookings Institution suggests that while AI tools are being rapidly adopted in classrooms, the risks currently outweigh the benefits — especially for kids’ cognitive and social development. Researchers warn of a “doom loop” where students offload thinking to AI, weakening problem-solving and learning skills over time. There are also concerns about kids developing social and emotional habits through chatbots designed to agree with them, making real-world disagreement and collaboration harder.

UNICEF recommends that parents talk to kids early about what AI is, warn against sharing personal information with AI tools, watch for signs of overuse or behavioral changes, and stay involved in how AI is used for school and beyond. Not sure where to start? Check out our free AI safety toolkit for parents (plus a free code for BrightCanary — send it to another parent!).

📵 Why screen time limits alone aren’t enough anymore: The American Academy of Pediatrics says it’s time to rethink how we manage kids’ screen use. New guidance emphasizes that time limits alone don’t address the real issue: digital platforms are intentionally designed to keep kids engaged through autoplay, notifications, and algorithmic feeds.

Screen time doesn’t tell the whole story anymore. Instead of rigid rules, parents are encouraged to focus on how screens are used, what content kids are engaging with, and how digital life affects sleep, learning, and mental health. Think less stopwatch, more strategy. BrightCanary is designed to help parents stay informed about their child’s activity across all the apps they use — so you know not only what apps your kiddo is using, but also what they encounter. Here’s how to start monitoring (without breaking trust).

Many kids don’t think they’re gambling … even when they absolutely are. Your goal is to help kids recognize risks before habits form. These conversation-starters can help you open the door without judgment:

📱 TikTok gets an American makeover: TikTok officially has US-based owners. So, the app isn’t going anywhere — but the experience won’t stay the same. Experts say changes will likely show up first in moderation and data practices, not features. If your child uses TikTok, use the Family Pairing feature to set guardrails around their use.

🧹 YouTube takes down major AI slop channels: Following a report showing massive growth in low-quality AI-generated content, YouTube appears to have removed several top “AI slop” channels with millions of subscribers.

🪪 Discord rolls out global age verification: Starting next month, Discord will require face scans or ID for full access. Accounts default to a teen-safe experience unless verified as adult — with stricter filters and protections baked in.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

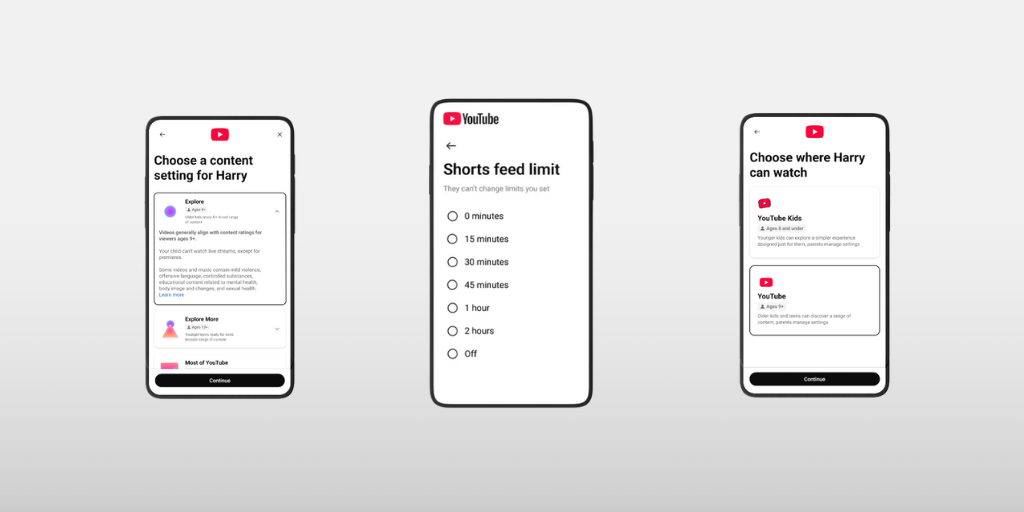

📵 Parental controls are coming to YouTube Shorts: If YouTube Shorts have quietly taken over your teen’s free time, this update’s for you. YouTube just announced new parental controls that let parents set limits on how much time teens spend scrolling short-form videos, calling it an “industry-first feature” that puts parents firmly in control. Parents of supervised teen accounts will soon be able to set daily Shorts limits (yes, even zero), customize limits by situation (homework vs. road trips), and add Bedtime or Take a Break reminders to help teens build healthier habits.

YouTube is also rolling out new quality standards for teen content, developed with child development experts. These standards aim to promote more age-appropriate, enriching videos (like Khan Academy, CrashCourse, and TED-Ed) while reducing “brain rot” content in teen feeds. Our take: Major companies like Google and Meta have shown that their parental control settings aren’t foolproof, and it’s still possible for teens to get around age verification. Parents need to stay informed about not only when their kids are watching short-form videos, but also what they’re watching. Here’s how to set parental controls on YouTube.

👻 Snapchat announces expanded parental controls in Family Center: It’s so interesting that so many major platforms are releasing common-sense parental controls, all around the same time, while they’re under intense legal scrutiny and ongoing lawsuits. Anyway! Snapchat’s new parental controls allow parents to see how much time their teen spends on Snapchat, including how that time breaks down across different features (like sending Snaps, exploring the Snap Map, or watching content on Spotlight and Stories). Parents can also see how their teen might know the new friends they add. However, you can’t view anything about what they’re sending (you need BrightCanary for that). If your teen uses Snapchat, here’s how to set up Family Center and make the most of those parental controls.

🎮 Can video games actually be good for kids’ brains? New research suggests that certain types of video games, especially action games, may help sharpen attention, learning, and cognitive flexibility, particularly when played in short, moderate sessions. Experts emphasize that most benefits showed up with 30–60 minute sessions, not marathon gaming. Gaming works best as one layer of a healthy life, alongside physical activity, creativity, sleep, and real-world socializing. So, no, this isn’t permission for your kid to play Minecraft overnight for their brain health. Video games aren’t necessarily the enemy, but it’s still a good idea to understand what your child is playing, how often they play, and what interactions they have online. Not sure where to start? Check out our guide to parental controls on Roblox.

🍽️ Screens are hurting kids’ conversation skills, but there’s an easy fix: Recent research shows that the use of phones negatively impacts our in-person interactions, and that’s a problem for kids. Constant phone use is directly eroding kids’ ability to hold face-to-face conversations. Kids in screen-saturated households struggle more with reading nonverbal cues, activate fewer mirror neurons (linked to empathy), and feel anxious about real, unedited conversations. The good news is that you don’t need a full digital detox to help. One of the simplest interventions: device-free family meals to model healthy conversations at home.

At some point, your child will come across something online that’s upsetting: a violent video, graphic news footage, or content they weren’t emotionally prepared to see. How you respond matters more than the content itself. These conversation-starters can help you keep the door open without making things scarier or shutting them down:

🏛️ It’s falling on states to regulate the platforms that put kids most at risk, and New York is stepping up to the challenge. Gov. Kathy Hochul announced a sweeping plan to expand online parental controls and age verification, encompassing online platforms like Roblox. The proposed protections include setting kids’ accounts to the highest privacy settings by default and disabling AI chatbot features for kids.

🔒 Google is changing its policy for teen accounts so that parental controls are no longer automatically removed at 13: Under the company’s planned policy update, any supervised minor will need parental approval before they can turn off supervision.

🎨 The ultimate list of 102 screen-free activities for kids (and adults) of all ages.

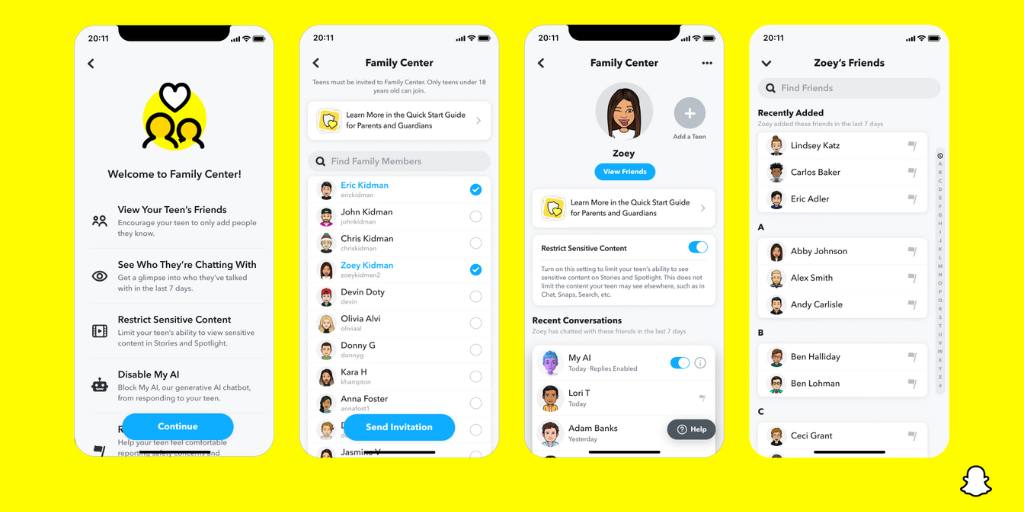

Did you know that Snapchat has free parental control features within the app? If you didn’t know, you’re not alone. Although 20 million teenagers use Snapchat in the United States, only around 200,000 parents use Snapchat Family Center to supervise their accounts. If you’re wondering how to use Snapchat Family Center, this guide explains what parental controls Snapchat offers, how to set up Family Center, and how to use it effectively to keep your teen safe.

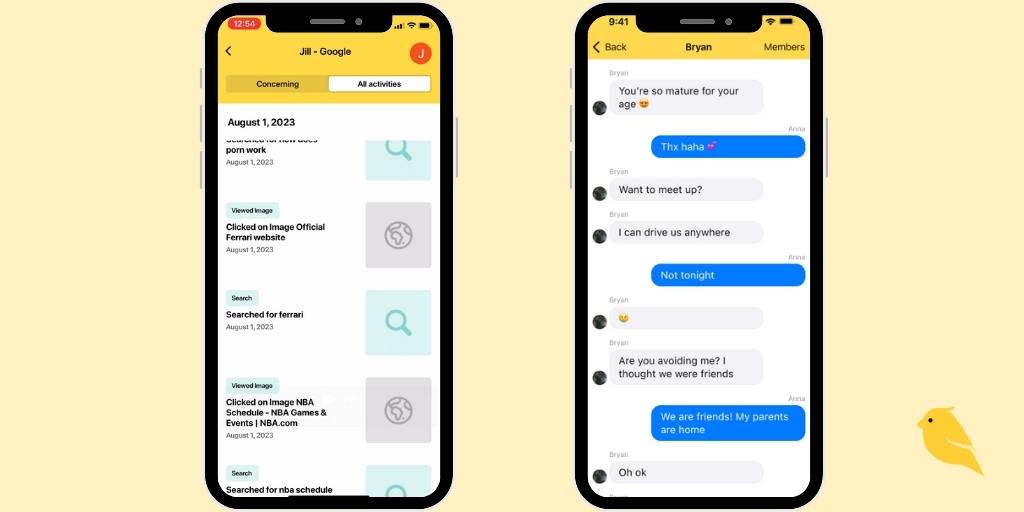

Snapchat Family Center helps parents keep tabs on their child’s contacts and conversations, as well as how much time they spend on the app. Parents can see who their child is communicating with and how frequently, but it doesn’t reveal the content of their messages.

Family Center also allows parents to restrict sensitive content in Snapchat’s Stories and Spotlight sections. Because other users and publishers post to these sections, they can expose kids to inappropriate material.

This suite of Snapchat parental controls also makes it easy to report suspicious accounts or concerning behaviors directly through the app. If your child connects with someone unfamiliar or if any interactions raise red flags, you can immediately take action.

In the Family Center, parents can see:

It’s important to note that Family Center doesn’t provide access to your child’s Snaps. The tool is designed for awareness, not for monitoring the content of their messages for topics like explicit content or drug references.

It’s free and simple to set up Snapchat Family Center. First, you’ll need a Snapchat account. Then, follow these steps:

That’s it! Once your teen accepts the invitation, you’ll be able to see their most recent interactions and set content limits.

Snapchat Family Center doesn’t allow parents to see the content of their child’s messages. Parents who want more visibility can use child safety apps. The benefit of these third-party apps is that they allow parents to monitor messages for red flags, such as conversations about self-harm or drug use. The downside is that most of these apps don’t work with Snapchat on Apple devices ... except BrightCanary.

BrightCanary is a child safety app that allows parents to monitor what their kids message on Snapchat, Instagram, TikTok, and every other social media app they use. Download it today and try it for free.

Even the best parental control features don’t work if your child doesn’t agree to them. If your child pushes back on using Snapchat Family Center, start by having an open and honest conversation about your concerns.

Explain that you’re not trying to spy on them. Instead, you’re trying to ensure they’re interacting safely and responsibly online. Talk about some of the risks associated with Snapchat, like getting added to group chats with strangers, seeing inappropriate content on their Spotlight feed, or even getting approached by drug dealers, who regularly use Snapchat and other social media platforms to sell illicit substances to minors.

You can also approach this conversation as a team effort. Ask your child why they feel uncomfortable using parental controls, and try to understand their perspective. Acknowledge their need for independence, and then set some ground rules together.

For instance, you might position Family Center as a requirement for device use; if they want their own phone and Snapchat account, they need to agree to parental supervision. Maybe you all agree to use Family Center for a trial period, then revisit the conversation in a few months.

Snapchat’s Family Center is a step in the right direction for promoting safe digital habits for teens. While it doesn’t offer access to message content, it provides valuable insights into who your child is messaging. Most importantly, maintain open communication with your child about safe social media use.

To monitor what your child says to the people they message on Snapchat, you need BrightCanary. The app offers the most comprehensive monitoring across all apps on Apple devices, including Snapchat, text messages, and more. Try it for free today.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

🤖 More than 20% of YouTube Shorts shown to new users are AI slop: If your child’s YouTube feed feels … weird lately, you’re not imagining it. A new report from video editing firm Kapwing found that 21% of the first 500 YouTube Shorts shown to a brand-new account were AI-generated, and 33% qualified as “brain rot” content: hyper-stimulating, low-effort videos designed to farm views rather than inform or entertain. And it’s not just Shorts: The Guardian reports that nearly 1 in 10 of the fastest-growing YouTube channels globally now post only AI-generated videos.

Algorithms don’t care if content is junk — they care if it keeps kids watching. This is a good moment to talk with your child about how algorithmic recommendation systems work, why “popular” doesn’t always mean “good,” and how to recognize content that’s meant to hook, not help.

🤝 Teens prefer AI chatbots that feel like “best friends” — and that’s a red flag: New research raises concerns about AI chatbots designed to sound like emotionally supportive humans. Researchers found that most adolescents prefer AI that communicates like a “best friend,” rather than systems that make it clear they’re not human. Teens who preferred their AI BFF reported higher stress and anxiety, and lower-quality relationships with family and peers — indicating that they may be more emotionally vulnerable when it comes to befriending AI.

The authors argue that clear boundaries, repeated reminders that AI isn’t human, and stronger AI literacy should be treated as core safety features, not optional add-ons. If your child uses AI chatbots like ChatGPT or Polybuzz, reinforce that they shouldn’t replace real relationships or emotional support.

📱 Kids are spending over an hour of the school day on their phones: According to new research published in JAMA, American teens ages 13–18 spend an average of 70 minutes of the school day on their phones, mostly on social media apps like TikTok, Instagram, and Snapchat. They also spend an average of nearly 15 minutes each day on gaming apps and almost 15 minutes on video apps such as YouTube, all during school hours.

If your school district isn’t among the growing number of districts banning phones, experts recommend keeping phones out of reach during class time, such as in lockers or pouches. At the very least, have your child turn off their phone when they get to school, or use Apple Screen Time’s Downtime setting to limit access during school hours. The goal isn’t punishment. You’re helping kids protect their attention while they’re still learning how.

Use these conversation-starters to spark meaningful discussions this week about attention and connection:

✍️ TikTok signed a deal creating a new U.S.-based joint venture backed by Oracle and other American investors. It’s still unclear whether U.S. users will need to migrate to a new app, but ByteDance says it won’t control U.S. user data or the algorithm.

📵 We’re one month into Australia’s social media ban for kids under 16. Some teens report feeling “free” and more present, while others quickly found workarounds using fake birthdays or switched to messaging apps like WhatsApp and Discord, the BBC reports.

💬 Character.AI and Google have agreed to settle lawsuits brought by families of teens who harmed themselves after interacting with AI chatbots. Character.AI has since banned users under 18 from open-ended chats.

📍 A Texas father used phone-based parental controls to track and help rescue his 15-year-old daughter after she was kidnapped. It’s a sobering reminder that safety tools matter — particularly in situations where time is of the essence.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

📊 Teens, social media, and AI: Pew Research Center recently released a new report on how teens are using social media and online platforms. Social media usage hasn’t shifted much — among teens ages 13–17, 92% report using YouTube, 68% use TikTok, 63% use Instagram, and 55% use Snapchat. But 21% of teens now say they use TikTok “almost constantly,” up from 16% in 2022.

This is also the first year Pew asked teens about AI chatbots. Survey says:

Earlier this year, Common Sense Media also reported that three in four teens use AI for companionship, including emotional and mental health support — but most popular chatbots fail to respond to red flags, like anxiety, depression, and eating disorders.

It’s clear that AI is an ingrained part of how kids experience digital spaces today. Parents need to have explicit conversations with kids about AI safety. Learn more about this topic, and how to protect your teen from the risks, with our guide to AI companions.

🐤 BrightCanary’s Year in Review: As we settle into the last few hours of 2025, we wanted to take a look in the rearview mirror. Here’s a snapshot of what happened at BrightCanary this year:

And more. Thank you for trusting us with something as important as your child’s digital safety. Here’s to an even better 2026!

📱 If your kid got a new device for the holidays, start here: Did they find an iPhone under the Christmas tree? Now’s the time to lay some ground rules and make sure your kids are set up for success (and safety). Here are a few guides for setting up parental monitoring:

If they got a new iPhone or iPad:

If they got an Apple Watch: How to monitor text messages on Apple Watch

These guides walk you through setup and the conversations that matter just as much as the parental control settings.

As we head into 2026, many families are rethinking how phones and screens fit into everyday life. Instead of aiming for a total reset, try starting with a few honest conversations. Use these prompts to kick things off:

⚠️ New York Gov. Kathy Hochul signed a bill requiring warning labels on social media for younger users — a move that signals growing pressure on platforms to change default designs.

🎊 Do you have any parenting resolutions for 2026? Some of the ones we’ve heard around the Slack watercooler: trying one new screen-free hobby per month, being more present, and charging phones outside the bedroom at night.

It’s challenging to be a kid in today’s digital world. As many as 59% of teens have experienced cyberbullying, and 25% of teens think social media has a negative influence on people their age. Parental monitoring apps can help parents stay involved, protect their kids from online risks, and teach their kids how to use the internet safely. A majority of US teens use iPhones, which means it’s important to find the best parental monitoring app for iPhone that balances safety and independence. Here are a few of the best options for parents in 2025.

The benefit of a parental monitoring app is that it gives you a convenient and personalized way to learn more about your child’s online activities.

“Parental monitoring” is a little different from another term you may have heard, “parental controls.” We define parental controls as tools and settings that allow parents to set firm guardrails around their child’s internet use and access, such as screen time limits and website blocking. Parental monitoring refers to tools that allow parents to supervise what their kids see and send online.

To that end, the best iPhone monitoring apps for parents should give parents visibility into the apps their kids use the most.

Aura is an all-in-one platform that protects against identity theft and online threats. The service offers tiers based on your needs — the highest tier covers your entire family with identity, fraud, and child protection, while the lowest tier solely offers parental controls and child safety features.

Aura is primarily an identity theft and fraud protection platform, but it also allows parents to set website limits and restrictions, including for popular platforms like YouTube and Discord. You can also set screen time limits, view reports on your child’s internet activity, and even temporarily disable internet access, which is helpful if your child tends to scroll after dark. Aura is unique among parental monitoring apps for iPhone because it monitors online PC video games, alerting parents to threats like cyberbullying, scams, and predators.

Aura requires that you install an app on your child’s phone, and it doesn’t offer comprehensive parental monitoring across popular apps. For example, you can restrict Instagram, but you won’t get content alerts if your child gets a stranger in their direct messages. Aura also doesn’t monitor text messages on iPhone. Aura is best-suited for families who want a digital security solution that also monitors their child’s PC gaming.

Apple Screen Time is a free and robust suite of parental controls that are already loaded onto iOS. If you and your child have Apple devices, you can use Apple Screen Time to block apps and notifications during specific time periods (like school or bedtime), limit who your child can communicate with across phone calls, video calls, and messages, block inappropriate content, and — of course — set screen time limits.

However, Apple Screen Time doesn’t allow parents to monitor the content within specific apps. While Apple’s Communication Safety feature helps protect kids from sharing or receiving inappropriate photos and videos, that same protection doesn’t apply to other apps your child may use, like YouTube and Snapchat.

Apple Screen Time doesn’t require that you download anything on your child’s phone, but you will have to create an Apple ID for your child and add them to Family Sharing. This platform is best for families who want to set specific guardrails on their child’s device, such as purchase limits on the App Store and content restrictions. Because Apple Screen Time is free, we recommend that parents explore the settings and use it regardless of what other parental monitoring tools they use. It’s a good safety net for parents and kids on iPhones.

BrightCanary is one of the best parental monitoring apps for iPhone because it monitors what your child messages and sends across all their apps. Other apps only alert you when your child encounters something concerning, but BrightCanary gives you the option to view your child's latest keystrokes, as well as their emotional well-being, interests, and more.

The app works via a smart and secure keyboard, which you install on your child's device. BrightCanary's advanced AI monitors your child's activity and sends real-time alerts about any concerning content. Parents who want to monitor both sides of text conversations, images, and deleted texts can subscribe to Text Message Plus within the app.

The app includes a chatbot called Ask the Canary, which gives you helpful parenting guidance and conversation-starters 24/7. With a high rating in the App Store, parents have called BrightCanary the “best monitoring app I’ve found for iPhone.”

BrightCanary doesn’t offer features like screen time limits or location sharing, because those are freely available through Apple Screen Time. BrightCanary is ideal for parents who want to monitor the content of their child’s online activities, including text messaging.

OurPact can manage or block any iOS app, including messaging and social media apps, and set screen time limits based on the schedules you set. The app also offers geofencing alerts, so you’ll know when your kids arrive or leave locations like home or school. The Premium plan allows you to view automated screenshots of your child’s online activity, including texts, although you won’t receive alerts based on content monitoring.

Many of the app’s features are already freely available with Apple Screen Time, but OurPact’s well-designed interface may make these features more accessible for stressed-out parents.

Preference and accessibility are two big factors to consider when you’re weighing parental monitoring apps for iPhone. Some apps are on the higher end of the price range because you get access to other features, like identity theft protection and location monitoring. Others differ in their underlying philosophy.

For example, BrightCanary is designed to encourage communication and independence; rather than blocking apps, BrightCanary gives parents visibility into what their kids are doing online, so they can talk about any red flags together. That insight, plus helpful summaries and AI-powered conversation starters, means that your child is learning how to use the internet safely with your support — rather than blocking everything until they graduate high school.

One key factor to consider is which apps you’ll be able to monitor on iOS. For example, Snapchat is a major concern for many parents, but most parental monitoring apps don’t include Snapchat on iOS. (You can monitor sent messages in Snapchat with BrightCanary, though.) If you have a specific concern in mind, double-check that the monitoring app covers it.

Apple has strict limitations on what third-party apps can access, so many parental monitoring apps are limited on what they can monitor in iOS. Some companies get around that by requiring that you install an extra app on your child’s device, but that can actually slow down your child’s phone and drain their battery over time. Weigh the pros and cons, have a frank conversation with your child about online safety, and go with the parental monitoring app for iPhone that works best for your family. You’ve got this, parent.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

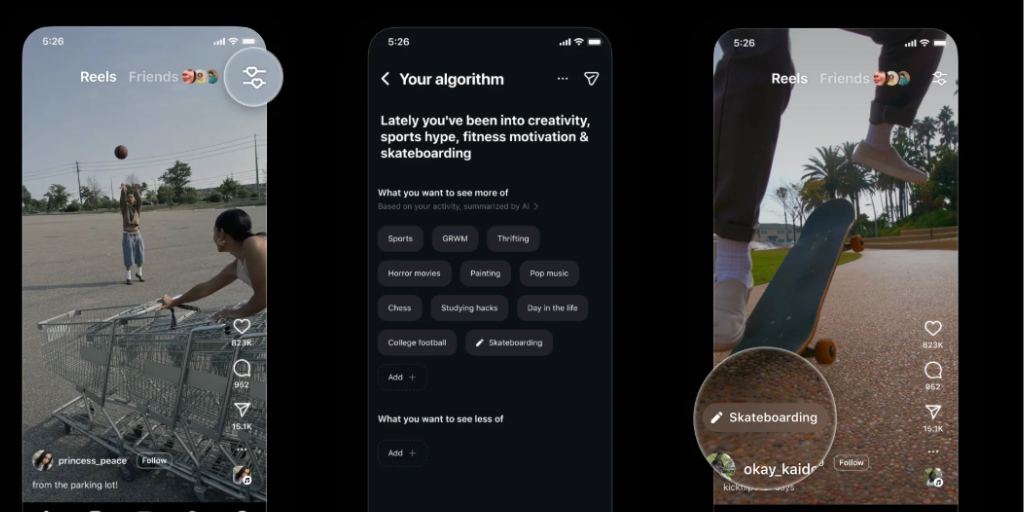

👉 Instagram will let your child pick what shows up in Reels: Instagram is doing something pretty unusual for a social media platform: explaining what’s under the hood. With a new feature called “Your Algorithm,” users can now see a summary of their recent interests and choose topics they want to see more or less of, like dialing up “jiu jitsu” and dialing down “AI cat videos.”

For parents, this product update is also a conversation-starter with your teen. Social media algorithms aren’t neutral. They learn from behavior, reward attention, and quietly shape what kids see day after day. This feature offers a rare chance to pause, scroll through it together, and ask:

Why do you think Instagram thinks you’re interested in this?

How do videos like this make you feel after watching them for a while?

What would you want to see more of (or less of) if you had the choice?

Our take: Tools like this don’t “fix” social media, but they do help kids understand that feeds are designed to hook you based on your interests. The more teens understand how algorithms work, the better equipped they are to use platforms intentionally instead of getting pulled along for the ride. For more on this, browse our parent’s guide to social media algorithms, and learn how to reset your child’s algorithm on popular platforms.

🎁 Thinking about a smartphone for the holidays? Read this first: If a phone is on your child’s holiday wishlist, new research suggests it’s worth waiting. A large study published in Pediatrics found that kids who got their first smartphone before age 13 had significantly worse health outcomes than peers without phones:

Additionally, a new study from the American Psychological Association links short-form video content to significantly diminished mental health and poorer attention spans.

The median age for getting a phone in the U.S. is now 11, which means many kids are entering middle school with a powerful device and very few guardrails. However, the takeaway from experts isn’t panic: it’s constraints. Use parental controls like Apple Screen Time to set restrictions on device use, and use a monitoring app like BrightCanary to stay informed about what your child encounters online.

One simple, high-impact step? Keep phones out of bedrooms overnight. It’s not a cure-all, but it’s one of the easiest ways to protect sleep and manage device boundaries, even if your child already has a phone.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

A few questions to help kids think critically about feeds, phones, and habits:

📰 We were included in Wirecutter’s roundup of best parental control apps! Check us out under "Other parental control apps worth considering."

🚫 “It was kind of scary, because social media is so present in my life, and to think it could be taken away like that so suddenly felt weird.” Australia’s social media ban kicked in last week, effectively banning teens under age 16 from using Instagram, YouTube, TikTok, and other major platforms. Here’s how teens are responding.

🤖 Researchers warn that popular AI tools are offering dieting advice, tips for hiding disordered eating, and even generating hyper-personalized “thinspiration” images. Experts say this content can be especially dangerous for vulnerable teens — and much harder to spot than traditional social media posts.


🇸🇪 Sweden pulls the plug on screens in childhood: Sweden — home of Spotify, Minecraft, and a very tasty Christmas soda called Julmust — has long embraced the idea that kids thrive with freedom. Many parents and educators extended that same philosophy to screens, allowing kids free digital access with limited oversight. In 2019, digital tools were even mandated in the national curriculum for 1- to 5-year-olds, fueled by concerns that Swedish kids would fall behind in an AI-driven future.

Then came the data. In 2022, Swedish 15-year-olds recorded their lowest math and reading scores in a decade, and more than a quarter performed poorly in math. The country’s education agency found that students who used digital media for things other than learning performed the worst. Additionally, nearly 9 in 10 teachers said smartphones were harming students’ learning, stamina, and attention spans.

Sweden course-corrected with its first-ever national screen time guidelines in 2024:

The country also banned phones from classrooms and boosted physical textbooks and library funding. Sweden’s actions illustrate that we can’t expect our kids to be prepared for the digital future if they don’t learn how to use those devices safely and responsibly. Following strict screen time limits is one method. The other is staying involved in what they do online and setting guardrails in and out of the home. What do you think about Sweden’s screen time guidelines?

🧸 OpenAI blocks toymaker after AI toy crosses the line with kids: AI toys are everywhere right now, but not all of them are safe for kids. A new report from the Public Interest Research Group found that some AI-enabled toys were quick to discuss inappropriate or dangerous topics with young children. One AI teddy bear gave minors instructions on how to light matches or find knives in the home, along with explicit advice. (OpenAI has since suspended the toymaker, FoloToy, following the investigation.)

“It’s great to see these companies taking action on problems we’ve identified. But AI toys are still practically unregulated, and there are plenty you can still buy today,” report coauthor RJ Cross, director of PIRG’s Our Online Life Program, said in a new statement. “Removing one problematic product from the market is a good step, but far from a systemic fix.”

If you’re shopping for young children this season, advocacy groups urge parents to avoid buying AI-enabled toys right now.

🤖 Parents, turn on this new setting if your child uses TikTok: AI-generated videos, filters, and characters are flooding TikTok — but now you can control how much of it appears on your child’s For You page. TikTok’s new AI-generated content control lets users dial AI content up or down directly in settings. To adjust it, sit down with your child and go to their TikTok settings > Content Preferences > Manage Topics. Then, adjust the slider for AI-generated content (we recommend dialing this all the way down). TikTok is rolling out this new feature to accounts over the coming weeks.

Pair this with conversations about what’s real vs. AI-created, and why they shouldn’t automatically trust everything they see online. Not sure how to start the conversation? Check out our guide about how to talk to your kid about AI-generated deepfakes.

🏛️ Congress unveils major kids’ online safety package: The House Energy and Commerce Committee released 19 bills focused on protecting kids online. The package includes a revised version of the Kids Online Safety Act (KOSA), though without the broad “duty of care” language that sparked First Amendment concerns. Instead, platforms would need “reasonable policies and procedures” to address four harms: physical violence, sexual exploitation, drug/alcohol/tobacco-related risks, and financial harm and scams. Advocates say it’s progress, while critics say the new version of KOSA won’t do enough. We’ll keep you posted.


Let’s talk about screen balance, Sweden-style. With new global conversations around screen time and well-being, this is a great week to check in with your child about how screens fit into their everyday life. Here are conversation-starters to help your kids reflect on their own habits:

📉 Social media breaks really do help. A new JAMA study found that young adults who took a one-week social media detox reported fewer symptoms of depression, anxiety, and insomnia. On average, anxiety symptoms dropped by 16.1%, depression symptoms by 24.8%, and insomnia symptoms by 14.5%, with the biggest improvements among participants who had more severe depression beforehand.

🇦🇺 Meta begins shutting down under-16 accounts in Australia. Ahead of the country’s new teen social media ban, Meta is revoking access for users under 16 and blocking new accounts. Age verification remains the biggest challenge, though.

🔥 Oxford’s Word of the Year is … “rage bait.” Rage bait is defined as “online content deliberately designed to elicit anger or outrage by being frustrating, provocative, or offensive, typically posted in order to increase traffic to or engagement with a particular web page or social media content.” Usage has tripled this year, as platforms struggle with content designed to provoke outrage for clicks — another reminder to help kids spot manipulation online.


🤖 Character.ai to ban teens from talking to its AI chatbots: The chatbot platform recently announced that, beginning November 25, users under 18 won’t be allowed to interact with its online companions. The change comes after mounting scrutiny over how AI companions affect users’ mental health. In 2024, Character.ai was sued by the family of 14-year-old Sewell Setzer III, who accused the company of being responsible for his death. Character.ai also announced new age verification measures and funding for a new AI safety research lab.

Teens will still be able to use Character.ai to generate AI videos and images through specific prompts, and there’s no guarantee the new age verification measures will stop teens from finding workarounds. If your teen uses AI companion apps, talk to them about the safety risks, use any available parental controls, and stay informed about how they interact with AI chatbots. And remember: for every app like Character.ai, there are countless others that aren’t taking the same steps to protect younger users.

Learn more about Character.ai on our blog, and use BrightCanary to monitor your teen’s interactions across every app they use, including AI apps.

🚫 Instagram shows more disordered eating content to vulnerable teens: According to an internal document reviewed by Reuters, teens who said Instagram made them feel worse about their bodies were shown nearly three times more “eating disorder–adjacent” content. Posts included idealized body types, explicit judgment about appearance, and references to disordered eating.

Meta also admitted that its current safety systems failed to detect 98.5% of the sensitive material that likely shouldn’t have been shown to teens at all. While Meta says it’s now cutting teen exposure to age-restricted content by half and introducing a PG-13 standard for teen accounts, these findings highlight a major gap between company promises and real-world outcomes.

Parents shouldn’t wait for algorithms to get it right. If your teen uses Instagram:


Let’s talk about fandoms and why your teen might feel really attached to someone they’ve never met. Whether it’s a YouTuber who “gets them,” a favorite pop star, or an AI companion that feels like a friend, these relationships can make kids feel seen and part of a community. But they can also blur the line between admiration and obsession.

Use these conversation-starters to help your teen think critically about their online relationships:

👀 Elon Musk has launched Grokipedia, an AI-generated online encyclopedia positioned as a rival to Wikipedia, but it’s still unclear how it works. Users have reported factual inconsistencies in Grokipedia’s articles, so now’s a good time to talk with your child about checking their sources.

😔 High schoolers are so scared of getting filmed that they’ve stopped dating. This piece from Rolling Stone explains how the unchecked culture of public humiliation on social media is fueling mistrust among young men, making them hesitant to pursue relationships.

👋 We share even more parenting tips and resources on our Instagram. Say hi!