Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

📵 Predators are using TikTok to exploit minors. Minors are using TikTok’s live feature to perform sexually suggestive acts on camera in exchange for money and gifts, according to a report by Forbes and documentation from TikTok’s own internal investigation. NPR and Kentucky Public Radio also found that TikTok tweaked its algorithm to more prominently show attractive people, and that the company had quantified how long it takes for viewers to become addicted to the app: 260 videos, or under 35 minutes. Even though minors aren’t allowed to livestream or receive gifts, it’s relatively easy for children to fib about their age when they sign up. Performing suggestive acts on camera in exchange for gifts is just one way predators can groom targets for sexual abuse and sextortion. TikTok says it has a zero-tolerance policy for child sexual abuse material, and the platform does have parental controls — but they only work if your child sets their correct birthdate.

🤖 Social media companies aren’t doing enough to stop AI bots. That’s according to new research from the University of Notre Dame, which analyzed the AI bot policies and mechanisms of eight social media platforms, including Reddit, TikTok, X, and Instagram. Harmful artificial intelligence bots can be used to spread misinformation and hate speech and to commit fraud or run scams. Although the platforms say they have policy enforcement mechanisms in place to limit the prevalence of bots, the researchers were able to get bots up and running on all the platforms studied. If you haven’t talked to your child about the risks of bots, misinformation, and online scams, now’s the time — if your child has used any social platform, odds are high that they’ve encountered a bot already.

😩 Teens are stressed about their future, appearance, and relationships. A team of researchers surveyed US teens about the stressors they’re facing. A majority (56%) of teens are stressed about the pressure to have their future figured out, 51% felt pressure to look a certain way, and 44% felt like they needed to have an active social life. While adults drove teens’ pressure to have their futures planned out and to achieve the most, the pressure to have an active social life and keep up appearances was driven by social media, the teens themselves, and peers. Teens are struggling to reduce those stressors, too — time constraints, difficulty putting tech away, and feeling like rest isn’t “productive” enough were all barriers to practicing more self-care. Techno Sapiens breaks down things parents can do to help their stressed-out teen.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Helping your teen manage stress starts with open and honest conversations. Here are five conversation-starters designed to prompt meaningful chats about self-care, stress management, and healthy ways to navigate the pressures they face:

It’s spooky season! A Good Girl’s Guide to Murder is a popular young adult mystery thriller (and Netflix series) — but is it safe for kids? If your child is interested in this series, read this guide first.

Are your child’s group chats causing major drama in their friend group? Here’s what parents need to watch for when their child starts texting independently — and how to help your child handle it.

🤳 Instagram remains the most used social app among teens, followed by TikTok, according to a new report by Piper Sandler.

🎃 Halloween is next week! In Washington, where BrightCanary is based, the most popular Halloween candy is Reese’s Peanut Butter Cups. What’s the most popular treat in your state?

📍 We’re on Pinterest! Follow BrightCanary to keep up with our latest parenting tips, infographics, and resources.

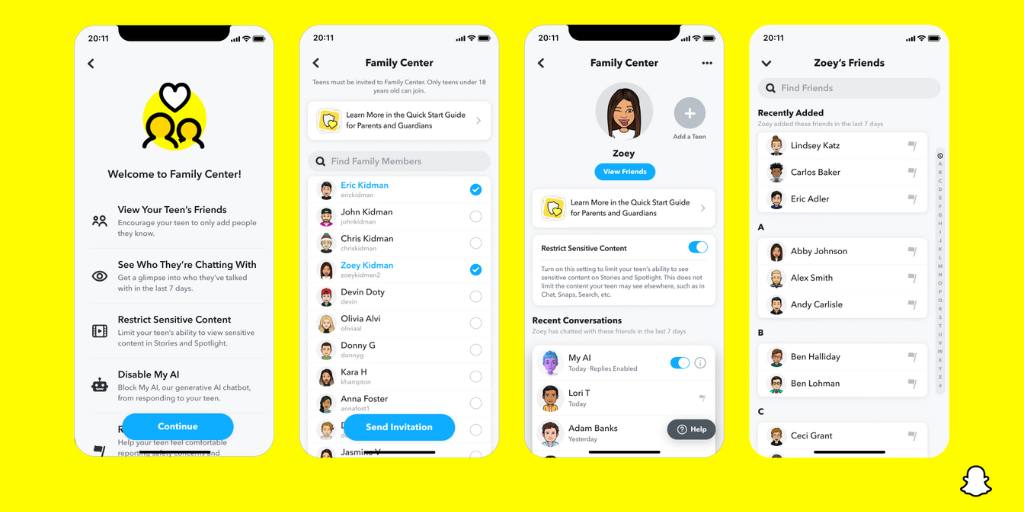

Did you know that Snapchat has free parental control features within the app? If you didn’t know, you’re not alone. Although 20 million teenagers use Snapchat in the United States, only around 200,000 parents use Snapchat Family Center to supervise their accounts. If you’re wondering how to use Snapchat Family Center, this guide explains what parental controls Snapchat offers, how to set it up, and how to use it effectively to keep your teen safe.

Snapchat Family Center helps parents keep tabs on their child’s contacts and conversations. Parents can see who their child is communicating with and how frequently, but it doesn’t reveal the content of their messages.

Family Center also allows parents to restrict sensitive content in Snapchat’s Stories and Spotlight sections. Because that content is posted by other users and publishers, these sections can expose kids to inappropriate material.

This suite of Snapchat parental controls also makes it easy to report suspicious accounts or concerning behaviors directly through the app. If your child connects with someone unfamiliar or if any interactions raise red flags, you can immediately take action.

In the Family Center, parents can see:

It’s important to note that Family Center doesn’t provide access to your child’s Snaps. The tool is designed for awareness rather than for monitoring the content of their messages for topics like explicit content or drug references.

It’s free and simple to set up Snapchat Family Center. First, you’ll need a Snapchat account. Then, follow these steps:

That’s it! Once your teen accepts the invitation, you’ll be able to see their most recent interactions and set content limits.

Snapchat Family Center doesn’t allow parents to see the content of their child’s messages. Parents who want more visibility can use child safety apps. The benefit of these third-party apps is that they allow parents to monitor messages for red flags, such as conversations about self-harm or drug use. The downside is that most of these apps don’t work with Snapchat on Apple devices ... except BrightCanary.

BrightCanary is a child safety app that allows parents to monitor what their kids message on Snapchat, Instagram, TikTok, and every other social media app they use. Download it today and try it for free.

Even the best parental control features don’t work if your child doesn’t agree to them. If your child pushes back on using Snapchat Family Center, start by having an open and honest conversation about your concerns.

Start by explaining that you’re not trying to spy on them. Instead, you’re trying to ensure they’re interacting safely and responsibly online. Talk about some of the risks associated with Snapchat, like getting added to group chats with strangers, seeing inappropriate content on their Spotlight feed, or even the risk of getting approached by drug dealers, who regularly use Snapchat and other social media platforms to sell illicit substances to minors.

You can also approach this conversation as a team effort. Ask your child why they feel uncomfortable using parental controls, and try to understand their perspective. Acknowledge their need for independence, and then set some ground rules together.

For instance, you might position Family Center as a requirement for device use; if they want their own phone and Snapchat account, they need to agree to parental supervision. Maybe you all agree to use Family Center for a trial period, then revisit the conversation in a few months.

Snapchat’s Family Center is a step in the right direction for promoting safe digital habits for teens. While it doesn’t offer access to message content, it provides valuable insights into who your child is messaging. Most importantly, maintain open communication with your child about safe social media use.

To monitor what your child says to the people they message on Snapchat, you need BrightCanary. The app offers the most comprehensive monitoring across all apps on Apple devices, including Snapchat, text messages, and more. Try it for free today.

🚫 More kids are contending with AI-generated explicit photos: The Center for Democracy and Technology (CDT), a nonprofit focused on digital rights and privacy, recently reported a concerning rise in intimate images being shared without consent in American schools. During the past school year, 15% of high school students heard about a sexually explicit deepfake (an AI-generated image) of someone associated with their school, and 11% of kids ages 9–17 knew a peer who used AI to generate nude images of others.

This alarming trend can ruin a child’s self-esteem, social life, and well-being. It’s fueled by the widespread availability of generative AI apps, which some students may use without fully understanding the risks and consequences. According to CDT’s survey, fewer than 20% of high school students said their school had explained what a deepfake nude is, and a majority of parents reported that their child’s school provided no guidance about authentic or AI-generated non-consensual intimate imagery (NCII). For more information and tips on how parents can reduce the risk for their kids, check out our blog.

👀 California Governor Gavin Newsom signed SB 976, also known as the Protecting Our Kids from Social Media Addiction Act. The bill prohibits online platforms from knowingly providing an “addictive feed” to a minor without parental consent. The bill also prohibits social media platforms from sending minors notifications during school hours and late-night hours. The regulations won’t go into effect until January 1, 2027, to allow time for the very likely court challenges from opposition groups, such as tech lobbyists and the Meta legal team.

There’s an alarming trend on the rise: AI-generated explicit images circulated among high schoolers. What are deepfake nudes, and how can parents prevent them?

Looking for a new read to help you navigate parenting in the age of social media and smartphones? We’ve got you covered with this list.

When was the last time you had a really meaningful conversation with your child? Maybe you tried, but they clammed up or just repeated a series of “I don’t know”s and noncommittal grunts. Here are some conversation-starters to have better chats with your child, based on advice from psychotherapist Anna Marcolin, LCSW. Remember, approach the conversation with curiosity — not judgment.

🦄 Fortnite now allows parents to set time limits for their kids. If you’re curious about other Fortnite parental controls, we’ve got you covered on the BrightCanary blog.

😮‍💨 Women tend to do more of the day-to-day online work for the family, such as ordering essentials online, managing digital communications with schools and doctors, and planning events with other parents — all of which contribute to digital overload and even burnout, BBC reports.

🫶 How do you raise a well-adjusted adult? That’s the million-dollar question. Dr. Cara Goodwin of Parenting Translator breaks down the factors supported by research: a warm and loving parent-child relationship, and encouraging kids to take control of their thoughts and feelings.

💁 Preventing online addiction in kids requires a multi-pronged approach: teach kids about digital literacy, set rules and limits on devices, promote offline activities, and use digital monitoring tools alongside regular communication. Read more tips over at Psychology Today.

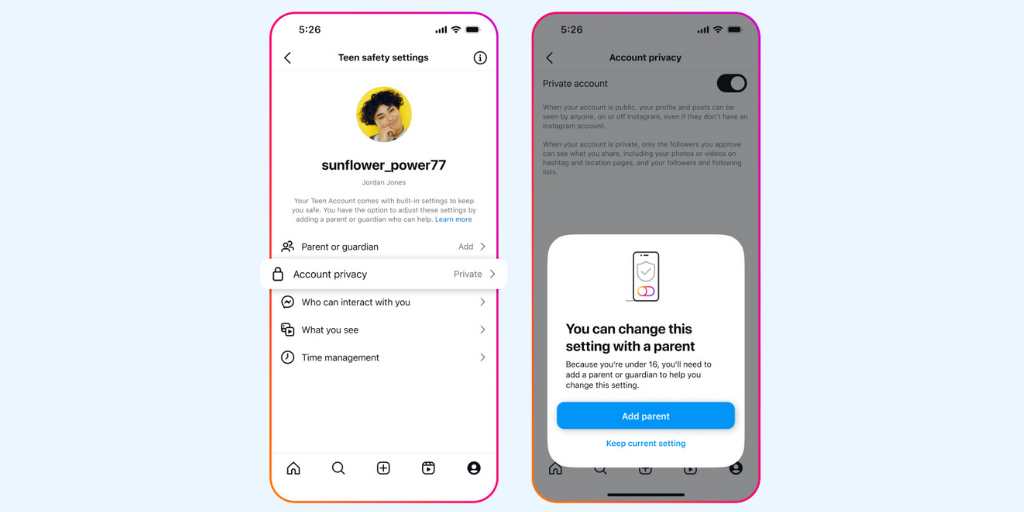

🛡️ Meta announces Teen Accounts: If your teen uses Instagram, their account will automatically convert to a Teen Account with added safety protections over the next few months. The update, which affects all new and existing accounts for teens ages 13–17, comes as social media companies face mounting pressure to do better for their youngest users. Many of the features have existed since 2021, but required users to opt in. Now, these protections will be automatically applied, along with improved age verification measures.

Some of the key features for teen accounts include:

Parents can also use updated supervision tools to see the topics their teen engages with and who they’re messaging, although you won’t be able to view the content of the messages. (To monitor those Instagram DMs, you’ll need BrightCanary.)

Our take: It’s about time. Instagram’s existing parental controls were confusing and difficult to find. This update applies common-sense safety measures to teen accounts, but parents still need to stay involved — and make sure their teen isn’t fibbing about their age online.

⚖️ KOSA and COPPA 2.0 advance in the House: Two major child online safety bills have moved out of the House Committee on Energy and Commerce: the Kids Online Safety Act (KOSA) and the Children and Teens’ Online Privacy Protection Act (COPPA 2.0). KOSA would hold social media platforms accountable for harm caused to underage users, while COPPA 2.0 expands and updates the outdated 1998 COPPA law. KOSA had previously passed the Senate but faced some last-minute amendments in the House committee, including the removal of a provision that would have held platforms responsible for mitigating anxiety, depression, and eating disorders. While both bills are now set for a full vote in the House, it’s not clear whether they’ll gain enough support to pass.

😬 FTC finds that social media companies don’t adequately protect kids: A new FTC report found that large social media and video streaming companies surveilled consumers in order to monetize their personal information, and that they failed to protect young users online. The companies cited in the report include Amazon (which owns the gaming platform Twitch), Meta, Snap, Discord, and ByteDance, which owns TikTok. The FTC described the companies’ data collection, minimization, and retention practices as “woefully inadequate,” which is about as harsh a burn as you can get from the FTC, and urged companies to provide greater safety measures for children. The FTC also called on Congress to pass stricter federal privacy legislation.

If your child uses Snapchat, you’ve probably heard about the social clout Snapstreaks can carry. But what is a Snapstreak, how does it work, and should parents be worried?

Monitoring your child's texts can reveal warning signs of risky behavior or distress, but what should parents look for? We spoke with psychoanalyst and life coach Anna Marcolin for tips.

From the moment your child gets home, they’re thinking about what’s happening on Instagram or what their friends are sharing on Snapchat. If your child is hyper-focused on social media and dealing with FOMO (fear of missing out), it’s time to help them refocus their energy on real-life hobbies, interests, and activities. Here’s how to start the conversation.

😤 What is the worst argument social media companies use to defend themselves? “Instead of acknowledging the damage their products have done to teens, tech giants insist that they are blameless and that their products are mostly harmless,” write Zach Rausch, Jon Haidt, and Lennon Torres for The Atlantic.

👎 Nearly half of Gen Zers wish social media had never been invented, according to a new survey by The Harris Poll. Eight in 10 Gen Zers have taken steps to limit social media usage at some point, such as unfollowing or muting an account (42%) or deleting a social media app (40%).

📰 Around half of TikTok users (52%) now say they regularly get their news on the platform, up from 43% last year and just 22% in 2020.

📅 The Australian government is considering a minimum age limit for teens to use social media, citing mental health concerns.

😩 Surgeon General says parental stress is a public health issue: In a new public health advisory, US Surgeon General Vivek Murthy called for policy changes that better support parents and caregivers. The advisory noted that 48% of parents report that their stress is completely overwhelming, compared to 26% of other adults. The amount of time parents spend working has increased (+28% for moms, +4% for dads), and so has the amount of time they spend engaged in primary child care (+40% among moms, +154% among dads). Murthy called for safe, affordable child care programs, predictable workplaces and understanding workplace leadership, and community spaces (such as playgrounds and libraries) that give children room to play while fostering social connection among parents.

👎 Snap and TikTok sued for failures with child safety: The attorney general of New Mexico filed a lawsuit against Snap, the parent company of Snapchat, alleging that the company’s design features (namely, disappearing messages and images) facilitate sexual abuse and fail to protect minors from predation. Additionally, a U.S. appeals court has ruled that TikTok must face a lawsuit over a 10-year-old girl’s death. The girl’s mother, Tawainna Anderson, is pursuing claims that TikTok’s algorithm recommended a viral “blackout challenge” to her daughter, Nylah.

📹 YouTube introduces content rules and new supervisory tools for teens: YouTube is limiting content that could be problematic for teens if viewed repeatedly. This includes content that promotes weight loss, idealized physical appearance, and social aggression. The platform also introduced new parental controls for teen users, allowing parents to link their account to their teen’s in order to view their YouTube activity. Parents will be able to view their child’s uploads, subscriptions, and comments — but not their content. (For that, you’ll need a child safety app like BrightCanary.)

Snapchat can be risky for kids because of how easily strangers can contact them and messages can disappear. Here’s what parents need to know about the platform.

Many apps promise to help you monitor your child’s texts, but finding one that actually works well is an uphill battle. We’ve done the research to find the best of the best.

Even when your child’s social media feed doesn’t explicitly promote eating disorders, content can still encourage unhealthy behaviors or unrealistic body standards in subtle ways. Here are some conversation-starters to help you talk to your kids about content that promotes disordered eating behaviors and body negativity.

📵 “Just like it is impossible to train your child to drive a car without supervising from the passenger seat, you cannot train your child to be smart online if you are not privy to what he is doing in that world,” writes Melanie Hemp of Be ScreenStrong. Read more about your teen “earning” smartphone privacy.

📱 If your kid keeps getting around Apple Screen Time limits, what are your options? On the BrightCanary blog, we explain common workarounds and how parents can prevent kids from sneaking past their screen time boundaries.

👻 We’re adding a new, much-requested platform to the BrightCanary app in the coming weeks — stay tuned!

As back-to-school season kicks off, the debate over cell phone bans in schools is heating up. From Los Angeles Unified to the entire state of Florida, more schools are telling kids to keep their devices locked up from bell to bell. So, why are schools banning phones — and should your local district ban them, too?

Why are schools banning phones? Cell phone restrictions are becoming more common as educators grapple with the impact of smartphones on learning. Over 70% of high school teachers say that student phone use is a major problem. According to the National Center for Education Statistics, 77% of U.S. public schools now prohibit non-academic use of cell phones during school hours.

The goal of these bans is to create an environment where students can focus on school, without the constant pull of devices. Some schools require students to put their phones in backpacks or special lockers that can only be opened at the end of the school day. Others leave teachers to fend for themselves, leading to an inconsistent mishmash of cell phone rules between classrooms.

Should schools ban phones? Cell phones are disruptive, especially for kids. Students can take up to 20 minutes to refocus on what they were learning after being distracted (which is literally what phones were designed to do). Studies show that removing phones from classrooms can lead to better academic performance, test scores, and self-regulation skills.

Not everyone is on board. One major source of pushback is parents who are used to being in constant contact with their children throughout the school day. Some parents rely on texting or calling their kids during school hours (often for non-emergencies), which can disrupt the learning environment. Others will even FaceTime their kids to talk about assignments or quiz grades … in the middle of class.

But as Mercer Island School District in Washington demonstrates, there are ways to navigate this challenge.

MISD recently introduced a comprehensive phone-free policy in partnership with Yondr, a company that provides lockable phone pouches for students. With few exceptions, all students must have their own Yondr pouch and place their devices in it at the beginning of the day, and they can unlock it at the end of the day.

For emergencies, parents can call the school to deliver a message, or kids can come to the front office to use a phone … just like the days before iMessage. On an impressively detailed page on its website, the district is clear: some people are very used to communicating schedule changes and practice/game/event schedules via cell phones. Those people (students, parents, and staff) will have to adjust.

With the right communication and planning, a phone-free school environment is achievable.

Parents, encourage your child to use their phone responsibly, and set an example by giving them space during the school day. After all, the goal is to help them develop healthy habits that will serve them well beyond their school years.

If your child’s school is considering a phone ban, advocate for policies that are logistically possible and enforceable. Away for the Day maintains an impressive list of policy examples across the country.

Did you know that BrightCanary's new AI feature summarizes text messages, detects concerning content, and even coaches you through parent-child talks? Hear from co-founder Steve Dossick about how the new feature works.

A Court of Thorns and Roses is the first volume in an ongoing and highly popular romantasy series by Sarah J. Maas. But is ACOTAR for kids? We break down what parents should know about this series.

Is your child struggling to stay off their phone during school hours? It’s time to talk to them about boundaries and possible solutions. Here are a few conversation-starters to talk to your child about swiping and scrolling during class and how to take more responsibility for their habits.

😮‍💨 About a quarter of young people use social media almost constantly throughout the day, mostly for entertainment and communicating with friends, according to a new survey. More than three-quarters are aware that’s a problem and try to control their use: 67% curate their feeds to get rid of what they don’t want to see, and 63% take a break from their social media accounts.

🤗 How do you raise empathetic children? It comes down to empathetic parents: pay attention to what they’re feeling, try to understand their problems rather than minimizing them, and offer emotional support.

📺 Just dropped: A major systematic review of current research on screens for young kids (0 to 6). Some key takeaways, via Techno Sapiens: avoid using screens while interacting with kids, choose age-appropriate content, and avoid having TV on in the background — there’s evidence that it makes it more difficult for kids to focus their attention on whatever else they’re doing.

It’s harder than ever for teens to make friends in the digital age. Having strong friendships in adolescence is associated with better mental and emotional health as adults, but between the constant pressures of social media and the normal growing pains of being a teenager, your teen might feel isolated, lonely, and unsure what to do about it. If you’re wondering how to help your teenager make friends, here’s what you should know.

Kids are lonelier today than ever. Compared to those over 70, people aged 16–29 are twice as likely to say they feel lonely “often or always.” What’s more, over 30% of young people say they don’t know how to make new friends.

Loneliness isn’t just a fleeting feeling — it can increase the risk of depression and anxiety among adolescents. That’s a problem, considering we’re already in the midst of a youth mental health crisis.

Positive relationships can help improve everything from school attendance to your child’s likelihood of graduating college. But the way teens make friends has changed over time. The rate of teens meeting up with their friends “almost every day” has decreased from 50% in the ’90s to 25% today.

What changed? Although the percentage of teens meeting up with friends has been declining over the last few decades, there’s a steep drop-off in 2010, when smartphones went mainstream. Text messages, social media, and Snapstreaks became the digital currency of social clout. It became easier for teens to feel connected online, even though offline friendships are more meaningful and intimate.

Being a teenager is radically different today than it was a few short years ago. That means making friends and maintaining strong friendships is radically different, too.

If you want to know how to help your teenager make friends, the biggest step is encouraging your teen to try new things. It’s hard to meet new people without going into new spaces, so you’ll want to work with them to discuss their interests and brainstorm ways they can use those interests to spark new connections. Here are some places to start:

You might feel super motivated to help your teen make friends, but keep in mind that you don’t always know the finer social dynamics of their world. Your teen might not even realize that they feel lonely. They might even feel overwhelmed if you throw a bunch of ideas at them at once.

Instead, get them talking. Here are some ways to spark a dialogue:

Be open, not judgmental. If you’ve noticed that your teen is spending more time alone than normal, ask them about it in a non-confrontational way, like, “I noticed you’re not hanging out with Sammy as often. Is everything okay?”

Show interest in their interests. Teens can easily pursue their passions and find niche communities online, but you wouldn’t know it unless you get involved. If your child mentions something they enjoy, ask them what they like about it. It might lend itself to an in-person activity. For example, if they’re really into a certain series, you might suggest inviting a friend and hosting a movie night.

Validate their feelings. If your teen says they feel like they don’t have any friends, you might be tempted to give them a million and one solutions to the problem. Instead, give them space to talk about their experiences. Questions like “That’s rough. Can you tell me more about how you feel?” or “What makes you think that?” can encourage your child to keep talking and give you space to examine what might be holding them back from making friends.

It can be difficult for parents to recognize when their teens are struggling, especially if they tend to isolate themselves behind screens. Monitoring your child’s online activity can help identify any red flags, like signs of depression or conflicts that might hinder their ability to make friends.

BrightCanary monitors what your child types across their text messages, social media, Google, YouTube activity, and more. The app uses the American Psychological Association’s emotional communication guidelines to categorize conversations, so you can identify anything concerning at a glance.

Plus, our AI-generated summaries make it easy to understand online messages, identify any potential issues early on, and have more informed check-ins with your child.

Look for signs of withdrawal, increased screen time, or reluctance to engage in social activities. Ask your child how they spend their free time—do they check in with friends during passing periods and participate in after-school activities, or do they spend most of their time alone?

Encourage open communication, help them explore new social groups, and monitor for any signs of bullying or depression. Recognize that you may not be able to solve their unique social dynamics, but you can give them space to work through their feelings and find different spaces for connection.

Yes, it's becoming more common, but it's important to ensure these online friendships are healthy and positive.

Focus on quality over quantity. Encourage them to pursue hobbies or activities where they can meet people with similar interests in smaller, more comfortable settings. Introverted teens may not want to attend large sporting events, but they may be interested in a book club or cooking class.

It's important to find a balance—be supportive and present, but allow them the independence to navigate social situations on their own. Remember that their social life includes the digital world, and you’ll want to find a way to stay involved that works for your family. We recommend starting with regular online safety check-ins.

While making friends can be challenging, it’s a crucial part of teenage development. As a parent, you can help your teen brainstorm different ways to expand their social circle online and offline based on their interests. Sometimes, the most important thing you can do is give them space to talk and find solutions on their own (with your encouragement and guidance, of course).

What can tech companies do to keep kids safe online? The Biden-Harris Administration’s Kids Online Health and Safety Task Force recently released a comprehensive report with key recommendations for kids to have safer experiences online and on social media. With input from youth advocates, civil society organizations, and academic researchers, the report lays out what parents, caregivers, and the tech industry can do to address the ongoing youth mental health crisis. Let’s discuss the report’s recommendations for Big Tech and what that means for parents today.

The Kids Online Health and Safety Task Force was established in 2023 as part of the Biden-Harris Administration’s broader effort to address the ongoing youth mental health crisis. While social media and technology haven’t caused the crisis, evidence suggests that the increasing prevalence of digital technology in children’s lives has exacerbated the issue — to the point that the U.S. Surgeon General has called for warning labels on social media, much like the ones you see on tobacco and alcohol products.

Co-led by the Substance Abuse and Mental Health Services Administration (SAMHSA) and the National Telecommunications and Information Administration (NTIA), the Task Force aims to advance the health, safety, and privacy of minors online.

The report, titled “Online Health and Safety for Children and Youth: Best Practices for Families and Guidance for Industry,” is a detailed analysis of both the risks and benefits that social media and digital platforms pose to our kids.

While digital technology can enhance education and social connection, it also exposes young users to serious dangers, including cyberbullying, misinformation, and exploitation. The report stresses the need for protective measures that safeguard kids’ mental and physical well-being. Some of those measures will require broader regulation at the federal level, like what we see happening with the Kids Online Safety Act. Other measures can (and should) be implemented sooner rather than later.

The report outlines 10 key recommendations for Big Tech companies to foster safer online environments for kids — here’s a high-level overview:

We hear a lot about the need for parents to protect their children online. While it’s true that parents can and should use monitoring tools and child safety apps to guide, protect, and support their kids online, it does parents a disservice to place the burden of accountability solely on them. A lot of parental control settings are difficult to find on devices and apps. It’s common sense that children should have the highest privacy settings enabled by default. And major tech companies have shown time and time again that they aren’t capable of putting children’s best interests ahead of their bottom line.

By designing platforms with youth in mind, companies can protect children from the adverse effects of social media, such as exposure to harmful content and addictive features. These changes can also promote a healthier digital environment where children can benefit from technology without falling victim to features that compromise their well-being. But these changes will only happen if tech companies act on the report’s recommendations.

While the tech industry plays a crucial role, parents also have a part in ensuring their children’s online safety today. Parents should:

Kids deserve a safer digital environment, but they’re growing up in a digital world that wasn’t designed with their best interests in mind. Tech companies should take a stronger stance on child online safety, but these changes take time — which is why it’s important for parents to stay informed and involved today.

Be the most informed parent in the room. Subscribe to our biweekly newsletter Parent Pixels.

Monitoring your child’s text messages can help you ensure their safety and well-being. But when your child receives thousands of texts per week, the practicality of reading their messages becomes overwhelming. What if you miss something important, like references to bullying, drugs, or self-harm? Good news: We’re parenting in the digital age, and there are tools available that can make text monitoring easier and more effective.

BrightCanary’s new AI-powered text message summary feature helps parents stay informed, without having to read every individual message. The app’s advanced technology not only summarizes text threads, but it also provides insights into your child’s emotional well-being and tips on how to have meaningful conversations. We sat down with BrightCanary CTO and co-founder Steve Dossick to talk about the new feature and how it sets BrightCanary apart from other child safety tools.

Rebecca Paredes: Can you explain how BrightCanary’s new text message summary feature works?

Steve Dossick: Our goal is to help parents stay informed about their children’s lives and alert them when there’s a concern. To do that, we first anonymize each conversation thread and ask AI to do a couple of things:

We use the latest large language models (LLMs) and supply them with extensive parenting guidelines so their answers are tailored to supporting parents. In other words, we created a parenting-focused AI and then asked it to help parents.

RP: How accurate is this feature in measuring emotions and summarizing texts?

SD: No computer system is perfect, but we were truly astonished at how accurately the latest LLMs are able to summarize content and interpret human emotions. It seems strange to ask a machine to report on human emotions, but these AI systems are incredibly capable of understanding and summarizing text written by humans.

RP: How does the AI handle potentially sensitive or alarming content in text messages?

SD: The AI is trained on vast amounts of internet content, so it can recognize patterns in text messages and identify sensitive or alarming content. We also tell the AI the child’s age range, which helps maintain accuracy.

RP: How do parents feel about text message monitoring?

SD: Parents want to know that their kids are staying safe, especially in their first journeys online. We want to provide guardrails so they’re ready for all the positives and negatives the internet can throw at them as they grow older and more independent. At the same time, privacy remains important, particularly for older children and teens.

After launching our text message monitoring feature, we quickly decided that we wanted to use the power of AI to summarize text threads. That way, parents don’t need to take the time to read every last message, and kids keep a level of privacy.

Of course, if something truly concerning is going on with a child, parents can still read the full thread. Additionally, our Ask the Canary AI offers guidance on addressing specific issues, like handling bullying at school.

RP: How do you see this feature evolving in the future? Are there any additional functionalities you plan to add?

SD: We’ve deployed this feature for text message monitoring, but we plan to use it across all of our child monitoring features (YouTube, Google, Instagram, TikTok).

RP: What makes this feature different compared to other text monitoring tools available?

SD: Most existing text and image monitoring tools were designed for user-generated content sites like Yelp or TripAdvisor. Those sites are concerned with catching reviews that contain profanity or other objectionable references. However, children’s text message conversations are drastically different from the content those tools have been trained on.

Kids send one-word replies, abbreviations (mb, wyd), and misspellings. Traditional content moderation models struggle with this. LLMs, on the other hand, can use context to generate real insights into meaning and emotional content, which makes them better suited for child safety.

Ready to get started with text message monitoring? BrightCanary offers the most comprehensive child safety app for Apple devices. Start your free trial today, or learn more about how to monitor your child’s texts on iPhone.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

Tech giants have some ‘splaining to do. First up: Google and Meta allegedly made a secret deal to target Instagram advertisements at teens on YouTube, according to the Financial Times. The project, which began in early 2023, exploited a loophole to bypass Google’s own rules prohibiting ad targeting to users under 18.

The advertising agency Spark Foundry, working for Meta's marketing data science team, was tasked with attracting more Gen Z users to Instagram, which has been losing ground to rival apps like TikTok. Evidence suggests that Google and Spark Foundry took steps to disguise the campaign’s true intent, bypassing Google’s policy by targeting a group called “unknown”—which just so happened to skew toward users under 18.

Jeff Chester, executive director of the Center for Digital Democracy, which advocates for child privacy, said, “It shows you how both companies remain untrustworthy, duplicitous, powerful platforms that require stringent regulation and oversight.”

Speaking of oversight … the Justice Department is suing TikTok and parent company ByteDance for violating children’s privacy laws. According to a press release, ByteDance and its affiliates violated the Children’s Online Privacy Protection Act (COPPA), which prohibits website operators from knowingly collecting, using, or disclosing personal information from children under the age of 13 without parental consent.

The complaint alleges that from 2019 to the present, TikTok:

These allegations come amid ongoing legal battles over a TikTok ban in the U.S. To add to the controversy, the Justice Department recently accused TikTok of gathering sensitive data about U.S. users, including views on abortion and gun control. The Justice Department warned of the potential for “covert content manipulation” by the Chinese government, suggesting that the algorithm could be designed to influence the content that users receive.

That’s a lot to take in. We often talk to parents about the balance between trust and monitoring. We can trust our kids, but we can’t always trust Big Tech companies to protect them or prioritize their well-being.

Taking an active role in your child’s digital life is about more than just supervising their online activity — it also involves considering how these companies use children's data and how they might influence what your child consumes.

If your child uses social media or YouTube, it's a good idea to periodically check their feeds together. A child safety app like BrightCanary can help make this easier, but nothing beats having open conversations with your child about what they share and what they see.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Unfortunately, the popularity of parental control apps has attracted scammers who want to swindle and frustrate people. Here’s how to identify and avoid parental control scams on iPhone and Android, plus tips to select a reputable app that does what it claims.

Did you know that your kid could be using private browsing to hide their online activity from you? Despite this workaround, parents still have options for monitoring their child online. Here’s what you should know and how to talk to your kid about incognito mode.

Tech giants don’t have our children’s best interests at heart. Privacy is important, but so is staying informed and keeping our kids safe — parents need to understand what their children are consuming, both in their algorithms and through ads. If you’re worried about the privacy conversation, here are some conversation starters:

🤖 Roblox recently released new resources to educate users about generative AI (think: ChatGPT, DALL-E, and Roblox’s own GenAI). Here’s the guide for families and one made for teens.

👑 Meghan Markle and Prince Harry have entered the child safety chat: The Parents’ Network, a new initiative from the Duke and Duchess of Sussex, is intended to assist families of children lost due to social media harm.

👻 Snapchat rolled out new safety features, including expanded in-app warnings, enhanced friending protections, and simplified location sharing. (We’re still not fans of Snapchat for younger kids, but if your teen uses Snap, it’s worth checking out the app’s parental controls.)

😔 Watching just eight minutes of TikTok focused on dieting, weight loss, and exercise content can harm body image in young women, according to a new study.