I’ll never forget when my 10-year-old niece sent her first text. It was a simple “Hi,” but it also marked the start of a new chapter for her — and a whole new set of safety concerns for her parents. How would they prevent her from texting strangers? What if someone bullied her in a group chat?

This is why a reliable app for parents to monitor text messages is essential. While Apple offers strong parental controls, they don’t let parents see their child’s actual text messages. Most monitoring apps don’t work well on iPhone, but BrightCanary was built for Apple devices — so it actually delivers.

Here’s how BrightCanary’s monitoring plans work, plus how to set it up and keep your child safe.

When parents think about online safety, they often forget about the risks associated with text messaging. Texting seems like a private form of one-to-one communication, but it really isn’t.

Texts can expose kids to risks:

Plus, anything your child sends in a text thread can be screenshotted, saved, and shared with others.

Text message monitoring is like asking your child about their school friends — who they’re talking to, what they discuss, and whether anyone makes them feel uncomfortable. Supervising texts helps parents stay involved and guide their kids through these interactions, especially as their social circle expands.

You can monitor texts in a few different ways, like spot-checking your child’s phone, scheduling weekly check-ins, or using a third-party monitoring app like BrightCanary. Combining methods generally works best because communication is key at this stage of your child’s digital literacy education. You want to give them the space to explore different social dynamics in healthy ways while staying on top of anything potentially concerning.

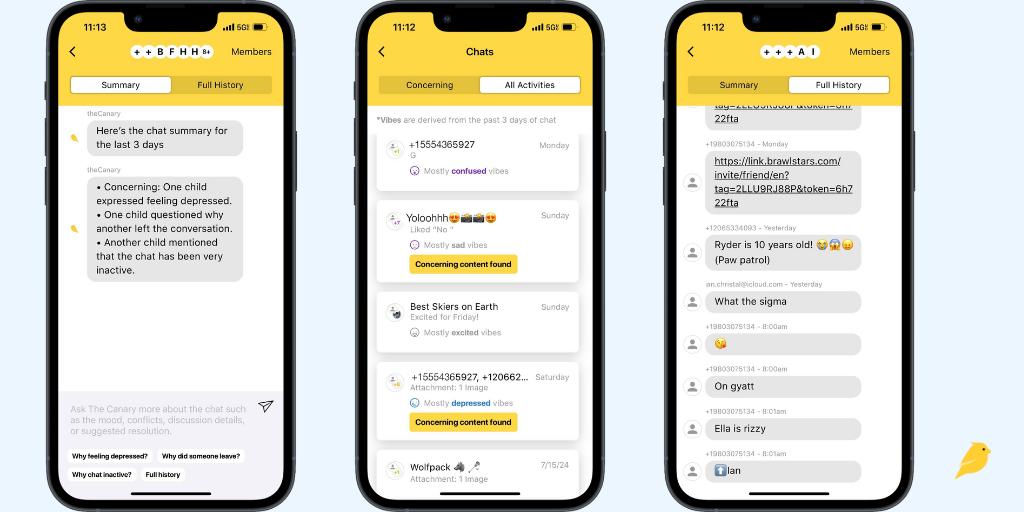

BrightCanary uses advanced AI technology designed for parents. The app’s AI is like a hall monitor, keeping track of what your child types across their favorite apps — including texts, searches, and social media — and flagging anything concerning. You have two monitoring options:

Protection plan

Text Message Plus

Everything in Protection, plus:

With either plan, BrightCanary helps you stay connected and informed — without needing to check every message manually.

To get started, download BrightCanary on your iPhone and set up your child’s profile. You’ll need your child’s device on hand to complete setup.

For Protection plan users, the BrightCanary Keyboard is the key to monitoring your child’s text activity across all the apps they use — including iMessage, Snapchat, TikTok, and more.

On your child’s iPhone or iPad:

Once the keyboard is installed, you’ll begin receiving real-time transcripts and AI-powered insights on what your child types.

To access both sides of your child’s iMessage and SMS conversations — including group chats, deleted texts, and shared images or videos — upgrade to Text Message Plus. This plan requires your child’s Apple ID login and a one-time setup using two-factor authentication.

What you’ll need:

Step-by-step setup:

It may take several hours to begin receiving texts while your child’s messages are processed.

Pro tip: If your child doesn’t have an Apple ID, you can create one using Apple Family Sharing. Apple has a handy guide on how to create an account for your child.

AI chatbots offer an anonymous, judgment-free way to ask questions and get more information about important topics. The BrightCanary app’s AI, Ask the Canary, is seamlessly integrated into the monitoring experience.

For example, you might see that your child is using the slang “sigma” in their texts, but you’re not sure what it means, so you ask Ask the Canary for more details. All of that can happen in just a few taps in the BrightCanary app.

Ask the Canary is also helpful for general questions about digital parenting. You can access the chatbot in the app and ask your toughest digital parenting questions, like how to handle your child’s first bully or tips on talking to your child about online safety.

It’s normal for parents to feel a little worried about how their child will respond to text message monitoring. You might feel like you trust your child, but you also want to make sure they’re staying safe. It’s important to stay involved for the same reason that you wouldn’t just drop your child off in the middle of a crowded city without any guidance — you want to guide and protect them, while also helping them learn how to navigate any challenges that arise.

That’s why we recommend approaching your child with their safety in mind. Explain how BrightCanary works and why you want to use it. If you’ve already given them a device and they’ve been texting independently without any restrictions, you can always go back and add rules. Some of our parents make BrightCanary a condition for device use — if the child wants their own phone, they also need to agree to parental monitoring.

Set your rules, explain how you’ll work together on this, and put the rules in writing in a digital device contract.

The best app for parents to monitor text messages gives you flexibility — the ability to give your child their independence by only looking at concerning content, and the ability to look at more detailed conversations if the need pops up. After all, there’s no one way to parent in the digital age, and every family has different needs.

BrightCanary gives parents a comprehensive solution for iPhone text message monitoring, and it happens all on your phone. Download BrightCanary on the App Store today and start your free trial.

With its eye-catching cover and viral popularity on BookTok, it’s no surprise that A Court of Thorns and Roses (ACOTAR) has caught your child’s attention. Written by Sarah J. Maas, this romantic fantasy (romantasy) novel follows 19-year-old Feyre, a human who is pulled into the magical faerie world. But is A Court of Thorns and Roses for kids? Here’s what parents need to know before letting their child read ACOTAR.

Is ACOTAR for kids? Not exactly. The book is rated 16+ due to gore, violence, and mature romance.

This novel belongs to the romantic fantasy genre (also known as “romantasy”), and it leans heavily into both: the story’s world is filled with darker elements, such as torture and complex issues surrounding consent.

ACOTAR is sometimes shelved in the Young Adult section, but it’s better suited for older teens and adults. Additionally, the series becomes more explicit as it progresses. If the first book is too intense for your child, the rest of the series will be, too.

Parents who are concerned about language should know that ACOTAR contains:

There are also multiple mentions of Feyre’s “watery bowels,” which isn’t necessarily crude, but it happens often enough that it raises questions about her gut health.

Yes. ACOTAR is well-known for its “spicy” scenes, a term used to describe books with sexual content. Spice is denoted on social media with the hot pepper emoji: 🌶️

Parents should be aware that ACOTAR contains mature romance and explicit sexual themes not typically found in traditional YA books.

Heads up: If you’re worried about the content your child searches for online, monitor their activity with BrightCanary.

Yes. Violence is a major element of the ACOTAR series. Later books deal with the brutality of war, death, and serious injury.

Violence in various forms is integral to ACOTAR’s plot and atmosphere, but it may not be appropriate for younger teens.

If your child wants to read A Court of Thorns and Roses and you feel they can handle its mature content, consider:

It's also worthwhile to know what the A Court of Thorns and Roses series is about. ACOTAR follows Feyre's journey through the fae world, but it also deals with war, deceit, and trauma.

The first book focuses largely on Feyre and Tamlin's love story and battle against Amarantha's influence, while the second and third books pit Feyre and her found family against the invading forces that want to seize control of the fae land, Prythian. The fourth book focuses on Feyre's sister, Nesta, and her journey on a path of healing, but it's arguably one of the most explicit books in the series so far.

If your child likes fantasy books, romance, and stories about female protagonists who learn how to battle against all odds, they might enjoy ACOTAR — but you'll need to weigh that against the series' adult content. If you'd rather keep things more age-appropriate for younger readers, we recommend checking out these popular YA selections:

So, is A Court of Thorns and Roses for kids? Not really. While it features strong themes of self-discovery, perseverance, and personal growth, the novel also includes graphic violence, explicit sexual content, and mature themes that make it better suited for older teens and adults.

There’s plenty of fan-made content around A Court of Thorns and Roses, so if your child shows any interest in this series, they’ll likely search for related material online or talk about it with their friends. If you’re concerned about explicit and violent content, a child safety app like BrightCanary can help you monitor your child’s digital activity — so you can talk about any concerning topics together.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

😬 Instagram accidentally recommends a flood of graphic Reels: Meta, Instagram’s parent company, recently apologized for an “error” after Instagram users reported seeing back-to-back disturbing content on their feeds. The issue impacted Instagram users worldwide, who saw Reels showing gore, violence, and killings. The videos were marked with the “sensitive content” label, but were still recommended back-to-back. Meta recently changed its content moderation policies to be more lax — but an error at this scale is concerning, especially if it increased the likelihood of kids encountering inappropriate material. If your child uses Instagram, it’s worth checking in:

📲 Apple will let parents share kids’ ages to limit app access: Apple recently announced several new child safety initiatives, including letting parents share their kids’ age ranges with apps, improving the App Store’s age ratings system, and making it easier for parents to set up Child Accounts. The features will roll out later this year. Notably, parents will be able to share their child’s age range (not their birthdate), allowing app developers to provide age-appropriate content. This also impacts the App Store: in addition to more age thresholds (Age 4 plus, 9 plus, 13 plus, 16 plus, and 18 plus), product pages will be updated to include details for parents, like whether an app features user-generated content, ads, or parental controls. As for Child Accounts, one of the biggest changes is that parents can now update their child’s age after the account is created. Curious about Child Accounts and why your child might need their own Apple ID? Check out our guide for more details.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Your child says they feel weird because they stumbled upon a really graphic video on Instagram. They didn’t mean to see it, but it just popped up on their feed. How do you handle the convo? Here are a few places to start:

😰 Is social media causing your teen’s anxiety? Here are seven steps you can take to help your teen detox from their phone, via Psychology Today.

👋 Are you following us on Instagram? Every week, we share digital parenting tips and advice you won’t want to miss.

👀 Did you know? BrightCanary comprehensively monitors text messages on Apple devices without requiring any extra software on your child’s phone. Get started for free today.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

📢 Advocates call for child online safety legislation: Last week’s Senate Judiciary Committee hearing on children’s online safety renewed important discussions about how tech companies have failed to protect young users. Hearings such as these are meant to help committee members gather information that can inform future policy discussions. Experts and advocates emphasized the need for a fundamental shift in how parental controls are designed and implemented, as opposed to outright bans. Stephen Balkam, founder and CEO of the Family Online Safety Institute, argued that parental controls should be “easy to find and easy to use” while also being “standardized, interoperable, and unified across apps, devices, and brands.”

Currently, parents have to juggle different settings across multiple platforms, making it difficult to manage their children’s online safety effectively. (And that all depends on whether they can find those parental controls in the first place.) In recent years, lawmakers have pushed for stronger social media regulation — but efforts like the Kids Online Safety Act have consistently come up short. Meanwhile, families are left navigating digital spaces on their own. Parents, stay informed and monitor the apps and websites your child consistently uses. Child safety apps like BrightCanary are designed to help you supervise your child’s online activity, without the headache. Learn how to start monitoring social media today.

⚠️ Parents alarmed by the return of a deadly social media challenge: Nnamdi Ohaeri, Jr., known by family and friends as “Deuce,” was only 13 when his parents found him unresponsive in his room earlier this month. Deuce’s parents suspect the Southern California teen took part in a social media challenge that involved kids deliberately making themselves pass out. Although Deuce didn’t have social media on his phone and his device was secured with strict parental controls, his parents believe he learned about the challenge from other students at school.

Similar challenges have circulated on social media in recent years, including TikTok’s “Blackout Challenge.” Deuce’s tragic story underscores an unexpected concern for parents: negative influences from a child’s peer group and exposure to harmful content shared by classmates.

The family hopes that sharing Deuce’s story will serve as a warning to other parents to stay alert to what their children might encounter both on social media and through friends.

Ohaeri, Sr. said he’s always been “mindful of influences and talking about, ‘Don’t do drugs and make good decisions,’” to his children. “But we don’t talk about not following social media trends or playing social media games and maybe we need to,” he added. Parents, here’s a rundown of dangerous social media challenges and tips on how to discuss them with your children — even if they don’t have social media on their own device.

Let’s talk about social media challenges. They’re appealing to kids because they can increase their social clout. Some are harmless, but others are dangerous — and kids don’t always think ahead. Here’s how to start a conversation with your kid about safety and the challenges they see online.

🧠 What is “brain rot” content, and how is it actually altering our children’s brains? Find out why it’s damaging and how to help your child watch less of it.

🫠 Quitting social media can make us feel less stressed … but it can also make us feel lonelier. You can mitigate those effects (for yourself and your family) by boosting offline social ties, experimenting with social media detoxes, and finding other ways to stay current. (Like following newsletters!)

🫣 What is incognito mode, and what should you do if your child is browsing on private browsers? We break down what parents should know.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

🍎 Teens spend over 1 hour on phones during school: Your teen is likely distracted in the classroom, according to a new study published in JAMA Pediatrics. Researchers found that half of teens (ages 13–18) use their smartphones for at least 66 minutes during school hours, and 25% logged in for more than two hours. While some teens use their phones for research or schoolwork, the majority of students used social media and messaging apps. (Gotta maintain that Snapstreak, after all.) This isn’t ideal for a bunch of reasons ranging from lost learning to missed opportunities to socialize with peers. Some schools are implementing phone bans during class hours, but if yours hasn’t, here are a few options: use parental controls to limit screen time and notifications during school hours, and talk to your child about why it’s important to limit their phone use at school. If they struggle with focus or forget assignments, keeping their phone off at school is an easy first step.

⚖️ Kids Off Social Media Act advances out of committee: There’s a new child safety bill on the block. The Kids Off Social Media Act (KOSMA) — a bipartisan bill that would ban kids under 13 from social media — was approved by the Senate Commerce Committee, setting it up for consideration by the full Senate. The bill builds on existing platform policies, as most social media companies already set their minimum age at 13. If passed, the bill would require social media platforms to enforce age verification and mandate that federally funded schools block access to social media on school networks and devices. The bill is gaining traction at a time when more people are becoming aware of social media’s negative effects on adolescents; a recent study by Sapien Labs links smartphone usage to increased aggression, hallucinations, and detachment from reality among teens, and 13-year-olds are experiencing more severe mental health issues compared to 17-year-olds — possibly because they received their phones at younger ages.

🙅 Most teens don’t trust AI or Big Tech: Adolescents feel sus about generative AI like ChatGPT and DeepSeek, according to a new research brief from Common Sense Media. Over a third of teens say they’ve been misled by fake content online, including AI-generated content (aka deepfakes). Over half (53%) of teens don’t think major tech companies will make ethical and responsible design decisions, either. They’re also aware that Big Tech tends to prioritize profits over safety: a majority (64%) don’t trust companies to care about their mental health and well-being, and 62% don’t think companies will protect their safety if it hurts profits. With the rise of misinformation and AI-generated content, now is a good time to check in with your teen about how to spot deepfakes and verify online information before they share it.

Back in high school, you probably learned the importance of citing reliable sources and distinguishing credible sources from unreliable ones. Today’s kids face the same challenge, but in a digital world filled with AI-generated deepfakes and misinformation. Knowing how to evaluate online sources is an essential skill. Here are some conversation starters to help your child think critically about what they see online.

💕 Valentine’s Day is this Friday! If you’re looking for family-friendly movies to watch with your teen or tween, check out this roundup. We’ll take any excuse to rewatch Say Anything.

📱 Sharing content about your kids online is tempting, but “sharenting” has its downsides, too. We’re sitting with the lessons from this article on Psychology Today.

⏳ Curious about whatever’s happening with TikTok? The ban has been delayed for at least 75 days, but the app still needs an American buyer. YouTube personality MrBeast, the CEO of Roblox, and Microsoft are just some of the names that are eyeing a TikTok bid to keep it in the US.

🧐 In honor of Safer Internet Day, UNICEF debunks four myths about children’s online safety that are worth a read.

Delulu? Skibidi? Kids today are speaking a language all their own. These words, phrases, and acronyms might pop up in text messages and everyday conversations, but what do they even mean? Here’s your field guide to common teen and tween phrases, from Gen Alpha terms to Gen Z sayings.

Ate: Praise or admiration for someone’s actions, choices, or performance. For example, “she ate” in reference to a friend’s outfit means that she looks great.

Bussin: Something extremely good or excellent, such as food.

Cooked: A state of being in danger or doomed. This term is often used facetiously. For example, if your teen is anxious about the consequences of not doing their chores, they might say, “I didn’t put the dishes away like Mom asked. I’m cooked.”

Delulu: Short for “delusional.” It describes someone with unrealistic beliefs or optimistic expectations.

GOAT: This stands for the Greatest Of All Time. If someone describes you as the GOAT, it’s a compliment.

High key: Something is intense or over-the-top. High key (also styled “highkey”) can also mean “really” or “very much,” as in “She highkey wants you to ask her to the dance.”

Igh: Synonymous with “alright,” “I guess,” or “fine.”

It’s giving: Used to describe when something is giving a certain feeling or vibe. You might describe someone’s outfit as, “It’s giving Billie Eilish.”

Kms/kys: Acronyms that stand for “kill myself” and “kill yourself.” While it may be intended as a spot of dark humor, if you see kys in your child’s texts, treat it seriously.

Left no crumbs: Someone did something extremely well or perfectly. This phrase is often paired with “ate.” For example, the sentence “She ate and left no crumbs” might refer to someone’s perfect performance in a play.

Low key: Restrained, chill, or modest. Low key (or “lowkey”) is the opposite of high key. If someone is going to a low-key party, it’ll be a chill hangout.

Menty b: Short for “mental breakdown.” Menty b is meant to be a humorous way to describe someone’s feelings during periods of high stress.

Mew/mewing: The practice of placing your tongue on the roof of your mouth to improve jawline aesthetics. “Mewing” is also a Gen Alpha trend in which kids put a finger to their lips as if shushing someone, then run that finger along their jawline. A tween might mew in response to a question, signaling that they can’t answer because they’re working on their jawline. (This one is a little convoluted.)

Ngl: This stands for “not gonna lie.” Someone might use this if they’re going to share their honest opinion about a topic.

Ohio: Used to describe someone as weird or cringey.

Ong: Short for “on God.” It expresses strong belief, intense emotion, or honesty. For example, if someone is concerned about nearly missing their final, they might text, “I slept through my alarm ong.”

Skibidi: This term can mean cool, bad, or dumb, depending on what it’s paired with. “Skibidi Ohio” means someone is really weird, while “skibidi rizz” means they’re good at flirting. The term comes from a YouTube series called Skibidi Toilet, in which toilets with human heads battle humanoids with electronics as heads. What a world.

Sigma: Means good, cool, or the best at something. Conversely, “what the sigma” doesn’t really mean anything — it’s another way of saying “what the heck.”

Smfh: Short for “shaking my f**king head.” It’s used to refer to something disappointing or upsetting.

Smth: An abbreviation for “something.”

Stan: To enthusiastically support something or someone.

Tea: Gossip or secrets. The phrase “spill the tea” means that someone wants to hear the latest juicy information.

Mid: Something is average, low-quality, or middle-of-the-road.

If you feel like you’re having your own menty b after trying to understand Gen Z sayings, we’re lowkey right there with you. But you’re not cooked, parents. Kids have glommed onto different words, phrases, and even emojis as part of their cultural consciousness for generations (see: on fleek, basic, cool beans, and feels). Staying informed and trying to translate your child’s messages are part of the experience of being a parent.

For extra help monitoring what matters — your child’s safety — there’s BrightCanary. The app uses advanced AI technology to monitor your child’s text messages, social media, and searches and flags concerning content, including explicit material, harassment, and drug references. Download on the App Store today to get started for free.

If you’re a parent of a tween or teen, you’re no stranger to unusual acronyms and slang. But while some terms are harmless, others are cause for concern. “KYS” is one example — the term is a red flag if your child sends or receives it. But what does KYS mean, and how should you talk to your child about it?

“KYS” stands for “kill yourself.” The term is used to make fun of someone after they do something embarrassing, or it can be used as a form of harassment.

The meaning largely depends on context, but the effect can be hurtful either way. For example, if your teen posts a video of them playing the guitar, a stranger might comment KYS to taunt them. In other instances, a bully might repeatedly text KYS to their victim with the intent to cause them mental or emotional harm.

If you or someone you know is thinking about attempting suicide, please call or text 988 to reach the 988 Suicide & Crisis Lifeline (formerly the National Suicide Prevention Lifeline, 1-800-273-8255). It is toll-free and available 24/7.

KYS is a form of internet slang that has been around since the early 2000s, when it was used in places like message boards and forums. Today, kids communicate through social media and text messages, so acronyms like KYS have entered texts and inboxes.

KYS appears in places young adult males tend to frequent, like Reddit, Discord, and Steam. But the term can be used by anyone; it can be found on social media platforms such as Instagram and TikTok, as well as texts sent by cyberbullies.

Even if KYS is sent as a joke, it makes light of suicide and should be treated seriously. If you’re monitoring your child’s text messages and see this acronym in their threads, here’s what to do:

One of the more difficult things to communicate to kids is that even their jokes can have consequences. If they know what KYS means, they should also know that joking about suicide isn’t cool. And if you see the term pop up in their messages, take a moment to step in and have a conversation about it. Sure, it might be nothing — or it might not. To take a proactive approach to monitoring your child’s online activity and messages, start with BrightCanary. Download on the App Store today and start your free trial.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

🤖 Your teen has probably asked AI to solve for X: A new Pew Research Center poll asked US teens ages 13–17 whether they’ve used ChatGPT for schoolwork. Twenty-six percent said they had, double the number from two years ago. Teens in 11th and 12th grade were more likely than seventh and eighth graders to use AI as their study buddy (31% vs. 20%).

While some applications of AI can be helpful, like outlining a paper or identifying typos, it gets problematic when kids are using the technology to do their homework for them. Twenty-nine percent said it’s acceptable to use ChatGPT for math problems, even though a recent study found that the AI can only answer questions slightly more accurately than a person guessing. And, of course, when the robots write your papers for you, you don’t learn how to effectively write a compelling argument. On our blog, we covered tips to manage the potential downsides of ChatGPT and how to talk to your kiddo about it.

📲 Most kids ages 11–15 have a social media account: According to an analysis of a national sample of early adolescents in the US, a majority (65.9%) of kids have a social media account — even though social platforms say their minimum age is 13. In fact, under-13 social media users had an average of 3.38 social media accounts (mostly TikTok). Notably, just 6.3% of participants said they had a secret social media account hidden from their parents. We listen and we don’t judge, but social media isn’t great for younger kids — it can expose them to addictive algorithms, problematic content, and online harassment, among other concerns. If your child has a social profile, we recommend monitoring. Here’s how to do it.

😠 Social media is making us grumpier: A study published earlier this month investigated the relationship between social media use and irritability (aka feeling grumpy or more bothered by things and people than usual). Frequent use of social media was associated with significantly higher levels of irritability, especially for people who posted often. The findings were based on adults, but it’s worth considering how frequent social media use can impact your already-moody teens and tweens. Our advice: help your child replace constant social media use with better, more constructive ways to spend their leisure time, ideally away from screens. Save these tips to help your child make stronger offline friendships.

Believe it or not, we’ve reached the end of January. In terms of our digital lives, the end of the month is a great time to reflect on what went right, what didn’t, and where we can grow in the coming month. Here are some thought-starters to bring to your next roundtable with your child.

Beta testing? Cuffing? Teen dating slang is its own language. If your teen is starting to show interest in dating and relationships, here’s what all those terms (and weird emojis) mean.

Roughly 55% of kids ages 13 to 17 use Snapchat, and about half say they use the platform daily. While Snapchat offers fun features like face filters and easy ways to connect with friends, there are also hidden risks every parent should know about. Here are 10 bad things about Snapchat and how to navigate them for your child’s safety.

😊 Did you know that there’s a science behind making yourself happier? Some steps include valuing time over material possessions, expressing gratitude, and, yes, giving up social media for extended periods of time. Check out the full writeup via Parenting Translator.

⚖️ “Social media platforms are not neutral bystanders; they actively design systems that promote engagement at any cost, even if it means exposing children to harmful content. We urge Congress to prioritize this legislation — it’s a matter of life and death.” Read Laura Berman and Samuel Chapman’s op-ed about why we need social media regulation.

💻 In today’s day and age, how do you teach kids to be “good at the Internet”? Big fans of this Romper essay by Rebecca Ackermann.

Snapchat is one of the most popular apps among teens. Roughly 55% of kids ages 13 to 17 use Snapchat, and about half say they use the platform daily. While Snapchat offers fun features like face filters and easy ways to connect with friends, there are also hidden risks every parent should know about. Here are 10 bad things about Snapchat and how to navigate them for your child’s safety.

Snapchat’s disappearing messages are designed to vanish after they’ve been viewed or have expired (after 24 hours). The problem is that vanishing messages can hide all sorts of concerning content, ranging from explicit messages to online harassment. And because they disappear from your child’s device, they’re difficult to track down and use as evidence of wrongdoing.

Disappearing messages can also encourage your child (and their friends) to engage in risky behavior, like sending inappropriate pictures. But just because something seems private doesn’t mean it is. Screenshots or third-party apps can still save Snaps without the sender’s knowledge or permission.

Did you know? BrightCanary monitors what your child types in all the apps they use, including Snapchat messages.

The Snapchat Snap Map (say that three times fast) allows people to see a user’s real-time location. This feature is disabled for teen accounts by default, but if it’s enabled, friends can use it to track your teen’s whereabouts.

Location sharing might be helpful if you’re a parent trying to track down your teen to pick them up after an event, but it’s concerning if your teen accepts friend requests from people they don’t actually know in real life. Even among people they do know, location sharing can expose kids to stalking risks and unintended privacy breaches.

For example, if your teen wants to hang out with one friend but not another, the Snap Map might expose their location and lead to some difficult conversations among their friend group.

Similar to TikTok and Instagram, Snapchat also has a curated collection of short video content from various publishers, creators, and news sources called “Discover.” Users can also view “Stories” on different topics. These features are personalized based on your interests and viewing habits, but they can also expose kids to adult content, including sexual or violent material.

On Reddit, parents have complained about the explicit material shown on Snapchat’s Discover feed. “I just don’t think a company should be running hog wild with sexual imagery and highly politicized or controversial articles/voices when they have minors that are on the app,” one user wrote.

While parents can report and block certain types of content from appearing, there isn’t a way to reliably set content filters around Snapchat’s Discover or Stories features.

Anonymity can encourage people to behave in ways they normally wouldn’t in real life — including harassing others through group chats and disappearing messages on Snapchat. The platform’s anonymous nature can expose your child to cyberbullying on social media, especially if they accept friend requests from people they don’t know.

Snapchat’s most recent transparency report underscores the scale of the problem. In 2024, the platform reported 6.5 million instances of harassment and bullying. Snap took enforcement action on just 36.5% of those, which means that a majority of reports did not result in enforcement.

Snapstreaks are one of the ways Snapchat gamifies the user experience. A Snapstreak refers to the number of consecutive days two users send each other Snaps (pictures or videos). The streak expires unless both users send a Snap within a 24-hour window.

They might sound fun, but Snapstreaks can also lead to obsessive behavior and increased screen time, especially if your teen has streaks running with more than one friend. Maintaining a streak gives you social credibility, and a teen’s personality may even be influenced by the number of streaks they have going.

As if teens need more peer pressure in their lives, right?

The fear of missing out (FOMO) refers to a feeling of anxiety about being excluded from other friend groups or missing out on something more fun happening elsewhere. Remember, social media is a highlight reel — if your teen is constantly seeing their friends posting about going to exciting places, hanging out with people, and buying certain items, they might feel like their own life is boring or less-than in comparison.

FOMO isn’t unique to Snapchat, but the platform’s culture rewards people who are chronically online. That visibility can give your teen more insight into what their peers are doing around the clock, which may negatively impact their own sense of self-worth — especially if that’s all they consume online.

Users can easily receive friend requests or messages from unknown people, increasing the risk of dangers like grooming, harassment, and access to drugs.

In the past year, Snapchat has made efforts to improve teen safety by preventing teens from interacting with strangers. New teen safeguards have made it more difficult for strangers to find teens by not allowing them to show up in search results unless they have several mutual friends or are existing phone contacts.

However, those changes aren’t foolproof — it’s still possible for people to connect with strangers on Snapchat, especially if your child fibbed about their age when they signed up for their account.

In 2022, the Drug Enforcement Administration named Snapchat as one of the platforms that drug dealers use to peddle illicit substances, which can be laced with deadly amounts of fentanyl. Across the country, the families of victims are suing Snapchat and campaigning for stricter regulations.

Snapchat has historically been used for illegal activities, and the platform is struggling to keep up with the scale of the problem. In 2024, the platform reported approximately 452,000 instances of drug content and accounts, but Snap took enforcement action on just 4.1% of the total reports.

Snapchat’s gamified features, like Snap Scores and Snapstreaks, are designed to maximize engagement on the platform. This isn’t unique among social platforms, but it’s especially problematic when the majority of users on Snapchat are between the ages of 15 and 25, an age group that is developmentally prone to impulsive behaviors.

Without appropriate boundaries and screen time limits, it’s relatively easy for young people to use Snapchat excessively. And that’s already a trend: according to Pew Research Center, 13% of teens use Snapchat almost constantly, compared to 12% on Instagram and 16% on TikTok.

Snapchat recently improved its parental control settings, dubbed Family Center. Now, parents can see their child’s friend list and who they’ve contacted most recently, and they can more easily report suspicious behavior. However, Snapchat comes up short in a few key aspects: parents aren’t able to view what their teens are messaging, and there are no content filters to prevent kids from accessing inappropriate material.

Not every parent and child will need to have message monitoring. But parents should have the option to do so if they need it.

Some of the concerns with Snapchat, like location sharing and stranger danger, are also risks with other social media apps. But Snapchat’s vanishing message feature and its comparative lack of parental controls and content filters are particularly concerning. So, is Snapchat safe for kids? It depends on how it’s used and how closely you’re able to supervise.

We recommend having a conversation with your child about the risks that are inherent with Snapchat. There’s nothing wrong with having them hold off on getting Snapchat. If you do decide to let them Snap, walk through their privacy settings together, set up Snapchat Family Center, and reiterate your expectations — for example, they’re only allowed to talk to a limited number of contacts, and they have to consent to periodic phone checks during the week.

If you set device rules, we recommend putting them down in writing with a digital device contract.

Snapchat is a popular app among teens, but it’s not really designed with the best interest of minors in mind. It’s important for parents to stay involved if they allow their kids to use Snapchat. Monitor their activity, set boundaries, and use parental control tools.

Snapchat has risks, including privacy concerns and exposure to harmful content. Parents should actively monitor their child’s activity if they allow Snapchat.

Set up Snapchat Family Center, use the strictest privacy settings, turn off Snap Map, and encourage your child to only accept friend requests from people they know in real life. If anyone makes them feel uncomfortable online, talk to them about how to handle it.

Instagram is a popular alternative with features similar to Snapchat’s and stronger parental controls. Other messaging app alternatives include Messenger Kids and iMessage with BrightCanary monitoring.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

😅 Meta is getting rid of fact checkers. What that means for parents and kids: Meta, the parent company of Facebook and Instagram, recently announced that it is getting rid of its third-party fact-checking program. Meta CEO Mark Zuckerberg acknowledged that, as a result, the company will catch less “bad stuff” posted on its platforms — which is a red flag, considering that roughly 60% of kids in the US use Instagram alone. The company is switching to a “Community Notes” model, similar to X. However, the new policy means your child is more likely to see potentially incendiary content, such as hate speech and misinformation, especially if they like or share a post on their feed. If your child uses Insta, here’s what we recommend:

🔒 New social media and children’s device laws officially kick in: Although the Kids Online Safety Act fell flat last year, several major social media bills have taken effect at the state level. In Florida, children under 14 are no longer allowed to use social media, and minors aged 14 and 15 can only use it with parental consent. In Utah, SB 104 prevents children from accessing obscene material via Internet browsers or search engines. And, notably, most of the South can no longer access pornography websites due to pushback against age verification laws (which the Supreme Court will weigh in on this week).

⏳ Let’s talk about the impending TikTok ban: TikTok is on the clock. No, that’s not a Kesha lyric. The popular social media platform will shut down in the U.S. by Sunday, Jan. 19 if the ban is upheld. As a recap, TikTok was put on the chopping block last year due to national security concerns. TikTok’s parent company, ByteDance, has until the 19th to sell the app’s U.S. operations to an American owner or shut down entirely. At that point, TikTok will be removed from App Stores and won’t be available for new downloads. No, your phone won’t self-destruct: the app will likely still work on your phone for a little while, even after the 19th.

The reasons for the ban are controversial; peer-reviewed research suggests that TikTok is being used to shape the West’s public opinion of China. At the same time, TikTok’s many faults can also be applied to other popular social media platforms (have you seen Meta’s terms of service?). Incoming President Trump has signaled that he plans to prevent a full TikTok ban once he takes office next week, so this is still very much a developing story. We’ll keep you posted.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Did you know that the BrightCanary app includes an AI chatbot designed specifically for parents? It’s called Ask the Canary, and it’s designed to answer your toughest parenting questions 24/7. One of our writers ran some situations by the AI — you can read the full writeup on our blog. And if you want to try Ask the Canary yourself, just download BrightCanary on the App Store!

Question: “I got an alert on BrightCanary that people are sending hurtful texts to my child. What are some conversation starters I can use to talk to them about it?”

Ask the Canary’s response: “I noticed some of your friends have said hurtful things in your text messages. How does that make you feel?”

Question: “My child is posting inappropriate things online. What are some conversation starters I can use to talk to them about it?”

Ask the Canary’s response: “I noticed something that worried me in your posts. What was your intention when you posted this? How do you think these posts align with the values we cherish as a family?”

Question: “I want to know more about a show my child is watching. What are some conversation starters I can use to ask them about it?”

Ask the Canary’s response: “Can you tell me about the show you’re watching? How does this show compare to other shows you like?”

Writer Rebecca will never forget when her 10-year-old niece sent her first text. It was a simple “Hi,” but that word also signaled the start of a new chapter for her — and a whole new set of safety considerations for her parents. This is where a reliable app for parents to monitor text messages is essential.

Apple’s parental controls are impressive, but they don’t give you the ability to actually see what your child is texting. Other parental monitoring apps come up short on iPhone, but BrightCanary was specifically designed for Apple devices, so it actually works. Here’s what parents and family members should know about BrightCanary text monitoring, how to set it up, and tips to get the most out of the app.

Ah, oversharing about your personal life online. We’ve all seen it, and most of us have done it a time or two as well. But when it comes to our kids, oversharing on social media can be particularly risky.

Kids may not be aware that they’re oversharing — they may simply want to talk about their experiences with their friends, without realizing that the information is public or can be easily screenshotted and shared. Here’s everything you need to know about the dangers of oversharing online and how to help your child avoid it.

🏛️ In California, a federal court upheld most of SB 976, also known as the Protecting Our Kids from Social Media Addiction Act. The bill prevents social media platforms from knowingly providing an addictive feed to minors without parental consent and takes effect on January 1, 2027. NetChoice, a powerful tech lobbying group, challenged the law on First Amendment grounds, and while the court blocked parts of the law, social media companies will still be expected to adjust their feeds for minors by 2027.

🎮 Is your child asking about the video game Marvel Rivals? The game is rated T for teens, but there’s a dearth of information about whether the game is appropriate for kids — so we wrote about it on our blog.

🤔 Want to be the most informed parent in the room? Subscribe to Parent Pixels and get this newsletter a day early!