As a parent, you want your child to surround themselves with good influences. That’s true not only for who they spend time with in real life, but also for the people and ideas they’re exposed to on social media.

If you or your child are concerned about the content appearing in their feed, one beneficial step you can take is to help them reset their social media algorithm. Here’s how to reset your child’s algorithm on TikTok, Instagram, and other platforms.

Social media algorithms are the complex computations that operate behind the scenes of every social media platform to determine what each user sees.

Everything on your child’s social media feed is likely the result of something they liked, commented on, or shared. (For a more comprehensive explanation, check out our Parent’s Guide to Social Media Algorithms.)

Social media algorithms have a snowball effect. For example, if your child “likes” a cute dog video, they’ll likely see more of that type of content. However, if they search for topics like violence, adult material, or conspiracy theories, their feed can quickly be overwhelmed with negative content.

Therefore, it’s vital that parents actively examine and reset their child’s algorithm when needed, and also teach them the skills to evaluate it for themselves.

Research clearly demonstrates the potentially negative impacts of social media on tweens and teens. How it affects your child depends a lot on what’s in their feed. And what’s in their feed has everything to do with algorithms.

Helping your child reset their algorithm is a wonderful opportunity to teach them digital literacy. Explain to them why it’s important to think critically about what they see on social media, and how what they do on the site influences the content they’re shown.

Here are some steps you can take together to clean up their feed:

Resetting all of your child’s algorithms in one fell swoop can be daunting. Instead, pick the app they use the most and tackle that first.

If your kiddo follows a lot of accounts, you might need to break this step into multiple sessions. Pause on each account they follow and have them consider these questions:

If the answer is “yes” to any of these questions, suggest they unfollow the account. If they’re hesitant (for example, if they’re worried unfollowing might cause friend problems), they can instead “hide” or “mute” the account so they don’t see those posts in their feed.

On the flip side, encourage your child to interact with accounts that make them feel good about themselves and portray positive messages. Liking, commenting, and sharing content that lifts them up will have a ripple effect on the rest of their feed.

After you’ve gone through their feed, show your child how to examine their settings. This mostly influences sponsored content, but considering the problematic history of advertisers marketing to children on social media, it’s wise to take a look.

Every social media app has slightly different options for how much control users have over their algorithm. Here's what you should know about resetting the algorithm on popular apps your child might use.

To get the best buy-in and help your child form positive long-term content consumption habits, it’s best to let them take the lead in deciding what accounts and content they want to see.

At the same time, kids shouldn't have to navigate the internet on their own. Social platforms can easily suggest content and profiles that your child isn't ready to see. A social media monitoring app, such as BrightCanary, can alert you if your child encounters something concerning.

Here are a few warning signs you should watch out for as you review your child's feed:

If you spot any of this content, it’s time for a longer conversation to assess your child’s safety. You may decide it’s appropriate to insist they unfollow a particular account. And if what you see on your child’s feed makes you concerned for their mental health or worried they may harm themselves or others, consider reaching out to a professional.

Algorithms are the force that drives everything your child sees on social media and can quickly cause their feed to be overtaken by negative content. Regularly reviewing your child’s feed with them and teaching them skills to control their algorithm will help keep their feed positive and minimize some of the negative impacts of social media.

Just by existing as a person in 2023, you’ve probably heard of social media algorithms. But what are algorithms? How do social media algorithms work? And why should parents care?

At BrightCanary, we’re all about giving parents the tools and information they need to take a proactive role in their children’s digital life. So, we’ve created this guide to help you understand what social media algorithms are, how they impact your child, and what you can do about it.

Social media algorithms are complex sets of rules and calculations used by platforms to prioritize the content that users see in their feeds. Each social network uses different algorithms. The algorithm on TikTok is different from the one on YouTube.

In short, algorithms dictate what you see when you use social media and in what order.

Back in the Wild Wild West days of social media, you would see all of the posts from everyone you were friends with or followed, presented in chronological order.

But as more users flocked to social media and the amount of content ballooned, platforms started introducing algorithms to filter through the piles of content and deliver relevant and interesting content to keep their users engaged. The goal is to get users hooked and keep them coming back for more.

Algorithms are also hugely beneficial for generating advertising revenue for platforms because they help target sponsored content.

Each platform uses its own mix of factors, but here are some examples of what influences social media algorithms:

Most social media sites heavily prioritize showing users content from people they’re connected with on the platform.

TikTok is unique because it emphasizes showing users new content based on their interests, which means you typically won’t see posts from people you follow on your TikTok feed.

With the exception of TikTok, if you interact frequently with a particular user, you’re more likely to see their content in your feed.

The algorithms on TikTok, Instagram Reels, and Instagram Explore prioritize showing you new content based on the type of posts and videos you engage with. For example, the more cute cat videos you watch, the more cute cat videos you’ll be shown.

YouTube looks at the creators you interact with, your watch history, and the type of content you view to determine suggested videos.

The more likes, shares, and comments a post gets, the more likely it is to be shown to other users. This momentum is the snowball effect that causes posts to go viral.
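For readers who like to see the idea in code, here is a toy sketch of the feedback loop described above. It is only an illustration: the scoring formula, the `rank_feed` and `engage` helpers, and the sample posts are all made up for this example, and no real platform's algorithm is this simple or works exactly this way.

```python
from collections import Counter


def rank_feed(posts, interests, top_n=3):
    """Return the top posts by a made-up score (an assumption, not a real formula):
    how much the user has engaged with the topic, times how popular the post is."""
    def score(post):
        return (1 + interests[post["topic"]]) * (1 + post["likes"])
    return sorted(posts, key=score, reverse=True)[:top_n]


def engage(interests, topic):
    """Record one like, comment, or watch: the topic's weight goes up by one."""
    interests[topic] += 1


# Hypothetical posts competing for a place in the feed.
posts = [
    {"id": "cats_1", "topic": "cats", "likes": 10},
    {"id": "cats_2", "topic": "cats", "likes": 5},
    {"id": "cats_3", "topic": "cats", "likes": 2},
    {"id": "dogs_1", "topic": "dogs", "likes": 20},
    {"id": "news_1", "topic": "news", "likes": 15},
]

interests = Counter()  # a brand-new user with no history

# Before any engagement, the most-liked posts win.
before = [p["id"] for p in rank_feed(posts, interests)]

# The user watches ten cat videos.
for _ in range(10):
    engage(interests, "cats")

# Now the feed is dominated by the topic they engaged with.
after = [p["id"] for p in rank_feed(posts, interests)]

print("before:", before)
print("after: ", after)
```

In this sketch, a new user sees a mix of the most popular posts, but after ten interactions with one topic the whole top of the feed is that topic. The same loop applies to harmful content, which is why a few curious clicks can reshape a child's feed.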

There are ways social media algorithms can benefit your child, such as creating a personalized experience and helping them discover new things related to their interests. But the drawbacks are also notable — and potentially concerning.

Since social media algorithms show users more of what they seem to like, your child's feed might quickly become overwhelmed with negative content. Clicking a post out of curiosity or naivety, such as one promoting a conspiracy theory, can inadvertently expose your child to more such content. What may begin as innocent exploration could gradually influence their beliefs.

Experts frequently cite “thinspo” (short for “thinspiration”), a social media topic that aims to promote unhealthy body goals and disordered eating habits, as another algorithmic concern.

Even though most platforms ban content encouraging eating disorders, users often bypass filters using creative hashtags and abbreviations. If your child clicks on a thinspo post, they may continue to be served content that promotes eating disorders.

Although social media algorithms are something to monitor, the good news is that parents can help minimize the negative impacts on their child.

Here are some tips:

It’s a good idea to monitor what the algorithm is showing your child so you can spot any concerning trends. Regularly sit down with them to look at their feed together.

You can also use a parental monitoring service to alert you if your child consumes alarming content. BrightCanary is an app that continuously monitors your child’s social media activity and flags any concerning content, such as photos that promote self-harm or violent videos — so you can step in and talk about it.

Keep up on concerning social media trends, such as popular conspiracy theories and internet challenges, so you can spot warning signs in your child’s feed.

Talk to your child about who they follow and how those accounts make them feel. Encourage them to think critically about the content they consume and to disengage if something makes them feel bad.

Algorithms influence what content your child sees when they use social media. Parents need to be aware of the potentially harmful impacts this can have on their child and take an active role in combating the negative effects.

Stay in the know about the latest digital parenting news and trends by subscribing to our weekly newsletter.

Fellow Millennial parents might assume Tumblr has gone by the wayside with other early-2000s social media sites like MySpace and LiveJournal. You might be surprised to learn the microblogging platform is enjoying a major resurgence, fueled by Gen Z. But is Tumblr safe for kids?

This guide discusses why kids like Tumblr, its risks, and what parents can do to help keep their child safe on the app.

Launched in 2007, Tumblr is a cross between a social media platform and a microblogging site. Users can create blogs and share them with friends and followers either on the Tumblr app or on other social media platforms.

Tumblr blogs span from fanfiction to art to memes, and everything in between.

The younger generation is flocking to Tumblr in record numbers. A whopping 50% of users are Gen Z. Here are some of the many reasons Tumblr is so popular with kids:

Like other social media platforms, Tumblr requires users in the US to be at least 13. However, age verification relies on users self-reporting, so it’s very easy to subvert.

Tumblr has zero parental controls, so it gets a big ol' F on this metric.

The App Store rates Tumblr 17+, and Common Sense Media advises it shouldn’t be used by kids under 15.

Tumblr poses significant risks for kids, including:

Here are some actions you can take to make your child’s experience on Tumblr safer:

Tumblr offers a creative space for users to gather around shared interests. However, its public accounts, lack of parental controls, and exposure to problematic content make the platform unsafe for younger teens. Kids under 15 shouldn’t be allowed to use Tumblr, and parents should take an active role in protecting their child on the app.

For parents who take online safety seriously, BrightCanary offers the most comprehensive monitoring on Apple devices. Monitor what your child sends on all the apps they use, including Tumblr, Discord, and even text messages. Download today and get started for free.

Is your teen begging to start an Instagram or Snapchat account? Introducing kids to social media is a big deal because it can expose them to the broader digital world — and all the risks associated with it.

In this article, we’ll discuss how to introduce kids to social media and tips for helping them stay safe.

There are two primary factors to consider when deciding if your child is ready for social media: age and maturity.

Aside from a handful of apps designed for younger kids, such as Kinzoo and Messenger Kids, most social media platforms require users to be at least 13 years old. However, just because your child is technically old enough doesn’t mean they’re automatically ready for Snapchat (or TikTok, Instagram, or any of the other platforms).

If your 15-year-old isn’t mature enough for social media, you shouldn’t feel pressure to let them use it. But don’t keep them in the dark just because they’re not ready yet — it’s a good idea to start educating your child on how to safely use social media before you hand them the reins.

Once you've decided it’s time to let your teen use social media, here are some tips to get them going:

Explaining the risks of social media shows your teen why it’s important to behave responsibly online. It also helps them learn to spot danger — an important ingredient for lowering their risk.

We’ve covered many of these dangers, including:

We often think of teens as inevitably drawn to risk, but studies actually reveal that teens are often more cautious than their younger peers, choosing the safer option when given the information needed to make that choice.

To equip your teen with the ability to make safe choices on social media, teach them about:

Think of these tips as starting points. You’ll want to continuously check in with your child once they start using social media on their own.

As your child matures, it may be reasonable to give them increasing leeway in when and how often they use social media (within reason). But when they’re first starting out, it’s a good idea to create more stringent boundaries to help them learn appropriate limits.

Utilize the parental controls on the social media apps your child uses, as well as any built into their device.

The American Psychological Association recommends that parents monitor social media for all kids under 15, and depending on your child’s maturity level, it may be necessary to do so for longer. Here are some ways to stay involved:

Did you know? BrightCanary is a great way to give your child independence without compromising on safety because you get alerts when there’s a red flag … without having to look at everything your child does online.

By being proactive, parents can introduce social media to their child in a way that encourages them to be responsible and stay safe. Parents should educate their child on the risks of social media, teach them tips for staying safe, and remain involved in their child’s online activity.

BrightCanary gives you real-time insights to keep your child safe online. The app uses advanced technology to monitor them on the apps and websites they use most often. Download on the App Store today and get started for free.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

😕 9 in 10 teens have been cyberbullied, study says: If your child is online, there’s a good chance they’ve dealt with harassment. Cyberbullying is defined as willful and repeated harm inflicted through electronic devices, typically through social media, gaming platforms, or chat environments. Researchers surveyed nearly 2,700 middle and high school students in the U.S. and found that cyberbullying is widespread among adolescents, and it can lead to serious psychological harm.

The most common forms of cyberbullying reported by adolescents were:

The study found that cyberbullying of any type (no matter how subtle) could contribute to trauma symptoms, such as PTSD, anxiety, and emotional distress. “What mattered most was the overall amount of cyberbullying: the more often a student was targeted, the more trauma symptoms they showed,” lead researcher Sameer Hinduja said in a news release.

Kids might struggle to talk about cyberbullying because they fear social repercussions, like getting in trouble with their friend group or having their device taken away. If you haven’t already, talk to your child about what to do if someone makes them uncomfortable online and how to deal with a bully. For more tips, check out our guides on how to deal with cyberbullying through texting and what to do if you find out your child is the bully.

🤦 Instagram Teen Accounts still show sensitive content: Meta’s Teen Accounts are meant to give added protections to teen users, including limiting the ability of strangers to contact them and filtering out sensitive content. But users report receiving recommended content that promoted eating disorders, explicit acts, and hate content, despite using Teen Accounts.

“The danger they face isn’t just bad people on the internet — it’s also the app’s recommendation algorithm, which decides what your kids see and demonstrates the frightening habit of taking them in dark directions,” writes Geoffrey A. Fowler of The Washington Post.

This is just another frustrating reminder that social media companies’ solutions aren’t foolproof and can fail in the places they’re meant to protect kids. This is also why a growing number of experts advocate for more parental oversight on their kids’ devices and regular online safety discussions. It’s still a good idea to set parental controls, but monitoring doesn’t stop there — it’s an ongoing series of conversations and check-ins.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Not all screen time is created equal. While it’s easy for kids to fall into endless scrolling mode, screens can also be tools for connection, creativity, and fun. Here are five conversation-starters to help your tween or teen think about healthier, more intentional ways to use their devices this summer:

🍿 Need ideas for family movie night? Check out this list of new kids shows and movies coming to Netflix this month.

📵 “Teachers don’t have to fight an impossible battle against tech. Students talk to each other between classes. The cafeteria has the sound of conversation. Teachers cover material faster. Cyberbullying has fallen.” Gilbert Schuerch, a veteran high school teacher in Harlem, NYC, shares what happened at his school after they banned phones for a year.

🤯 Did you know that teens use an average of 40 apps per week? That’s a lot to keep up with. We’re working on an easier way to stay informed — stay tuned for news from BrightCanary.

Much like YouTube, Netflix is a great place to find quality entertainment for kids. (Hello, Carmen Sandiego reboot!) But without the right settings, Netflix can expose your child to inappropriate content and even potential interactions with strangers.

Fortunately, Netflix parental controls give you a way to monitor your child’s viewing and reduce those risks. Here’s what you need to know about how to make Netflix safe for your child.

Even though Netflix is packed with great content, it also comes with potential risks:

Wondering how to set up Netflix parental controls? Here are some steps you can take:

It might seem easier to let your child use your Netflix profile, but adult profiles don’t have the same protections for kids. A Kids profile is ideal for kids ages 12 and under. Netflix Kids filters content, allows you to use parental controls, and disables access to Netflix Games.

Once their Kids profile is created, you can:

Netflix Kids profiles cap out at PG, so it’s appropriate for most teens to transition to a regular profile. Without parental controls, there are still steps you can take to help them view safely:

Follow these steps to set parental controls on Netflix:

For additional information on how to use Netflix parental controls, visit this guide.

Netflix is a great resource for child-friendly shows if you take appropriate steps to make your child’s viewing experience safe, such as setting up a Kids profile, using parental controls, and monitoring your child’s viewing.

BrightCanary helps parents monitor what their kids search on popular platforms, including streaming services like Netflix, as well as social media, text messages, and internet browsers. Download BrightCanary today to get started for free.

If you’ve just binged Adolescence on Netflix and are newly alarmed by the manosphere’s influence on teen boys, you’re not alone. The manosphere is a network of online groups — including incels, pick-up artists, and the Red Pill community — that promote masculinity, misogyny, and anti-feminism.

These movements are growing in popularity among adolescents, and their hateful ideologies and violent rhetoric pose a real threat to kids.

This guide breaks down what the manosphere is, the risks it poses, and how parents can talk to their teens about the dangers of online misogyny.

The manosphere is a loosely connected group of websites, social media influencers, and online communities (such as subreddits) that claim to promote men’s issues — but often do so through a lens of sexism and hate.

The manosphere includes several distinct communities:

“Incel” is a mashup of “involuntary celibate.” Men who self-identify as incels are unable to find a sexual partner, despite feeling entitled to one, and blame women for their loneliness.

Inceldom is permeated with self-pity, resentment, misogyny, racism, and sexual objectification. These communities frequently endorse violence and harassment toward women and “sexually successful” men, as well as promoting self-harm and suicide.

MGTOW (Men Going Their Own Way) advocates avoiding all romantic relationships in order to remain independent and focus on one’s own goals. The MGTOW community is steeped in the same anti-feminism and misogyny as the rest of the manosphere, including violence, hatred, and online harassment of women.

While some in the men’s rights movement (MRM) advocate for legitimate issues like custody rights or men’s mental health, many others use the MRM to promote anti-feminist and misogynistic views.

Pickup artists (PUAs) share strategies to manipulate or coerce women into sex. Although their focus on sexual success has made PUAs the object of derision from incels and MGTOW, they share much of the sexism, sexual objectification, and misogyny of these groups.

In the manosphere, “taking the red pill” means accepting that feminism has led to societal biases against men. The Red Pill community advocates for regressive gender roles.

The Red Pill community references the 1999 film The Matrix, in which taking the blue pill is choosing to remain ignorant of the “true” nature of existence, and the red pill means accepting reality, no matter how harsh or unfair.

Teenage boys are engaging with the manosphere at alarming rates. There are several paths they might take into the manosphere:

Yes. Parents should be concerned about the manosphere — especially if they have a teenage boy.

The movements involved in the manosphere spout sexism, hate, misogyny, and violent rhetoric. These groups have been accused of radicalizing boys into extreme misogyny and violence against women, and many are on the watchlists of advocacy groups working to combat hate and extremism, like the Southern Poverty Law Center and the Anti-Defamation League.

Helping your child recognize and reject the manosphere is possible. Here’s how:

Work to create an environment where your child is comfortable coming to you to discuss what they encounter online. Openly discuss the concept of gender roles, toxic versus healthy masculinity, and the dangers of misogyny and the manosphere.

Help your child learn to spot bias, false narratives, and extreme ideology. Teach them to question what they see on the internet and to engage in online spaces in a way that’s aligned with their values.

Kids don’t always recognize red flags themselves. Use a monitoring app like BrightCanary to supervise their activity and see if they engage with manosphere content.

The manosphere is a collection of online communities that promote masculinity while spreading misogyny and anti-feminist ideologies. These groups have been accused of radicalizing boys into hatred and violence against women. Parents should educate their children on the dangers of the manosphere and help them develop the skills to reject it.

BrightCanary helps parents monitor their child’s digital activity — including Google, YouTube, and social media — to catch warning signs early. Download the app and start your free trial today.

Snapchat, Instagram, and TikTok are the most popular social media apps for teens. But which is safer for kids? In this article, we break down the pros and cons of these platforms, what parents should know about online safety, and how BrightCanary helps parents stay in the loop.

| Feature | Instagram | Snapchat | TikTok |
| --- | --- | --- | --- |
| Best for | First social media app | Peer-based chat and interaction | Content discovery and entertainment |
| Parental controls | More robust than other platforms, but can be tricky to set up | With Family Center, parents can see who their teen is messaging and set privacy limits | With Family Pairing, parents can control messages, set time limits, and more |
| Messaging risks | DMs allow contact with strangers | Disappearing messages + pressure to respond | Less peer interaction, but Live chat risk |
| Content moderation | Algorithms and filters, but inappropriate content can still get through | Algorithms and filters, but inappropriate content can still get through | Algorithms, filters, and risk of exposure to harmful trends and feedback loops |
| Safety rating for kids | ⭐⭐⭐ | ⭐⭐ | ⭐⭐ |

Snapchat is an integral part of many teens’ social circles. Here’s what to consider when deciding if Snapchat is right for your child:

Instagram's emphasis on self-expression and the variety of ways users can connect with friends make the app a hit with kids. Here are the pros and cons of letting your child use Instagram:

TikTok is a social media app built around short-form content, and it’s one of the hottest apps for teens. Here are some pros and cons of letting kids use TikTok:

Snapchat, Instagram, and TikTok all have their pros and cons for kids, but Instagram stands out when it comes to safety.

Instagram’s more robust parental controls and Teen Accounts make it the best choice as a first platform for kids who want to try social media with their parent’s support.

But even though Instagram is slightly better than the others, there are still risks associated with the platform. Regardless of what social media your child uses, here’s what we recommend:

There’s no one-size-fits-all answer when choosing between Instagram, Snapchat, or TikTok for your child. But with strong privacy settings and the best parental controls, Instagram is typically the better platform for kids starting social media.

It’s vital that parents take an active role in their child’s social media activity on all platforms. To monitor your child on social media, start your free BrightCanary trial today.

Adolescence on Netflix has emerged as one of the platform’s most popular offerings of all time. It follows a 13-year-old boy, Jamie, who’s accused of murdering a classmate, and provides searing commentary on the ways toxic internet culture and unchecked screen time can impact children.

Let’s take a look at seven valuable lessons Adolescence provides on parenting in the digital age.

If Jamie’s parents had stepped in to support him when he was struggling socially online — and certainly when he started visiting hateful online forums in the “manosphere,” such as those promoting Andrew Tate — his story may well have ended very differently.

The thing about your child’s online activity is that it’s right there for you to see, but you have to be looking. It’s vital to stay involved in your child’s online activity so you can spot early red flags and step in before things escalate.

When Jamie hints to his dad that he’s being bullied, Eddie brushes it off. Similarly, his mother is worried about him spending too much time on his computer, but Eddie dismisses her concerns.

If you notice red flags in your child’s online behavior, such as evidence of cyberbullying, spending excessive amounts of time online, or messaging with someone they shouldn’t, don’t ignore it.

Act quickly to address the situation and support your child to develop healthier online habits.

Once Jamie starts viewing extreme videos on YouTube, the algorithm begins feeding him increasingly disturbing material. Educate yourself and your child on the risks of algorithms and help them periodically reset theirs by blocking, unfollowing, or pausing certain content.

Want to know what your child is thinking about? Take a peek at their internet history and you’ll get a decent idea. In Adolescence, Jamie’s early internet history paints a picture of a lonely boy who’s struggling socially and is desperate to make friends and fit in. Then, it shows him progressing down a rabbit hole of digital misogyny until he’s ultimately radicalized against women and toward violence.

It’s important to check in — not to spy, but to understand what’s going on beneath the surface.

As Jamie’s social struggles grow, so does his screen time. He starts escaping online as a way to avoid the real world. His parents notice, but ultimately chalk it up to normal teenage behavior.

However, research tells us there are consequences to excessive screen time, including aggressive behavior and even violence. It’s important to set reasonable screen time limits for your child’s age and enforce them through parental controls and monitoring.

In the show, it’s revealed that Jamie was cyberbullied by peers, including the girl he ultimately murders. While it’s important not to blame the victim, it’s also important to acknowledge the role that being bullied played in Jamie’s ultimate radicalization.

Parents should talk to their children about cyberbullying, be on the lookout for signs, and step in if they spot a problem.

A key thread of the show Adolescence is highlighting what Jamie’s parents might have done differently, including not shying away from talking to Jamie when they started to notice trouble.

We need to empower our children to navigate online spaces safely and healthily, and that includes talking with them about difficult topics.

Here are some conversation starters:

Netflix’s Adolescence offers invaluable lessons for parents, including the importance of talking to their child about cyberbullying, why parents should monitor their child’s online activity, and why they shouldn’t shy away from difficult discussions.

BrightCanary can help you monitor your child online. The app uses advanced technology to scan their internet activity and alerts you if there’s an issue. Download BrightCanary on the App Store and get started for free today.

Andrew Tate, a social media personality known for promoting misogyny and toxic masculinity, has become a surprisingly influential figure among teens, especially boys. Despite being arrested and facing serious allegations, he has been embraced by prominent podcasters and media figures within the “manosphere,” a collection of online communities that promote masculinity and anti-feminism.

So, why are kids talking about him, and what can parents do about it? This guide explains Andrew Tate’s appeal, outlines the risks, and provides age-appropriate tips for discussing his influence with your child.

Andrew Tate is a former competitive kickboxer, entrepreneur, and online influencer whose notoriety grew through his provocative and often misogynistic social media content.

He had 4.6 million Instagram followers before he was banned for violating the platform’s terms of service. He was also banned on several other platforms, including Facebook and TikTok. His account on X (formerly Twitter) was later restored after Elon Musk bought the platform.

Tate brands himself as a self-made millionaire and the “King of Toxic Masculinity.” Here are a few examples of his content:

Is your child following problematic influencers online? Here’s how to monitor their online activity so you can talk about it.

Andrew Tate and his brother Tristan were arrested in Romania on December 29, 2022, for suspected human trafficking, sexual assault, and involvement in organized crime. Recently, the Tate brothers were allowed to leave Romania after prosecutors lifted their travel restrictions. The brothers remain under investigation.

Earlier this year, Andrew Tate’s ex-girlfriend, Brianna Stern, filed a lawsuit accusing Andrew of assaulting her during their relationship.

Andrew Tate’s popularity surged when his videos started circulating on TikTok, a platform whose users skew young. Tate resonates with tween and teen boys who want to emulate his image as a self-made entrepreneur.

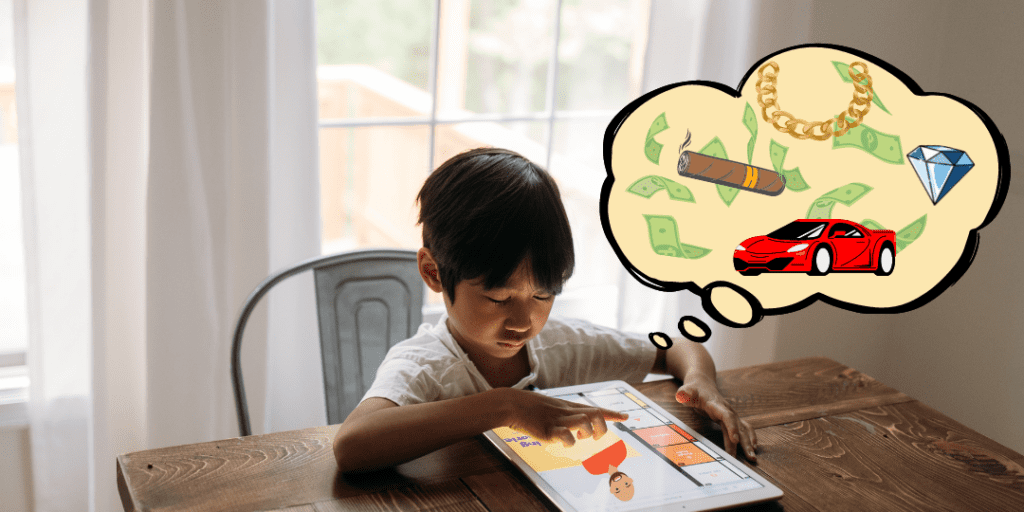

Tate frequently posts outward signals of success, often posing with a cigar in front of one of his several luxury cars. Without evidence, Tate claimed that he was the world’s first trillionaire. His brand is largely built around an image of fast living, easy money, and pliable women. Tate has said that men who read books are dull-witted.

Tate has also displayed a talent for “shock marketing,” taking controversial and deeply offensive positions in order to generate a response. As a result, many of his performances have gone viral. In one of his online courses, Tate advises students to aim for 40% “haters” and controversy.

He sells the idea that he can teach young men how to make money, attract girls, and break societal molds to achieve personal fulfillment.

Parents, caregivers, and teachers say they are seeing harmful comments, discussions, and behavior patterns becoming more and more common among boys and young men. Educators in the UK have grown increasingly concerned that Tate’s noxious brand of masculinity has infiltrated the British school system.

The popularity of the Netflix show Adolescence, which explores the effects of the manosphere and masculinity on teenage boys, has also raised concerns about Andrew Tate’s influence. In the show, the protagonist is drawn into misogynistic online communities that share sentiments similar to those of Andrew Tate’s brand.

Tate’s messages can normalize sexism, promote power imbalances in relationships, and distort healthy views of masculinity and success. Teachers and mental health professionals report a rise in boys parroting his views, leading to:

Your child’s age and maturity level will determine how deep you dive into the topic. Here are some ideas to start a conversation with them about Tate and what he represents.

It’s best to first ask your child what they know about Andrew Tate. That way, you can get a sense of what they’ve heard and where you can clear up any misinformation.

Tate has a reputation for mistreating women, so this may be a good time to remind your child to treat everyone with respect. Here are some questions to get the conversation going.

Depending on the child’s age, talking about coercive power over another person can be tricky. Always take your child’s maturity level into consideration when discussing heavy topics. Here are some things you can say:

Human trafficking is a tough topic to tackle with a young child. Here are some ways to frame it.

You can talk about sexual assault more candidly with older children. With young children, the topic is harder to discuss in an age-appropriate way, so focus on bodily autonomy, personal space, and consent.

Young Children:

Keep it simple. Talk about kindness, fairness, and treating others the way they want to be treated. Use examples from their daily life.

Tweens:

Introduce the concept of influencers and online personalities. Ask what they think makes someone trustworthy and talk about why some people say shocking things just to get attention.

Teens:

Go deeper into misogyny, media literacy, and power dynamics. Discuss what healthy relationships look like and how toxic influencers manipulate emotions to build followings.

Because social media platforms can amplify harmful content, it’s crucial to know what your child is seeing. You can:

BrightCanary helps you supervise your child’s activity across platforms like YouTube, Instagram, Google, and text messages. You’ll get updates if they encounter harmful content — including extremist messages or explicit material.

Andrew Tate’s influence on teens is part of a larger conversation about toxic masculinity, online algorithms, and youth vulnerability. Parents don’t need to panic—but they do need to be proactive.

These aren’t easy topics to broach with your child, but it’s important to start the conversation with them. That way, if they see something confusing or disturbing online, now or in the future, they’ll feel comfortable coming to you to ask the hard questions.