As a parent, you want your child to surround themselves with good influences. That’s true not only for who they spend time with in real life, but also for the people and ideas they’re exposed to on social media.

If you or your child are concerned about the content appearing in their feed, one beneficial step you can take is to help them reset their social media algorithm. Here’s how to reset your child’s algorithm on TikTok, Instagram, and other platforms.

Social media algorithms are the complex computations that operate behind the scenes of every social media platform to determine what each user sees.

Everything on your child’s social media feed is likely the result of something they liked, commented on, or shared. (For a more comprehensive explanation, check out our Parent’s Guide to Social Media Algorithms.)

Social media algorithms have a snowball effect. For example, if your child “likes” a cute dog video, they’ll likely see more of that type of content. However, if they search for topics like violence, adult material, or conspiracy theories, their feed can quickly be overwhelmed with negative content.

Therefore, it’s vital that parents actively examine and reset their child’s algorithm when needed, and also teach them the skills to evaluate it for themselves.

Research clearly demonstrates the potentially negative impacts of social media on tweens and teens. How it affects your child depends a lot on what’s in their feed. And what’s in their feed has everything to do with algorithms.

Helping your child reset their algorithm is a wonderful opportunity to teach them digital literacy. Explain why it’s important to think critically about what they see on social media, and how what they do on the site influences the content they’re shown.

Here are some steps you can take together to clean up their feed:

Resetting all of your child’s algorithms in one fell swoop can be daunting. Instead, pick the app they use the most and tackle that first.

If your kiddo follows a lot of accounts, you might need to break this step into multiple sessions. Pause on each account they follow and have them consider these questions:

If the answer is “yes” to any of these questions, suggest they unfollow the account. If they’re hesitant (for example, if they’re worried unfollowing might cause friend problems), they can instead “hide” or “mute” the account so they don’t see those posts in their feed.

On the flip side, encourage your child to interact with accounts that make them feel good about themselves and portray positive messages. Liking, commenting, and sharing content that lifts them up will have a ripple effect on the rest of their feed.

After you’ve gone through their feed, show your child how to examine their settings. This mostly influences sponsored content, but considering the problematic history of advertisers marketing to children on social media, it’s wise to take a look.

Every social media app has slightly different options for how much control users have over their algorithm. Here's what you should know about resetting the algorithm on popular apps your child might use.

To get the best buy-in and help your child form positive long-term content consumption habits, it’s best to let them take the lead in deciding what accounts and content they want to see.

At the same time, kids shouldn't have to navigate the internet on their own. Social platforms can easily suggest content and profiles that your child isn't ready to see. A social media monitoring app, such as BrightCanary, can alert you if your child encounters something concerning.

Here are a few warning signs you should watch out for as you review your child's feed:

If you spot any of this content, it’s time for a longer conversation to assess your child’s safety. You may decide it’s appropriate to insist they unfollow a particular account. And if what you see on your child’s feed makes you concerned for their mental health or worried they may harm themselves or others, consider reaching out to a professional.

Algorithms are the force that drives everything your child sees on social media and can quickly cause their feed to be overtaken by negative content. Regularly reviewing your child’s feed with them and teaching them skills to control their algorithm will help keep their feed positive and minimize some of the negative impacts of social media.

Just by existing as a person in 2023, you’ve probably heard of social media algorithms. But what are algorithms? How do social media algorithms work? And why should parents care?

At BrightCanary, we’re all about giving parents the tools and information they need to take a proactive role in their children’s digital life. So, we’ve created this guide to help you understand what social media algorithms are, how they impact your child, and what you can do about it.

Social media algorithms are complex sets of rules and calculations used by platforms to prioritize the content that users see in their feeds. Each social network uses its own algorithms, so the algorithm on TikTok is different from the one on YouTube.

In short, algorithms dictate what you see when you use social media and in what order.

Back in the Wild Wild West days of social media, you would see all of the posts from everyone you were friends with or followed, presented in chronological order.

But as more users flocked to social media and the amount of content ballooned, platforms started introducing algorithms to sift through the piles of posts and deliver relevant, interesting content that keeps users engaged. The goal is to get users hooked and keep them coming back for more.

Algorithms are also hugely beneficial for generating advertising revenue for platforms because they help target sponsored content.

Each platform uses its own mix of factors, but here are some examples of what influences social media algorithms:

Most social media sites heavily prioritize showing users content from people they’re connected with on the platform.

TikTok is unique because its main For You feed emphasizes showing users new content based on their interests, which means much of what appears there typically comes from accounts you don’t follow.

With the exception of TikTok, if you interact frequently with a particular user, you’re more likely to see their content in your feed.

The algorithms on TikTok, Instagram Reels, and Instagram Explore prioritize showing you new content based on the type of posts and videos you engage with. For example, the more cute cat videos you watch, the more cute cat videos you’ll be shown.

YouTube looks at the creators you interact with, your watch history, and the type of content you view to determine suggested videos.

The more likes, shares, and comments a post gets, the more likely it is to be shown to other users. This momentum is the snowball effect that causes posts to go viral.

There are ways social media algorithms can benefit your child, such as creating a personalized experience and helping them discover new things related to their interests. But the drawbacks are also notable — and potentially concerning.

Since social media algorithms show users more of what they seem to like, your child's feed might quickly become overwhelmed with negative content. Clicking a post out of curiosity or naivety, such as one promoting a conspiracy theory, can inadvertently expose your child to more such content. What may begin as innocent exploration could gradually influence their beliefs.

Experts frequently cite “thinspo” (short for “thinspiration”), a social media topic that aims to promote unhealthy body goals and disordered eating habits, as another algorithmic concern.

Even though most platforms ban content encouraging eating disorders, users often bypass filters using creative hashtags and abbreviations. If your child clicks on a thinspo post, they may continue to be served content that promotes eating disorders.

Although social media algorithms are something to monitor, the good news is that parents can help minimize the negative impacts on their child.

Here are some tips:

It’s a good idea to monitor what the algorithm is showing your child so you can spot any concerning trends. Regularly sit down with them to look at their feed together.

You can also use a parental monitoring service to alert you if your child consumes alarming content. BrightCanary is an app that continuously monitors your child’s social media activity and flags any concerning content, such as photos that promote self-harm or violent videos — so you can step in and talk about it.

Keep up with concerning social media trends, such as popular conspiracy theories and internet challenges, so you can spot warning signs in your child’s feed.

Talk to your child about who they follow and how those accounts make them feel. Encourage them to think critically about the content they consume and to disengage if something makes them feel bad.

Algorithms influence what content your child sees when they use social media. Parents need to be aware of the potentially harmful impacts this can have on their child and take an active role in combating the negative effects.

Stay in the know about the latest digital parenting news and trends by subscribing to our weekly newsletter.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

⚖️ Is this Big Tech’s Big Tobacco moment? A landmark social media addiction trial is happening right now in Los Angeles. The trial centers on a 20-year-old woman who alleges that endless scrolling and other design features worsened her depression and suicidal thoughts. Snap and TikTok settled before the trial; Meta and YouTube are fighting the claims. Some observers are calling this Big Tech’s Big Tobacco moment, a reference to the tobacco litigation in the ’90s that exposed internal documents, led to warning labels, and reshaped public health policy.

Meta CEO Mark Zuckerberg and Instagram chief Adam Mosseri have testified so far. Internal documents shown in court suggest that Meta knew children below its minimum age were using its apps, that the company prioritized maximizing time spent scrolling, and that safety recommendations from experts were sometimes disregarded. Meta disputes the characterization, arguing the documents are cherry-picked and outdated.

What’s striking is that Meta’s own internal research found that parental supervision tools did not meaningfully curb teens’ compulsive use. Even when parents use the tools the platforms provide, behaviors don’t significantly change — a finding that reinforces something we’ve talked about often: screen time limits and parental controls are not set-it-and-forget-it solutions.

They’re tools. Helpful and necessary ones. But tools alone don’t teach judgment, emotional regulation, or resilience.

The timing of the trial is especially notable. The day after Adam Mosseri testified that heavy social media use may be “problematic” but not clinically addictive, a new longitudinal study published in Nature found that teens who struggled to describe their feelings or avoid unpleasant emotions were more vulnerable to developing social media addiction over time.

What does it all mean? This trial is ongoing. Researchers and lawmakers around the world are increasingly worried about compulsive use. Hundreds of families and school districts are suing major platforms. And more bellwether cases are coming. If juries consistently find that addictive design harmed minors, the financial and regulatory consequences could be enormous.

For parents, this is a reminder that:

We designed BrightCanary to help parents stay involved and curious in their children’s digital lives. Because technology safety is a skill, not a setting.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Believe it or not, we’re about halfway through the academic year. This is a great time to zoom out and reset goals — both academic and personal. These conversation-starters help teens connect their daily habits to their bigger ambitions.

🧍♀️ What is the internet like for a 15-year-old girl? In this evocative essay, an anonymous teen describes being inundated with misogyny online. (Language warning.) It’s a sobering reminder that algorithms don’t just show content — they shape culture.

🧸 The villain of Toy Story 5 is … tablets. Pixar’s most nostalgic franchise is confronting “iPad kid” culture head-on. The new trailer shows Woody, Buzz, and the gang competing with iPads for kids’ attention. Art imitates life, after all. What do you think about the trailer?

👾 Discord is rolling out age verification for users. What does it mean, and why is your teen so upset about it? We explain.

I’ve written a lot about how social media is detrimental to kids’ mental health. But witnessing the effort some teens in my life put into selfies motivated me to explore the impact these platforms have on young people’s self-esteem in particular. Does the pressure to be perfect online hurt the way they feel about themselves? I discovered the answer is a solid (and, frankly, unsurprising) yes.

Heightened attention to physical appearance and wavering self-esteem are normal for teens, due in part to developing bodies and an increased awareness of social comparison. Here’s how social media has supercharged this:

Social media prompts unhealthy comparisons in users of all ages. But adolescents' prefrontal cortexes aren’t fully formed, so they process videos and images they see online in a particularly harmful way, literally changing their still-developing brains.

Teens are bombarded with curated, heavily edited images online. Research suggests that these unrealistic beauty standards can significantly change their perception of attractiveness, including how they rank themselves in comparison.

It’s not just viewing altered images that’s a problem. Using filters and editing tools to maximize their own physical attractiveness can also lead to lower self-image. This is particularly stark among teens of color due to racial biases in social media beauty filters. Often modeled on white people, filters reinforce racist ideals of attractiveness.

This conversation often focuses on girls, but boys are also harmed. In one study, nearly every boy reported being exposed to content about appearance such as building muscle and having a certain jawline. Research shows that the more time boys spend on social media, the lower their body satisfaction.

Another way young boys are impacted is that they’re frequently fed a narrow idea of what it means to be male. Exposure to content insisting they must build muscle and have lots of money to impress girls is associated with anxiety, feelings of isolation, and low self-esteem in boys.

While self-esteem around physical appearance takes a particular hit, it’s not the only area that suffers. Constant comparison with others’ social lives and achievements creates feelings of not measuring up.

Here are some signs that may indicate your teen’s self-esteem is suffering due to social media:

Here’s how to help your teen’s self-esteem survive social media:

Social media algorithms are like echo chambers, amplifying the number of image-focused posts teens are exposed to. In fact, two in three boys report being fed content that promotes stereotypes about masculinity without seeking it. Help your teen periodically reset their algorithm.

Adolescents with strong offline relationships exhibit higher self-esteem. Encourage your teen to hang with their friends in person.

Help your teen understand the interaction between social media and self-image. Give them opportunities to process those feelings and encourage them to pull back or take a break from social media when it makes them feel bad.

Adults aren’t immune to the vicious cycle of social media comparison. But seeing you negatively compare yourself to what you see online sets a harmful example for your child.

This is an instance where we need to fake it till we feel it, folks. Work out your own social media-induced insecurities with a friend or therapist and keep that business away from your impressionable offspring.

Overall, there’s a societal acceptance of body dissatisfaction in teens (especially girls). This creates a dangerous environment for teens because their feelings of inadequacy over what they see online are easily overlooked as typical.

Monitor what your child does online and how it makes them feel, and don’t dismiss your instincts when you suspect something is wrong.

BrightCanary helps you keep an eye on social media’s impact on your teen.

You get:

Exposure to heavily edited images, unrealistic beauty standards, and unhealthy portrayals of gender roles on social media negatively impacts teens’ self-esteem. You can help by keeping an eye on your child’s activity online, resetting their algorithm, teaching them digital literacy, and modeling a healthy relationship with social media.

BrightCanary helps you monitor your child’s activity on social media by scanning everything they type across all apps. Download today to get started with a free trial.

🎰 Gambling is becoming alarmingly common among boys: A new report from Common Sense Media is a wake-up call for parents: 36% of boys ages 11–17 gambled in the past year. And we aren’t talking about slots or poker — the report looked at sports betting apps, loot boxes, skin cases, gacha-style rewards inside video games, and social media feeds that normalize betting. Nearly one in four boys have engaged in gaming-related gambling, and most spent real money doing it. Some stats:

While many boys describe gambling as “low-stakes” or just part of bonding with friends or family (one-third have gambled with family members), 27% of boys who gamble report negative effects like stress or conflict. The report also highlights a major loophole: while gambling is illegal for minors, in-game gambling mechanics often aren’t regulated the same way, making it easy for kids to spend (or lose) real money.

What parents can do: Start conversations early, recognize that gambling comes in many forms, set clear rules around spending and games, monitor influences (friends, online activity, and games), and watch for warning signs like secrecy or emotional changes.

🤖 The risks of AI in schools may outweigh the benefits: A new study from the Brookings Institution suggests that while AI tools are being rapidly adopted in classrooms, the risks currently outweigh the benefits — especially for kids’ cognitive and social development. Researchers warn of a “doom loop” where students offload thinking to AI, weakening problem-solving and learning skills over time. There are also concerns about kids developing social and emotional habits through chatbots designed to agree with them, making real-world disagreement and collaboration harder.

UNICEF recommends that parents talk to kids early about what AI is, warn against sharing personal information with AI tools, watch for signs of overuse or behavioral changes, and stay involved in how AI is used for school and beyond. Not sure where to start? Check out our free AI safety toolkit for parents (plus a free code for BrightCanary — send it to another parent!).

📵 Why screen time limits alone aren’t enough anymore: The American Academy of Pediatrics says it’s time to rethink how we manage kids’ screen use. New guidance emphasizes that time limits alone don’t address the real issue: digital platforms are intentionally designed to keep kids engaged through autoplay, notifications, and algorithmic feeds.

Screen time doesn’t tell the whole story anymore. Instead of rigid rules, parents are encouraged to focus on how screens are used, what content kids are engaging with, and how digital life affects sleep, learning, and mental health. Think less stopwatch, more strategy. BrightCanary is designed to help parents stay informed about their child’s activity across all the apps they use — so you know not only what apps your kiddo is using, but also what they encounter. Here’s how to start monitoring (without breaking trust).

Many kids don’t think they’re gambling … even when they absolutely are. Your goal is to help kids recognize risks before habits form. These conversation-starters can help you open the door without judgment:

📱 TikTok gets an American makeover: TikTok officially has US-based owners. So, the app isn’t going anywhere — but the experience won’t stay the same. Experts say changes will likely show up first in moderation and data practices, not features. If your child uses TikTok, use the Family Pairing feature to set guardrails around their use.

🧹 YouTube takes down major AI slop channels: Following a report showing massive growth in low-quality AI-generated content, YouTube appears to have removed several top “AI slop” channels with millions of subscribers.

🪪 Discord rolls out global age verification: Starting next month, Discord will require face scans or ID for full access. Accounts default to a teen-safe experience unless verified as adult — with stricter filters and protections baked in.

According to a survey by the Pew Research Center, 64% of teens report using generative AI chatbots like ChatGPT for everything from homework help to companionship. But a startling concern is emerging among experts. Early research suggests that overreliance on generative AI could lead to cognitive atrophy and the loss of brain plasticity. Or, as the kids say: brain rot.

As a parent who is determined to teach my kids how to use AI responsibly, I’ve been watching this issue closely. Here’s what to know about how overusing AI impacts the brain and how to protect your child’s cognitive abilities in the face of this new technology.

Generative AI is in its infancy, and so is the research on this topic. Still, cognitive offloading is the likely culprit behind AI’s impact on kids’ cognitive health.

Cognitive offloading happens when people use external tools or resources to reduce mental effort. On the one hand, this process can help people accomplish tasks faster. On the other hand, all of that offloading can be harmful for developing brains.

Experts suggest cognitive offloading erodes critical thinking and reasoning skills.

When AI always provides the answers, kids miss out on the opportunity to develop foundational life skills like problem-solving and deep thinking.

For example, learning to write is deeply intertwined with learning to think. That’s why offloading writing tasks to AI degrades students’ ability to organize and express their thoughts.

When kids offload tasks to AI without doing any legwork, their ability to perform independent research and analyze materials decreases. Students end up with only a superficial understanding of information: they can state the what, but don’t grasp the why or how.

Research has shown that younger users demonstrate a higher dependence on AI tools when compared to older users, and that the corresponding decline in their critical thinking is also greater.

The brain is particularly malleable during childhood and adolescence, making kids and teens especially vulnerable to the impacts of AI.

Because younger children are more likely to anthropomorphize, or assign human properties to inanimate objects, experts suggest that even simple praise from an AI chatbot can greatly change their behavior.

The sooner you start teaching your child to use AI smartly, the more you can buffer its effect on their brain.

To help your child gain AI literacy, teach them:

AI isn’t inherently harmful. The key is using it to support thinking, not replace it. Encourage your child to:

BrightCanary helps you monitor how your child engages with AI by scanning everything they type on their iPhone or iPad. Use it to:

Overreliance on generative AI may lead to a decline in cognitive skills such as critical thinking, reasoning, and the ability to analyze and understand information. Because their brains are especially malleable, children and teens are particularly vulnerable to the impacts of AI on the brain. It’s important to teach your child AI literacy, show them how to use the tool responsibly, and monitor how they use it.

BrightCanary helps you monitor your child’s activity on the apps they use the most, including all AI platforms. Download today to get started for free.

It will come as no surprise to parents that YouTube is all the rage with kids. In fact, recent research suggests that nine out of 10 kids use YouTube, and kids under 12 favor YouTube over TikTok. With all of YouTube’s popularity, how can you make the platform safer for your child? Read on to learn how to set parental controls on YouTube.

As the name implies, YouTube is a platform for user-generated content. While this creates an environment ripe for creativity, it also means there’s a little bit of everything, including videos featuring violent and sexual content, profanity, and hate speech.

Because YouTube makes it easy for kids to watch multiple videos in a row, there’s always the chance your child may accidentally land on inappropriate content. In addition, the comment sections on YouTube videos are often unmoderated and can be full of toxic messages and cyberbullying.

Due to the risks, it’s important that parents monitor their child’s YouTube usage, discuss the risks with them, and use parental controls to minimize the chance they’re exposed to harmful content.

YouTube offers a variety of options for families looking to make their child’s viewing experience as safe as possible. Here are some important steps parents can take:

A supervised account will allow you to manage your child’s YouTube experience on the app, website, smart TVs, and gaming consoles.

There are three content setting options to choose from:

Along with content settings, here are some additional YouTube parental controls to explore:

Parents will also soon be able to set specific restrictions on YouTube Shorts, the platform's short-form video experience similar to TikTok, including time limits on Shorts and custom reminders for bedtime and screen time breaks. As of this writing, these features aren't yet available.

For step-by-step instructions for setting up parental controls, refer to this comprehensive guide by YouTube.

While YouTube offers an impressive array of parental control settings, you have to manually review your child’s content and watch history in order to catch any concerning content.

BrightCanary is a parental monitoring app that fills in the gaps. Here’s how BrightCanary helps you supervise your child’s YouTube activity:

For parents looking for additional peace of mind, YouTube Kids provides curated content designed for children from preschool through age 12.

For households with multiple children, parents can set up an individual profile for each child, so kids can log in and watch videos geared toward their age. YouTube Kids also allows parents to set a timer of up to one hour, limiting how long a child can use the app.

Parents should be aware that switching to YouTube Kids isn’t a perfect solution. There’s still a chance that inappropriate content may slip through the filters.

In fact, a study by Common Sense Media found that 27% of videos watched by kids 8 and under are intended for older audiences. And for families concerned about ads, YouTube Kids still has plenty of those — targeted specifically toward younger children. Keeping an eye on what your child is watching and talking to them about inappropriate videos and sponsored content is still a good idea, even with YouTube Kids. Fortunately, you can also monitor YouTube Kids with BrightCanary.

It’s also worth noting that kids under 12 who have a special interest they want to pursue may find YouTube Kids limiting. A child looking to watch Minecraft instructional videos or do a deep dive into space exploration, for example, can find a lot more options on standard YouTube — plenty of which are perfectly appropriate for kids, even if they aren’t specifically geared toward them. It’s cases like this where parental controls and active monitoring are especially useful.

YouTube is a popular video platform with plenty to offer kids. It’s not without risks, though. Parents should monitor their child’s use and take advantage of parental controls to ensure a safe, appropriate viewing experience.

🤖 More than 20% of YouTube is now AI slop: If your child’s YouTube feed feels … weird lately, you’re not imagining it. A new report from video editing firm Kapwing found that 21% of the first 500 YouTube Shorts shown to a brand-new account were AI-generated, and 33% qualified as “brain rot” content: hyper-stimulating, low-effort videos designed to farm views rather than inform or entertain. And it’s not just Shorts: The Guardian reports that nearly 1 in 10 of the fastest-growing YouTube channels globally now post only AI-generated videos.

Algorithms don’t care if content is junk — they care if it keeps kids watching. This is a good moment to talk with your child about how algorithmic recommendation systems work, why “popular” doesn’t always mean “good,” and how to recognize content that’s meant to hook, not help.

🤝 Teens prefer AI chatbots that feel like “best friends” — and that’s a red flag: New research raises concerns about AI chatbots designed to sound like emotionally supportive humans. Researchers found that most adolescents prefer AI that communicates like a “best friend,” rather than systems that make it clear they’re not human. Teens who preferred their AI BFF reported higher stress and anxiety, and lower-quality relationships with family and peers — indicating that they may be more emotionally vulnerable when it comes to befriending AI.

The authors argue that clear boundaries, repeated reminders that AI isn’t human, and stronger AI literacy should be treated as core safety features, not optional add-ons. If your child uses AI chatbots like ChatGPT or Polybuzz, reinforce that they shouldn’t replace real relationships or emotional support.

📱 Kids are spending over an hour of the school day on their phones: According to new research published in JAMA, American teens ages 13–18 spend an average of 70 minutes of the school day on their phones, mostly on social media apps like TikTok, Instagram, and Snapchat. They also spend an average of nearly 15 minutes each day on gaming apps and almost 15 minutes on video apps such as YouTube, all during school hours.

If your school district isn’t among the growing number banning phones, experts recommend keeping phones out of reach during class time, such as in lockers or pouches. At the very least, have your child turn off their phone when they get to school or use Apple Screen Time to set Do Not Disturb limits. The goal isn’t punishment. You’re helping kids protect their attention while they’re still learning how.

Use these conversation-starters to spark meaningful discussions this week about attention and connection:

✍️ TikTok signed a deal creating a new U.S.-based joint venture backed by Oracle and other American investors. It’s still unclear whether U.S. users will need to migrate to a new app, but ByteDance says it won’t control U.S. user data or the algorithm.

📵 We’re one month into Australia’s social media ban for kids under 16. Some teens report feeling “free” and more present, while others have quickly found workarounds, using fake birthdays or switching to messaging apps like WhatsApp and Discord, the BBC reports.

💬 Character.AI and Google have agreed to settle lawsuits brought by families of teens who harmed themselves after interacting with AI chatbots. Character.AI has since banned users under 18 from open-ended chats.

📍 A Texas father used phone-based parental controls to track and help rescue his 15-year-old daughter after she was kidnapped. It’s a sobering reminder that safety tools matter — particularly in situations where time is of the essence.

Teen crime in the U.S. is historically low, but that statistic masks a troubling trend parents can’t afford to ignore. In recent years, there’s been a disturbing uptick in violence linked to social media, from fight compilations and “stomp outs” to gang activity and assaults coordinated online.

This trend raises a critical question: does social media promote violence among teens? In this article, we’ll break down how social media and violence interact, what the research says about teen behavior, and steps parents can take to reduce their child’s exposure and risk.

Social media-linked violence among teens is on the rise. After a pandemic-era spike, youth violence overall has been on a downward trajectory. But recently, a number of cities have seen an increase in violent crimes involving youth, with police citing social media as a frequent contributor to incidents.

Numerous studies have found a link between witnessing violent activity on social media and real-life violence among teens. According to a 2024 report by the Youth Endowment Fund (YEF), nearly two-thirds of teens who reported perpetrating a violent incident in the previous 12 months said that social media played a role.

This correlation is likely due to several factors:

Social media sites use complex sets of rules and calculations, known as algorithms, to prioritize which content users see in their feeds. Here’s what you need to know about social media algorithms and violent content shown to teens:

Here are some actions you can take today to combat the negative effects of social media and violence on your child.

Although teen violence has decreased overall in recent years, there has been a spike in violent incidents where social media played a role. In addition, exposure to violent content on social media is linked to real-world violence among teens. Parents should help their children understand the ways social media can promote violence, periodically reset their algorithms, and monitor their online activity for violent content.

BrightCanary helps you monitor your child’s activity on the apps they use the most and sends you alerts when there’s an issue, including if they seek out or engage with violent content. Download today to get started for free.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

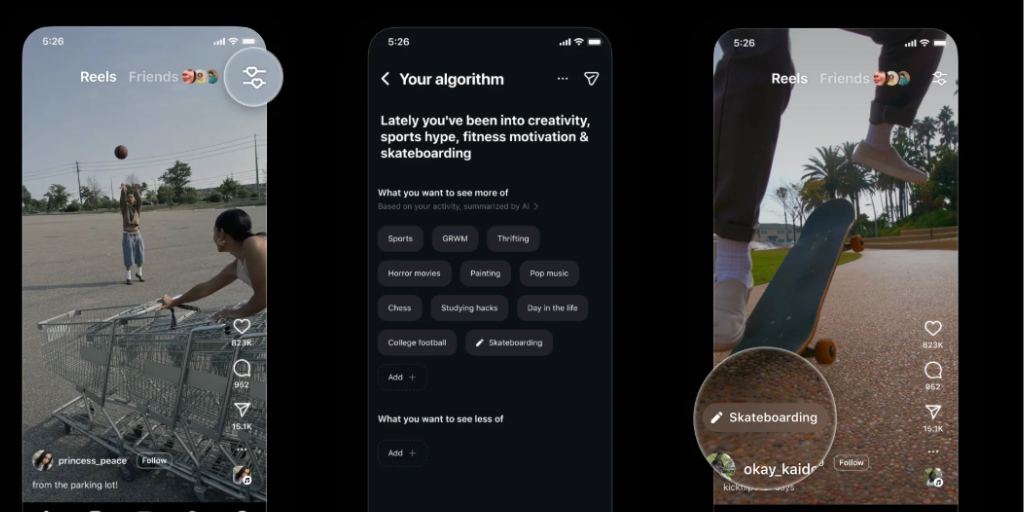

👉 Instagram will let your child pick what shows up in Reels: Instagram is doing something pretty unusual for a social media platform: explaining what’s under the hood. With a new feature called “Your Algorithm,” users can now see a summary of their recent interests and choose topics they want to see more or less of, like dialing up “jiu jitsu” and dialing down “AI cat videos.”

For parents, this product update is also a conversation starter with your teen. Social media algorithms aren’t neutral. They learn from behavior, reward attention, and quietly shape what kids see day after day. This feature offers a rare chance to pause, scroll through your teen’s interest summary together, and ask:

Why do you think Instagram has decided this is one of your interests?

How do videos like this make you feel after watching them for a while?

What would you want to see more of (or less of) if you had the choice?

Our take: Tools like this don’t “fix” social media, but they do help kids understand that feeds are designed to hook them based on their interests. The more teens understand how algorithms work, the better equipped they are to use platforms intentionally instead of getting pulled along for the ride. For more on this, browse our parent’s guide to social media algorithms, and learn how to reset your child’s algorithm on popular platforms.

🎁 Thinking about a smartphone for the holidays? Read this first: If a phone is on your child’s holiday wishlist, new research suggests it’s worth waiting. A large study published in Pediatrics found that kids who got their first smartphone before age 13 had significantly worse health outcomes than peers without phones:

Additionally, a new study published by the American Psychological Association links heavy short-form video use to poorer mental health and weaker attention spans.

The median age for getting a phone in the U.S. is now 11, which means many kids are entering middle school with a powerful device and very few guardrails. However, the takeaway from experts isn’t panic: it’s constraints. Use parental controls like Apple Screen Time to set restrictions on device use, and use a monitoring app like BrightCanary to stay informed about what your child encounters online.

One simple, high-impact step? Keep phones out of bedrooms overnight. It’s not a cure-all, but it’s one of the easiest ways to protect sleep and manage device boundaries, even if your child already has a phone.


A few questions to help kids think critically about feeds, phones, and habits:

📰 We were included in Wirecutter’s roundup of the best parental control apps! Check us out under “Other parental control apps worth considering.”

🚫 “It was kind of scary, because social media is so present in my life, and to think it could be taken away like that so suddenly felt weird.” Australia’s social media ban kicked in last week, effectively banning teens under age 16 from using Instagram, YouTube, TikTok, and other major platforms. Here’s how teens are responding.

🤖 Researchers warn that popular AI tools are offering dieting advice, tips for hiding disordered eating, and even generating hyper-personalized “thinspiration” images. Experts say this content can be especially dangerous for vulnerable teens — and much harder to spot than traditional social media posts.