Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

Today, the House overwhelmingly voted to pass a bill that would effectively ban TikTok in the United States. The bill now heads to the Senate, where its future is less certain. The measure, H.R. 7521, would ban applications controlled by foreign adversaries of the United States that pose a clear national security risk.

For years, US officials have dubbed TikTok a national security threat. China’s intelligence laws could enable Beijing to snoop on the user information TikTok collects. Although the US government has not publicly presented evidence that the Chinese government has accessed TikTok user data, the House vote was preceded by a classified briefing on national security concerns about TikTok's Chinese ownership.

If H.R. 7521 is passed, ByteDance will have 165 days to sell TikTok. Failure to do so would make it illegal for TikTok to be available for download in U.S. app stores. On the day of the vote, TikTok responded with a full-screen pop-up that prompted users to dial their members of Congress and express their opposition to the bill. In a post on X, TikTok shared: “This will damage millions of businesses, deny artists an audience, and destroy the livelihoods of countless creators across the country.”

“It is not a ban,” said Representative Mike Gallagher, the Republican chairman of the House select China committee. “Think of this as a surgery designed to remove the tumor and thereby save the patient in the process.”

The bottom line: The bill passed the House Energy and Commerce Committee unanimously, which means legislators from both parties supported it. Reuters calls this the “most significant momentum for a U.S. crackdown on TikTok … since then-President Donald Trump unsuccessfully tried to ban the app in 2020.” The legislation's fate is less certain in the Senate. If the bill clears Congress, though, President Biden has already indicated that he would sign it.

If your child uses TikTok, it’s natural that they may have questions about the ban (especially if they dream of becoming a TikTok influencer). Nothing is set in stone, and it’s entirely possible that TikTok would simply change ownership. However, this is a good opportunity to chat with your kids about the following talking points:

Set your child’s account to private, limit who can message them, and limit reposts and mentions. With a few simple steps, you can make Instagram a safer place for your kid. Here’s how to get it done.

Yikes — you found out that your child has been sending concerning videos, images, or messages to someone else. We break down some of the reasons kids send inappropriate messages and how to approach them.

🏛️ An update on Florida’s social media ban: as expected, Governor Ron DeSantis vetoed a bill that would have banned minors from using social media, but signaled that he would sign a different version anticipated from the Florida legislature.

📵 Nearly three-quarters (72%) of U.S. teens say they feel happy or peaceful when they don’t have their smartphones — but 44% say they feel anxious without them, according to Pew Research Center.

📖 Do digital books count as screen time? The benefits of reading outweigh screen time exposure, according to experts.

🗺️ How can parents navigate the challenges of technology and social media? Set limits, help your child realize how much time they spend on tech, and model self-restraint. Check out these tips and more via Psychology Today.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

A Florida bill that bans minors from using social media recently passed the House and Senate. The bill, HB1, is now on Governor Ron DeSantis’ desk. He’ll have until March 1 to veto the legislation or sign it into law.

DeSantis has previously said that he didn’t support the bill in its current form, which bars anyone younger than 16 from creating new social media accounts and closes existing accounts for kids younger than 16. (DeSantis has called social media a “net negative” for young people, but said that, with parental supervision, it could have beneficial effects.) Unlike online safety bills passed in other states, HB1 doesn’t allow minors to use social media with parental permission: if you’re a minor, you can’t have an Instagram.

Even if DeSantis vetoes the bill, the fact that such an aggressive bill passed both the House and Senate with bipartisan support signals that the conversation about online safety legislation is reaching a tipping point.

The Kids Online Safety Act (KOSA), which implements social media regulations at the federal level, also recently reached a major milestone: an amended version gained enough supporters to pass the Senate. If it moves to a vote, it would be the first child safety bill to get this far in 25 years, since the Children's Online Privacy Protection Act passed in 1998.

If passed, KOSA would make tech platforms responsible (aka have a “duty of care”) for preventing and mitigating harm to minors on topics ranging from mental health disorders and online bullying to eating disorders and sexual exploitation. Users would also be allowed to opt out of addictive design features, such as algorithm-based recommendations, infinite scrolling, and notifications.

In a previous iteration of KOSA, state attorneys general were able to enforce the duty of care. However, some LGBTQ+ groups were concerned that Republican AGs would use the law to take action against resources for LGBTQ+ youth. The amended version leaves enforcement to the Federal Trade Commission, a move that led a number of advocacy groups, including GLAAD, Human Rights Campaign, and The Trevor Project, to state they wouldn’t oppose the new version of KOSA if it moves forward. (So, not an endorsement, but not-not an endorsement.)

What’s next? As of this publication, DeSantis has not signed or vetoed Florida’s social media ban. Plus, KOSA has yet to be introduced to the Senate for a vote, and it’s flying solo — there is no companion bill in the House, which would give the House and Senate time to consider a measure simultaneously.

However, the fallout from January’s Senate Judiciary Committee hearing, in which lawmakers grilled tech CEOs about their alleged failure to stamp out child abuse material on their platforms, may build momentum for future online safety legislation. We’ll keep our eyes peeled.

Spotify offers everything from podcasts to audiobooks — and with all of that media comes content concerns. The good news: both Spotify Kids and Spotify parental controls allow kids to enjoy their tunes while keeping their ears clean.

If you remember watching the pirate-themed anime series One Piece, you might be excited about the recently released live-action remake now streaming on Netflix and eager to share your love of the show with your kids. But is One Piece for kids?

🔒 Did you know that 90% of caregivers use at least one parental control? That’s according to a new survey from Microsoft.

📱 Social media is associated with a negative impact on youth mental health — but a lot of the research we have tends to focus on adults. In order to really understand cause and effect, researchers need to talk to teens about how they use their phones and social networks. Read more via Science News.

🛑 Meta announced the expansion of the Take It Down program, which is “designed to help teens take back control of their intimate images and help prevent people — whether it’s scammers, ex-partners, or anyone else — from spreading them online.”

Odds are high that your child is currently involved in at least one group chat if they own a smartphone.

From social media to text messages, group chats are the modern equivalent of cliques. However, just like cliques that cluster next to lockers and gossip that spreads through whispers, group chats come with their own set of issues. It’s crucial for parents to understand this digital landscape so they can guide and support their kids through the ups and downs.

When posting on social media, teens have to negotiate the dynamics of different audiences seeing their posts. But group chats can feel more private and protected, allowing kids to share inside jokes and video calls with a smaller group of friends. As opposed to passively scrolling through a feed, these more active types of behavior can support greater perceptions of social support and belonging. Being part of a group chat, and keeping up with it, can help teens express their identity and feel closer to their friends.

At the same time, group chats come with risks.

We’re big proponents of staying involved in your child’s digital life. That includes setting boundaries around device usage and regularly monitoring their text threads and social media inboxes.

It’s also important to keep the lines of communication open. Ask your kid who they’re messaging, and let them know they can come to you when problems arise. You can also use a text monitoring service like BrightCanary to keep tabs on their messages and step in when they encounter anything concerning.

You know your child best. Check in with them, start the conversation about personal safety, and discuss when it’s time to leave a chat — especially if things turn harmful or make them feel bad.

Since 2018, Instagram users have had the option to create a list of Close Friends and use it to limit who can see their Stories. Recently, Instagram expanded this option to include posts and Reels. We break down why we love this for parents.

It’s a familiar scene of modern parenting: your kid, hunched over their iPhone, furiously texting. You, dying to know what they’re saying. But should parents read their child’s text messages? If you decide to monitor your kid’s text messages on iPhone, how do you do it?

🏛️ The problems with social media got a lot of attention late last month around the Senate Judiciary Committee hearing, in which lawmakers grilled five tech CEOs about concerns over the effect of technology on youths. Following the 3.5-hour hearing, some experts say that the momentum will help pass rules to safeguard the internet’s youngest users, while others say congressional gridlock will keep potential legislation in stasis.

💼 One takeaway from the Senate hearing: don’t mess with the APA because they will fact-check your claims. After Meta CEO Mark Zuckerberg claimed social media isn’t harmful to mental health, Mitch Prinstein, PhD, chief science officer of the American Psychological Association, clapped back and accused Zuckerberg of cherry-picking from the APA’s data.

🤖 How can AI help give teens protection and privacy on social media? Afsaneh Razi, assistant professor of information science at Drexel University, writes about how machine learning programs can identify unsafe conversations online (the same approach that BrightCanary takes!).

Last week, New York City Mayor Eric Adams issued a health advisory about social media due to its impact on children. Mayor Adams designated social media an “environmental health toxin,” stating, “Companies like TikTok, YouTube, [and] Facebook are fueling a mental health crisis by designing their platform with addictive and dangerous features. We cannot stand by and let big tech monetize our children's privacy and jeopardize their mental health.”

The health advisory aligns with findings from a recent survey published by Common Sense Media, which examined the state of kids and families in America in 2024. Based on responses from about 1,220 children and teens aged 12–17 nationwide, more adolescents are concerned about their mental health today than were previous generations.

Some notable statistics from the survey:

Social media isn’t the only factor impacting the youth mental health crisis. In a conversation with Education Week, Sharon Hoover — co-director of the National Center for School Mental Health — pointed to a range of factors that could be contributing to declining mental health among kids and teens, such as housing insecurity and food insecurity. These issues were exacerbated by the pandemic, to the point that living in an area with more severe COVID-19 outbreaks was deemed a risk factor for youth mental health symptoms.

Last year, the U.S. Surgeon General issued a health advisory warning that social media is a concern for adolescents. Excessive social media use is associated with depression and anxiety, as well as downstream effects from negative impacts on sleep quality. The key word here is “excessive” — it’s important for parents to set guardrails around the level of access kids have online, including how much time they spend on social media (and screens in general).

Here are some places to start:

Social media monitoring refers to supervising your child’s activity on social networks, such as Instagram and TikTok. The most effective plan for monitoring a child’s social media accounts employs a mix of approaches. Here are some options to explore.

A frustrating number of parental control settings are designed in such a way that kids can easily bypass or even change them, rendering them all but useless. Luckily, there are options that allow parents to set boundaries and have some peace of mind.

👀 Do social media insiders let their kids use platforms like Instagram and TikTok? Parents working at large tech companies said they did not trust that their employers and their industry would prioritize child safety without public and legal pressure, the Guardian reports.

📱 Meta has rolled out a few updates for teen users: new “nighttime nudges” will remind teens to go to sleep when they use Instagram late at night, and Instagram will restrict strangers from sending unsolicited messages to teens who don’t follow them. Meta will also let guardians approve or deny changes that teens make to their default privacy settings.

🏛️ The Florida House has passed a bill to ban social media accounts for users under age 16. The bill doesn’t list which platforms would be affected, but it targets any social media site that tracks user activity, allows children to upload material and interact with others, and uses addictive features designed to cause excessive or compulsive use.

📍 With just a few pieces of information, this TikToker can pinpoint your exact location — and it’s a great lesson in online safety.

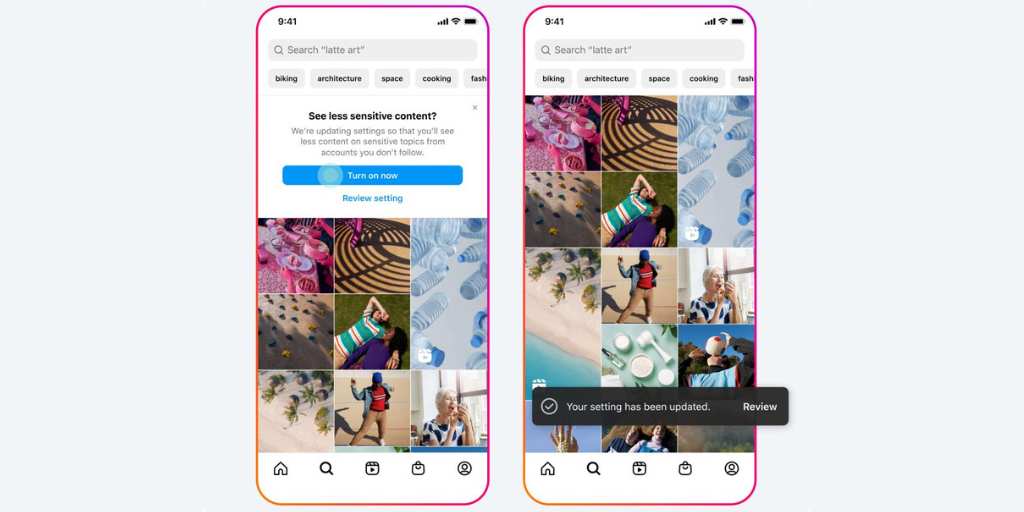

In light of mounting claims that companies aren’t doing enough to protect the mental well-being of young people, Meta recently announced that it will restrict the type of content that teenagers can see on Facebook and Instagram.

The updated settings are designed to “give teens more age-appropriate experiences on our apps.” Meta will default all teen users to the most restrictive content settings, which make it more difficult for people to come across potentially sensitive content or accounts. Teens will receive a prompt to update their privacy settings and restrict who can contact them. Meta will also prevent teen users from seeing content that references self-harm, eating disorders, or nudity, even from people they follow.

In October, a bipartisan group of 42 attorneys general announced that they’re suing Meta, alleging that the company’s products are hurting teenagers and contributing to mental health problems. New York Attorney General Letitia James said, “Meta has profited from children’s pain by intentionally designing its platforms with manipulative features that make children addicted to their platforms while lowering their self-esteem.”

In November, Meta whistleblower Arturo Bejar told lawmakers that the company was aware of the harm its products cause young users, but failed to fix the problems. Meta and other tech companies are incentivized to keep young users on their platforms — a recent study found that social media platforms (including Facebook, Instagram, and YouTube) generated $11 billion in advertising revenue from U.S. users younger than 18 in 2022 alone.

Our take: Even before this announcement, Meta already had protections in place for younger users. (We’re fans of Instagram’s Family Center.) However, many parents aren’t aware of these features, and it makes a lot of sense to automatically implement protections that are developed in alignment with experts in adolescent development. While we wish those protections were instituted sooner (before everyone started suing Meta), we’re still calling this a win.

At the same time, the success of these features depends on two things: whether or not your child listed their age correctly in their account, and the level of supervision parents have over their children’s accounts. If your child created their own online account, double-check that they’ve listed their age correctly.

And if you don’t already have a parental monitoring practice in place, now’s the time to start. We always suggest having regular tech check-ins with your kids to go through their phone together, creating space to discuss internet safety issues, and using a monitoring tool like BrightCanary to get alerts if your child encounters anything inappropriate.

So, you found out that your kid saw something definitely meant for adults. Don’t panic! Here’s how to talk to kids about inappropriate content they may encounter online.

Platforms that allow users to interact are prime places for predators to solicit kids. From YouTube comments to linked social accounts, there are still several ways strangers can talk to your kids that parents should know.

⚖️ The Washington Post covers the states looking to pass online safety bills in 2024, including California, Minnesota, Maryland, and New Mexico. In Florida, a bill that could ban minors from using social media is up for legislative consideration today.

📱 A new study says that almost half of British teens feel addicted to social media. Out of 7,000 respondents, 48% said they agreed or strongly agreed with the statement, “I think I am addicted to social media.” A higher proportion of girls agreed compared to boys (57% vs. 37%).

📖 How is growing up in public shaping kids’ self-esteem and identity? Pamela B. Rutledge, Ph.D., discusses how parents should actively engage with kids' digital activities as guides, not intruders or spies. She also reviews Devorah Heitner's new book about kids coming of age in a digital world: Growing Up in Public.

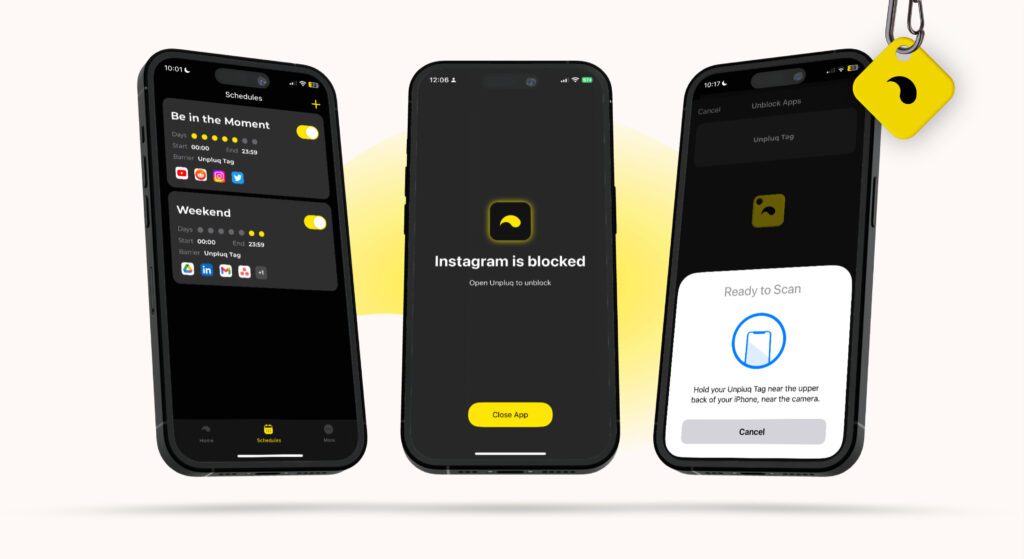

Does this sound familiar? You’re about to go to bed, but you quickly check Instagram — only to find yourself an hour later, still scrolling and sending parenting memes to friends. Or maybe your teenager, despite trying various apps and settings to curb screen time, inevitably gravitates back to their phone when they should be studying.

It’s a scene that plays out in households throughout the country, a testament to the addictive allure of social media and modern technology for both parents and kids. Enter: Unpluq. Founded in 2020, Unpluq promises to help you reclaim 78 minutes of your day from screens. It combines a screen time management app with a unique physical tag, which acts as a key to access blocked apps. That action is meant to change your screen time habits, making scrolling or opening apps a conscious choice.

We emailed with Caroline Cadwell, Unpluq CEO and co-founder, about the habit loops that keep us scrolling, how parents can model appropriate digital behavior for their kids, and the future of screen time management.

What inspired you to create Unpluq, and how do you believe it can play a role in fostering a healthier digital environment at home?

I realized that I was always connected and overworked. Walking my dog? Responding to a Slack message. Dinner with my partner? I just need to do one more thing on the computer. My cofounders, Tim and Jorn, had similar struggles. Tim lost sleep to endless scrolling and watching short videos before bed. Jorn was distracted from his studies. There was no intentionality to it. We didn't have control of our time.

We noticed that existing tools like Screen Time on iOS and Digital Wellbeing on Android were too easy to bypass. With Tim’s background in interaction design and our understanding of Rational Override Theory — basically, making the things you don’t want to do, harder to do — we developed the Unpluq Tag.

It acts as a physical, wireless key to your addictive apps. By using physical interruption, we’ve been able to tap into our brain’s ability to make conscious decisions over automatic, addictive behavior.

As parents and as adults, we're constantly setting examples for the kids around us. But trillions of dollars have been poured into keeping your attention on that screen. We know that more time with our devices makes us unhappy, and we don't want that for the next generation — but it is so hard to break the behavior pattern without effective tools.

Many people equate mindfulness with reducing screen time, but in today's digital age, complete disconnection isn't always feasible. How does Unpluq strike a balance between necessary connectivity and mindful detachment?

Life goes fast with smartphones. We constantly have to process so much information at once. Some of us wish we could go back to the Nokia 5160 era, but we still rely on our phones for essential tasks like banking, work, and socializing.

That's why leaving our phones in another room, using airplane mode, and deleting social media apps aren't solutions that work for people. "Digital detox" is a popular phrase, but it’s unsustainable. How many people do you know who announced they were leaving Facebook for a while, who cropped back up again a few days later?

What Unpluq allows you to do is add a layer of intention to how you use your phone. That makes it harder to slip into unwanted patterns, encourages lasting habit changes, and allows you to follow through with your intention to read a book or spend quality time with your kids.

In an interview with GeekWire, you described Unpluq as something that helps people “overcome what has been engineered against the very biology of being human.” This is such a fascinating comment, particularly when considering research that shows babies react negatively when they see their parents engrossed in their phones. Can you expand more on your statement and explain how you believe Unpluq can help people be more present for their families?

For the past decade, as smartphones have become more commonplace, we’ve seen a rise in mental health issues, especially among young adults. Rates of anxiety, depression, and suicide have increased. It’s no surprise that these outcomes strongly correlate with screen time.

The attention economy, fueled by features like the “endless scroll” and “like button,” exploits the parts of our brain associated with reward and pleasure. It’s no shock that these technologies and features can be labeled addictive. It’s unreasonable to tell a human brain, programmed to seek reward, to stop seeking it.

Since the launch of Unpluq, what feedback have you received from parents?

The happiest feedback I've gotten from families using Unpluq is that as parents, they were able to set a good example. They were able to be present with their children.

One customer said he's been more present at dinner, when previously, he was still engaged with work. Another said, “My son, 13, is a big fan of Unpluq. He used to scroll TikTok and Instagram for hours and hours — now he does his homework.”

It's pretty universal that parents want to be the best they can for their kids, to raise them as well as they can, and to set them up for success. Much like any other important life skill, parents have to teach their kids what responsible phone and internet usage looks like, how to self-regulate, and how to moderate their intake. By and large, we’re failing to do so because we lack effective tools.

A friend is an elementary school teacher. She asked her class what their parents could do better, and roughly 80% of the kids said some version of "stop using the phone and pay attention to me when I'm talking." It's heartbreaking — but understandable, too.

What has surprised you most about your work with Unpluq?

The lasting change that Unpluq helps people accomplish has surprised and delighted me. Most people continue to use it long-term, saving an average of one hour and 22 minutes a day. That means, in a year, they've saved 35 waking days of their life. How incredible is it to gain an extra month every year, all in your control?
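For readers who want to check the math behind that figure, here is a rough Python sketch. It takes the quoted saving of one hour and 22 minutes (82 minutes) a day and converts a year of it into "waking days." The length of a waking day is not stated in the interview, so the 14-hour and 16-hour values below are assumptions for illustration only.

```python
MINUTES_SAVED_PER_DAY = 82  # one hour and 22 minutes, as quoted in the interview


def waking_days_saved(minutes_per_day: float,
                      waking_hours_per_day: float,
                      days: int = 365) -> float:
    """Convert a daily time saving into 'waking days' over a period."""
    total_hours = minutes_per_day * days / 60
    return total_hours / waking_hours_per_day


if __name__ == "__main__":
    # Assumed lengths of a waking day; neither value comes from the source.
    for waking_hours in (14, 16):
        saved = waking_days_saved(MINUTES_SAVED_PER_DAY, waking_hours)
        print(f"{waking_hours}-hour waking day: about {saved:.1f} days a year")
```

Under the 14-hour assumption the result lands close to the 35 days quoted; with a 16-hour waking day it is closer to 31.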

Learn more about how Unpluq works. This interview has been edited for length and clarity.

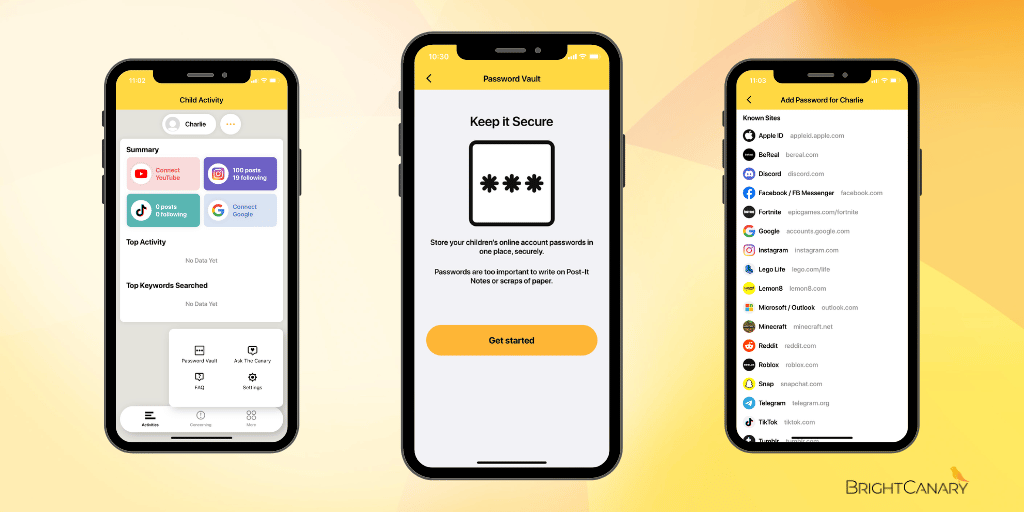

How do you manage your child’s passwords? Are they scattered on scraps of paper, saved on your kid’s phone, or reused on multiple sites? While these methods are convenient, they aren’t necessarily safe or secure. In the digital age, one of the most significant challenges parents face is ensuring their kids stay safe online. The best password manager for families is one that's convenient, easy to use, and secure. Here’s how a password manager can help you protect your child’s digital journey.

A password manager is a digital tool that securely stores passwords in one place, like a digital version of a paper password book. You can find password managers in web browsers, such as Safari and Google Chrome. They’re also available through online tools and apps, such as LastPass and the Password Vault located in the BrightCanary app.

Password managers store your passwords in an encrypted form, which ensures they’re only accessible to you. You only need to remember one master password to unlock your password vault, which gives you access to all your other passwords.

For example, parents who use BrightCanary set a code to unlock their Password Vault and access their child’s saved passwords. You can also use Apple Face ID to unlock the Password Vault, which makes reviewing and managing your child’s logins even easier.

Some password managers automatically save and fill passwords in the appropriate login fields. For example, Google Chrome suggests, saves, and auto-fills secure passwords when you’re logged into your Google account.

Other password managers, like BrightCanary’s Password Vault, simply store passwords in one place. It’s like an ultra-secure notebook — when your child creates a new account, just add their username and password to the Password Vault in the BrightCanary app.

Even if you feel like you have a good handle on your passwords, it’s helpful for parents to keep track of their child’s logins with a password manager. Here’s why:

A password manager definitely has its perks, but there are some downsides to consider before you start using one:

Password managers are safe if they encrypt your information, which means it’s coded in a way that makes it nearly impossible for anyone to access without the master password. For example, BrightCanary and 1Password use military-grade encryption to protect your data.
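To make the master password idea more concrete, here is a minimal Python sketch of one common building block: stretching a master password into an encryption key with PBKDF2. This is an illustration under our own assumptions, not a description of how BrightCanary, 1Password, or any other product works. The function name `derive_key` and the iteration count are hypothetical choices for this example, and a real password manager would hand the resulting key to a vetted encryption library rather than use it directly.

```python
import hashlib
import os

# Assumed work factor for this sketch; real products choose their own settings.
ITERATIONS = 200_000


def derive_key(master_password: str, salt: bytes) -> bytes:
    """Stretch a master password into a 32-byte key using PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256",
        master_password.encode("utf-8"),
        salt,
        ITERATIONS,
    )


if __name__ == "__main__":
    salt = os.urandom(16)  # random salt, stored alongside the encrypted vault
    key = derive_key("correct horse battery staple", salt)
    same_key = derive_key("correct horse battery staple", salt)
    wrong_key = derive_key("a wrong guess", salt)

    print("Same password gives the same key:", key == same_key)
    print("Wrong password gives the same key:", key == wrong_key)
```

The design point is that the vault key is never stored anywhere: it is recomputed from the master password each time, and the deliberately slow derivation makes guessing passwords expensive for an attacker.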

Why would you use a password manager instead of just writing your passwords down on a piece of paper? Physical records are susceptible to being lost or stolen, especially if you travel with your password book. If you were to lose your phone, someone would need to know your master password in order to unlock your vault; in comparison, a scrap of paper is easy to misplace and can be read by anyone who finds it.

It’s also much harder to manage and update a paper list as compared to a digital Password Vault. Whether it’s on your phone or on your desktop, a password manager allows you to review, update, and add or remove passwords with just a few taps.

You may be familiar with password managers such as 1Password and LastPass. These tools are strong, secure, and offer robust features like auto-filling passwords and even storing credit card information.

But when it comes to the best password managers for families, BrightCanary’s Password Vault tops the list because it combines the safety and security of a password manager with the robust online monitoring features you expect from BrightCanary.

With BrightCanary, you can monitor your child’s online activity on YouTube, Google, Instagram, and TikTok. The Password Vault is located directly within the BrightCanary app, which means you can log your child’s passwords and review what they’re watching and searching for — all in one place.

Your child’s login information is always protected, encrypted, and anonymized. Plus, the Password Vault is as simple as it is secure: just set your master passcode, add your child’s login information for popular platforms such as Discord and Roblox, and go.

It’s pretty normal to have a variety of online accounts. That means password management is a necessity — but keeping track of your passwords and your child’s can be a hassle. That’s one of the reasons BrightCanary’s Password Vault is so convenient: you can keep your child’s passwords in the same app you use to monitor their online activity. Nothing beats being prepared, and that includes keeping tabs on your child’s online safety. To start using the Password Vault today, download BrightCanary in the App Store.