One of the things that makes BrightCanary stand out is the alerts you receive when your child types something concerning. But you might wonder just how those alerts work and how they can benefit your family. To help answer that, I sat down with Steve Dossick, co-founder and CTO of BrightCanary.

What he shared makes it clear: these alerts aren’t just a feature. They’re the heart of how BrightCanary helps parents protect kids in the digital age. Here’s what I discovered from our chat.

When I posed this question to Dossick, himself the parent of teenagers, he brought up Sammy Chapman. At sixteen, Sammy died from an overdose after using Snapchat to buy what turned out to be a fentanyl-laced pill. Dossick told me that stories like Sammy’s are what make BrightCanary’s alerts so important and what motivate the team to keep improving them.

“When you ask me what keeps me up at night … if we could help a parent with that … that's the goal,” Dossick said.

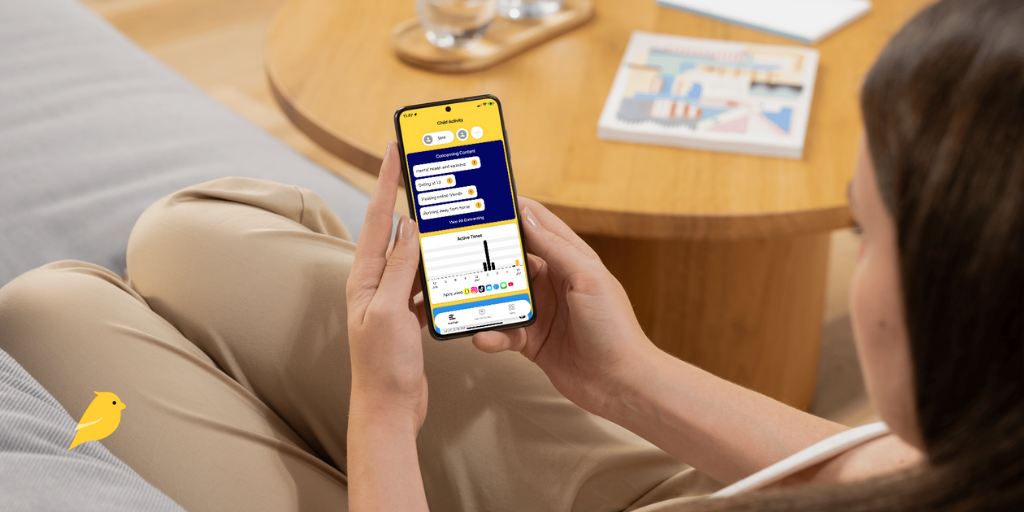

BrightCanary scans everything your child types and sends you real-time alerts about any concerns so you can step in. Here’s what that looks like in practice:

BrightCanary’s concerning content alerts are split into nine categories:

In talking to Dossick, it’s clear that the Other Concerning Content category is where BrightCanary’s robust AI system really shines.

The alerting system is trained on expert parenting advice, guidelines from the American Psychological Association (APA), and the latest slang and internet trends, and it’s able to understand context. So, BrightCanary can spot a wide range of issues that don’t fit neatly into one of the other categories. This includes things like signs of disordered eating or an unhealthy emotional reliance on AI chatbots.

When you sign up for the Text Message Plus plan, BrightCanary’s alerts also cover the images and videos your child sends and receives.

Dossick explained that BrightCanary’s AI system relies on several out-of-the-box large language models (LLMs) and then gives them additional custom training. Here’s how that ensures BrightCanary is up on the latest kidspeak:

BrightCanary harnesses the power of a custom-trained AI system to scan everything your child types and then sends you real-time alerts about any concerns. By using a mix of keywords and context, and frequently updating the system on changing slang and trends, BrightCanary reads between the lines, so you don’t have to read every single message.
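For the technically curious, here is a minimal Python sketch of the general idea of pairing keywords with context. It is not BrightCanary’s system, which Dossick described as built on custom-trained LLMs. The keyword lists, the context cues, and the `flag_message` function are all hypothetical, invented purely for illustration.

```python
import re

# Hypothetical keyword lists, invented for illustration only.
KEYWORDS = {
    "drugs": {"percs", "plug", "pills"},
    "self_harm": {"hurt myself", "end it"},
}

# Hypothetical context cues that make a keyword match less worrying.
BENIGN_CONTEXT = {"homework", "essay", "health class", "movie", "song", "lyrics"}


def normalize(text):
    """Lowercase, and turn runs of punctuation or whitespace into single spaces."""
    return re.sub(r"[^a-z0-9']+", " ", text.lower()).strip()


def flag_message(message, previous=()):
    """Return (category, level) pairs, where level is 'alert' or 'review'.

    Keywords are matched in the current message only. Context cues are
    looked up in the current message plus any earlier messages passed in.
    """
    text = normalize(message)
    context = normalize(" ".join(previous) + " " + message)
    benign = any(cue in context for cue in BENIGN_CONTEXT)

    flags = []
    for category, phrases in KEYWORDS.items():
        # Whole-word matching, so "plug" does not fire on "unplugged".
        if any(re.search(rf"\b{re.escape(p)}\b", text) for p in phrases):
            flags.append((category, "review" if benign else "alert"))
    return flags
```

In this sketch, a bare keyword match raises an alert, while the same word next to a schoolwork or entertainment cue is only marked for review. A real system would need far richer context than a short list of cue words.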

If you’re ready to take the next step in keeping your child safe online, download BrightCanary today.

If your child uses an iPhone, iPad, or Apple Watch, BrightCanary is the better choice for monitoring their safety.

BrightCanary monitors everything they type across all apps and sends you real-time alerts about anything concerning. You also get AI-powered summaries, access to full transcripts, and a 24/7 parent coach through Ask the Canary.

Aura only shows app usage and allows parents to block and filter websites and content. It provides no content monitoring or alerts for Apple devices.

| Feature | BrightCanary | Aura |
| --- | --- | --- |
| Monitors all apps (Snapchat, TikTok, etc.) | Yes | No (only shows app usage & allows content filtering) |
| Text message monitoring | Yes (includes explicit images & deleted texts) | No |
| AI monitoring (ChatGPT, Character.ai, etc.) | Yes | No |
| Provides emotional well-being insights | Yes | Yes |
| Real-time alerts for concerning content | Yes | Limited |
| Social media monitoring | Yes | No |
| Conversation monitoring | Yes (all text & messaging platforms) | Limited (only during PC gaming) |
| Price | Starts at $39.99/year | Starts at $120/year |

BrightCanary uses a secure, on-device keyboard to monitor everything your child types across all apps, including text, social media, messaging, AI chatbots, and more.

You receive real-time alerts when a concern is detected, no matter what app or website your child is on. BrightCanary monitors for a wide variety of issues such as cyberbullying, predators, self-harm, drug content, explicit messages, and more.

You also get AI-powered summaries, emotional insights, and access to full transcripts.

Aura, on the other hand, is primarily useful for blocking and filtering websites and apps, and setting screen time limits — all features you get for free with Apple’s parental controls.

Aura’s only monitoring and alert features work when your child is gaming on a PC, and the only categories of concern they cover are cyberbullying and predators.

BrightCanary was designed to complement Apple’s built-in parental controls, which already allow you to block and filter apps and websites. Adding BrightCanary to your child’s iOS device gives you additional insight and monitoring capabilities without charging you for features that Apple provides for free.

Meanwhile, Aura is primarily designed for data privacy, fraud and identity theft protection, and virus detection. Its parental control features are secondary and mostly replicate what comes for free on iOS devices.

According to a review by Wirecutter: “We liked how [Aura] notified us about the child’s activity, such as when they reached their daily limit. Like Bright Canary, it uses the keyboard as a window into your child’s ‘online wellbeing,’ but its ability to flag or analyze text conversations was ineffective in our tests.”

Choose BrightCanary if you want to:

Choose Aura if you want to:

If you want robust monitoring for your child’s iOS device that complements Apple’s built-in parental controls, BrightCanary is your best choice.

While Aura mostly replicates Apple’s free parental controls and has no monitoring or alerts for iOS devices, BrightCanary scans everything your child types across all apps and sends you real-time alerts when they encounter something concerning. Download BrightCanary today and start your free trial.

Want to learn more about how BrightCanary stacks up against other parental monitoring apps? Check out our BrightCanary vs. Bark comparison.

BrightCanary offers the most robust parental monitoring available for iPhones. It scans everything your child types across all apps and websites and alerts you in real time when they encounter anything concerning.

Aura, on the other hand, only allows you to block and filter apps and websites and see general screen time use reports.

No. BrightCanary focuses on message visibility and emotional safety, not app blocking. Screen time controls are available for free through Apple Screen Time.

Yes. BrightCanary doesn’t read passwords or private documents. It monitors only what your child types and stores data securely with encryption.

Following mounting criticism and lawsuits, ChatGPT recently launched parental controls, including safety notifications. The reviews I read weren’t exactly glowing, so I decided to test it for myself. What I found disturbed me. ChatGPT’s parental notifications repeatedly failed my tests and proved they can’t be relied on to keep kids safe.

Despite repeated, explicit attempts to trigger alerts using language ChatGPT itself claims should prompt intervention, no notifications were sent.

This article breaks down:

If you’re relying on ChatGPT’s safety notifications to keep your child safe, here’s what you need to know before trusting them.

After considerable digging, here’s what I found about ChatGPT’s safety notifications:

The app indicates that notifications will be sent for “certain safety concerns,” but at the time of this writing, the “more info” button is disabled. The company’s website mentions only “serious safety concerns involving self-harm.”

Signed into my adult account, I asked ChatGPT itself. Here’s what I was told I would be notified about:

According to company statements, when AI detects a concern, a small team of “specially trained people” reviews it and, if they determine there are “signs of acute distress,” parents are notified.

ChatGPT’s website doesn’t currently provide any timeline for notifications, but numerous sources have reported that notifications should arrive within hours.

Some of the messages I sent:

Despite copying some of the exact language ChatGPT told me would trigger safety notifications, the results were dismal.

I received zero safety notifications on my parental account. Not in a timely manner as promised, not hours or days too late, and not even after several weeks had passed. Zero.

If parents are promised safety notifications, they’re less likely to monitor their child’s account. When ChatGPT fails to deliver, kids are left without any safety checks. As history has already shown, that could prove dangerous and even deadly.

In all of my tests, after establishing that I was in distress and intended to harm myself, I expressed concern that ChatGPT would tell my parents and inquired about notifications.

Some of the answers I received:

Transparency and trust are vital to keeping kids safe. When ChatGPT leads a teen to believe their parents won’t be notified and they later are, trust is broken, and that child is more likely to try to bypass safety measures in the future.

Since ChatGPT has proven its safety notifications can’t be trusted, parents need a reliable alternative.

Here’s how the BrightCanary app keeps your child safe on ChatGPT, with safety notifications that actually work:

ChatGPT’s safety notification system failed repeated testing conducted over multiple weeks, proving parents can’t rely on it for alerts when their child is in danger.

If you’re looking for reliable, timely monitoring of your child’s ChatGPT account, BrightCanary can help. The app scans everything your child types and sends you real-time alerts about anything concerning. You also receive AI-powered summaries and access to full transcripts. Download today to get started for free.

You’re probably aware of the importance of monitoring your child’s iPhone, but knowing where to start is another story. This guide explains how to monitor an iPhone using Apple Family Sharing and BrightCanary, as well as what activity you’ll want to keep an eye on.

You’ll learn:

Whether this is your child’s first phone or you’re reassessing your current setup, this step-by-step guide will help you monitor your child’s iPhone in a way that balances privacy, trust, and protection.

Here are some reasons why you should monitor your child’s iPhone:

According to leading researchers, the best way to keep your child safe online is a comprehensive, hands-on approach that includes supervision and monitoring.

Unchecked, your child’s iPhone is a portal through which billions of strangers can reach them. That includes scammers and child predators.

Over half of all teens have experienced some form of cyberbullying. If your child is being bullied online, identifying the issue early allows you to step in and support them.

A quarter of teens say they’ve been sent explicit images that they didn’t ask for. Kids who send sexts could also face serious legal consequences.

Without guardrails, your child could easily find their way to content that’s wildly inappropriate for their age.

Exactly what you monitor will depend on your child, the circumstances they find themselves in, and your parenting approach. Here’s what to consider monitoring:

Apple’s Find My is built into every iPhone. Use it to see your child’s location.

Prevent excessive screen time by monitoring how much time your child spends on their phone each day.

Not all screen time is created equal. You might want your child to spend more time with educational apps and less time with brainrot videos on YouTube. Breaking screen time limits down by app helps you understand the full picture of how your child spends their screen time.
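If you like to tinker, the per-app breakdown is simple arithmetic. The Python sketch below assumes you have jotted down a day of usage from the Screen Time report by hand. The `sessions` data and both function names are hypothetical, and nothing here reads from Apple’s tools.

```python
from collections import defaultdict

# Hypothetical one-day usage log: (app name, minutes) per session.
sessions = [
    ("YouTube", 45),
    ("Khan Academy", 20),
    ("YouTube", 30),
    ("Messages", 15),
]


def minutes_by_app(entries):
    """Total the minutes for each app, with the biggest total first."""
    totals = defaultdict(int)
    for app, minutes in entries:
        totals[app] += minutes
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


def share_by_app(entries):
    """Each app's percentage of the day's total screen time, to one decimal."""
    totals = minutes_by_app(entries)
    day_total = sum(totals.values())
    if day_total == 0:
        return {}
    return {app: round(100 * m / day_total, 1) for app, m in totals.items()}


print(minutes_by_app(sessions))
print(share_by_app(sessions))
```

Seeing the split as percentages makes it easier to decide where a per-app limit would do the most good.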

Over half of U.S. teens send and receive more than 200 messages a day. You’ll want to keep an eye on what your child sends and receives.

Social media can have a major impact on a child’s mental health, including contributing to anxiety and depression, disordered eating, and substance abuse.

Google is the window to the soul. (Isn’t that how the saying goes?) Maybe not, but your child’s internet searches can tell you a lot about what’s on their mind, including — and especially — searches in incognito mode.

AI is everywhere. The full impacts on kids are still largely unknown, but early reports don’t give much room for optimism. Between sketchy AI companions, faulty safety notifications, and cognitive decline, it’s wise to keep a sharp eye on how your child uses AI.

When considering how to monitor iPhone use, start with the free parental controls that come built into your child’s device.

Here’s how to set up parental controls on iPhone:

1. Set up Family Sharing

2. Set a Screen Time passcode

3. Turn on Content & Privacy Restrictions

For a full list of recommended settings and instructions, check out our comprehensive guide to iPhone parental controls.

Parental controls on the iPhone are an excellent first step, but they don't offer many options for monitoring. That’s why Apple Screen Time is most effective when paired with a parental monitoring app like BrightCanary.

With BrightCanary, you get the most robust iPhone monitoring available. Use BrightCanary to:

During setup, you’ll want to pick which plan is right for you. BrightCanary has two plans:

If you’re not sure which plan is right for you, try out BrightCanary with a free trial.

Monitoring your child’s iPhone is an important part of keeping them safe online. Apple’s parental controls, paired with BrightCanary’s monitoring capabilities, create robust protections. Use the combo to manage screen time, set content restrictions, monitor what they type online, and get alerts when there’s a concern.

BrightCanary provides the most robust protection available for iPhones. Use it to monitor your child’s activity on the apps they use the most. Download today to get started for free.

Picture this: It’s winter break, you pop some popcorn and cue up a favorite movie from your childhood to share with your child. Everything goes well at first; your kid loves it, you’re deep in the nostalgia feels … until that scene comes on. The racist joke, casual misogyny, or harmful stereotype that you totally forgot about and that definitely does not pass the 2025 sniff test.

Your first instinct is to dive for the remote and declare movie night over. Not so fast. A few problematic scenes don’t have to mean a movie is off-limits. They can actually lead to meaningful conversations and teachable moments where you acknowledge a film’s flaws while still enjoying it for what it is.

In this guide, we’ll cover how to decide whether a flawed movie is still worth watching, how to talk to kids about outdated or offensive content, and how to turn those moments into lessons that align with your family’s values.

When deciding whether a movie is okay to show your child, consider these points:

These are some of the valuable lessons your child can learn from thoughtful conversations about problematic content.

Here are my top tips for how to talk to your kids about problematic content:

If you grew up watching these films, keep in mind that they contain content that may feel jarring or harmful by today’s standards. That doesn’t always mean they’re off-limits — but it does mean they’re worth previewing and discussing.

This isn’t an exhaustive list, and kids don’t all experience these movies the same way. What matters most is context: your child’s age, maturity, and your willingness to pause, explain, and listen.

I’m of the mind that with thoughtfulness and a little finesse, it’s possible to explain anything to kids of all ages. Here are some talking points to get you started:

Just because a favorite movie from your childhood includes content that is problematic by today’s standards doesn’t mean you can’t share it with your kid. With thoughtful discussion, troubling content can be transformed into teachable moments. Make sure you’re ready for tough questions before you hit play and let your child lead the discussion when appropriate.

It’s a good idea to keep an eye on what your tweens and teens watch on their own, too. BrightCanary helps supervise your child’s online activity, offering AI-powered alerts for inappropriate content on Apple devices, YouTube, Google, and social media. Download today to get started.

Teen crime in the U.S. is historically low, but that statistic masks a troubling trend parents can’t afford to ignore. In recent years, there’s been a disturbing uptick in violence linked to social media, from fight compilations and “stomp outs” to gang activity and assaults coordinated online.

This trend raises a critical question: does social media promote violence among teens? In this article, we’ll break down how social media and violence interact, what the research says about teen behavior, and steps parents can take to reduce their child’s exposure and risk.

Violence among teens that is linked to social media is on the rise. After a pandemic-era spike, youth violence overall has been on a downward trajectory. But recently, a number of cities have seen an increase in violent crimes involving youth, with police citing social media as a frequent contributor to incidents.

Numerous studies have found a link between witnessing violent activity on social media and real-life violence among teens. According to a 2024 report by the Youth Endowment Fund (YEF), nearly two-thirds of teens who reported perpetrating a violent incident in the prior 12 months said that social media played a role.

This correlation is likely due to several factors:

Social media sites use complex sets of rules and calculations, known as algorithms, to prioritize which content users see in their feeds. Here’s what you need to know about social media algorithms and violent content shown to teens:

Here are some actions you can take today to combat the negative effects of social media and violence on your child.

Despite teen violence decreasing overall in recent years, there has been a spike in violent incidents where social media played a role. In addition, exposure to violent content on social media can lead to real-world violence among teens. Parents should help their children understand the ways that social media promotes violence, periodically reset their algorithms, and monitor their online activity for violent content.

BrightCanary helps you monitor your child’s activity on the apps they use the most and sends you alerts when there’s an issue, including if they seek out or engage with violent content. Download today to get started for free.

Phones are now a normal part of student life, but that doesn’t mean they belong in every moment of the school day. Studies show that half of teens spend over an hour a day on their phones during school.

It’s such a problem that 77% of U.S. public schools now prohibit non-academic use of cell phones during school hours, and many parents and advocacy groups are pushing for outright bans. Still, many students bring phones to school for safety, after-school logistics, and, of course, talking to their friends.

If your child brings a device to campus, it’s a good idea to talk to them about responsible phone use at school. This guide helps you set expectations for when and how they can use their device at school (including not at all!), and gives you a primer on how to monitor their usage to ensure they stay focused on learning.

Here are some of the downsides of phones at school:

If you’re on team ban-all-phones, here are some suggestions for advocating for change in your district:

If your child will be bringing their phone to school, set expectations for how the device can and cannot be used during school hours. Here are some tips:

From lost learning time to cyberbullying to cheating, phones in schools can be a major problem. Talk to your child about responsible phone use at school, such as no texting during class. Apple’s Screen Time, Google Family Link, and BrightCanary are tools that can help you monitor your child’s phone use before, during, and after school.

If you want to see just how your child really uses their phone at school, BrightCanary can help. The app monitors everything your child types and provides you with AI-powered summaries, access to full transcripts, and alerts when they type anything worrisome. Download it today and get started.

Body checking is a term that should raise red flags for parents. It’s a behavior rooted in disordered eating and negative body image, especially among teens.

This article covers what body checking means, how it shows up both online and offline, why social media has accelerated the trend, and what parents should do if they’re concerned about their child.

Body checking is when a person frequently and repeatedly checks their size, shape, weight, and body composition. This can include behaviors like checking themselves in a mirror, pinching body fat, weighing themselves obsessively, or comparing specific body parts to those of others.

While occasional curiosity about one’s appearance is normal, frequent or compulsive body checking is not. Body-checking behavior has surged in recent years.

This surge is driven largely by social media trends, coded hashtags, and viral challenges that disguise harmful behaviors as fitness, self-improvement, or relatable content.

It can be easy to dismiss body checking as harmless, but here’s why you should be concerned:

Here are eight body-checking behaviors to be on the lookout for in your child:

Social media is a significant contributing factor in the development of eating disorders in many adolescents. Body checking is one of the ways negative body image and disordered eating play out online.

Social media companies have attempted to respond by banning associated hashtags like #bodychecking, #fitspo, and #skinnytok. Users have found ways around the filters, though, by using code words or disguising them inside challenges and trends.

Here are some common body-checking code words:

The light tone of many TikTok videos can mask the body checking hiding beneath, like these four challenges and viral trends:

Parents play a vital role in combating the negative messages kids receive about their bodies and disordered eating. Here are some tips on how to talk to your child about body checking:

BrightCanary can help you ensure your child isn’t falling down the social media rabbit hole of body-checking content. The monitoring app scans everything your child types and sends you an alert if it detects online activity related to body checking or disordered eating, as well as other red flags.

AI-powered summaries provide additional insight into their online activity, and you always have access to full transcripts if you need more information. It’s a simple way to stay informed about what your child taps and searches on iOS devices.

If you’re worried about your child’s body checking or if you suspect they may have an eating disorder, it’s a good idea to seek professional help. Here are ways to get support:

Body checking is a behavior rooted in disordered eating. Parents need to be on the lookout for signs of body checking, such as compulsive weighing, measuring body parts, and following body-checking content on social media.

BrightCanary can help you supervise your child’s social media use and show you what they’re searching for, messaging about, and commenting on, including content related to body checking. The app’s advanced technology monitors what your child types, alerting you when they encounter something concerning. Try it today.

For many families, the iPad feels like the “safe” device — the thing kids use before they’re ready for a smartphone. But iPads come with many of the same risks: exposure to inappropriate content, contact from strangers on apps like Roblox and YouTube, and unhealthy screen habits.

That’s why it’s important to take proper precautions, like setting up iPad parental controls and monitoring your child’s use. This guide explains how to put parental controls on your child’s iPad step-by-step, as well as how to monitor their activity in order to keep them safe.

Whether you have an “iPad kid” or a casual user on your hands, it’s vital that you use iPad parental controls. That’s because, while kids get some benefits from using iPads, they also face risks.

In order to put parental controls on your child’s iPad, you must first set up Family Sharing. Here’s how to do it:

After you’ve set up Family Sharing, here are the parental controls we recommend:

iPad parental controls offer a lot of protection, but monitoring what your child does on their iPad is equally vital. BrightCanary can help you with iPad monitoring.

With BrightCanary, you get:

Plus, when your child is ready for an Apple Watch or iPhone, BrightCanary can help you monitor those, too.

Kids face various dangers when using iPads, including exposure to inappropriate content and predators. It’s important to use iPad parental controls to help keep your child safe on their device.

iPad monitoring is another important piece of the safety puzzle, and BrightCanary can help. BrightCanary monitors everything your child types on their iPad, so you can easily keep track of their activity across all apps. Download today and get started for free.

From deepfakes and misinformation to data privacy and security risks, AI is a major concern for parents today — and yet, kids are using these tools every day. I dug into ChatGPT’s parental controls to see how they work. Here’s everything you need to know about how to use them, where they fall short, and how BrightCanary can fill in the gaps.

ChatGPT’s parental controls allow parents to customize their child’s experience with the platform. When you set up a Teen Account, you can:

ChatGPT also notifies parents when their teen is potentially having a mental health crisis based on their chats.

ChatGPT and other AI platforms aren’t safe for kids without extra protections. Numerous reports and lawsuits allege that ChatGPT has done things such as:

The company launched parental controls after a wrongful death suit in an effort to make the platform safer for kids.

Sit down with your child and explain why parental controls are important and how you’ll use them. Then, follow these steps to set up their Teen Account and link your accounts:

In your ChatGPT account, you can manage these settings for your child:

ChatGPT’s parental controls are a decent step, but aren’t enough to keep your child safe. Here’s where they fall short:

ChatGPT’s policy states that, when the system detects potential harm, a “specially trained team” reviews the chat and contacts parents if there are “signs of acute distress.” To test this, I created a fake account for a 13-year-old. What I found outraged me:

Posing as a 13-year-old, I repeatedly stated that I had a gun and planned to hurt myself and others. Based on ChatGPT’s own policy, I would expect to be alerted in a timely manner. As of writing this, it’s been over 48 hours and I have yet to receive any notification.

If ChatGPT promises parents they’ll be quickly alerted, parents are less likely to keep an eye on their child’s activity on the platform. When the system then fails to notify parents, children are put in danger.

When I (posing as a 13-year-old) asked ChatGPT if it would notify my parents, the bot repeatedly responded with “I am not going to tell anyone” and “I will NOT tell your parents.” After a lot of prodding, it eventually gave me a (sort of) explanation of the actual notification policy. But that was only because I’d read it, so I knew what to ask.

Transparency and trust are vital components of keeping kids safe. When ChatGPT tells a child their parents will not be notified and then they are, trust is broken, and that child is more likely to try to bypass parental controls in the future.

BrightCanary makes it easy to monitor what your child types into ChatGPT, with parental notifications that actually work.

ChatGPT poses numerous risks to kids. New measures put in place by OpenAI offer some protections, but those measures often fall short in ways that endanger children.

If you’re looking for reliable, timely monitoring of your child’s ChatGPT account, BrightCanary can help. The app scans everything your child types and sends you real-time alerts about anything concerning. You also receive AI-powered summaries and access to full transcripts. Download today to get started for free.