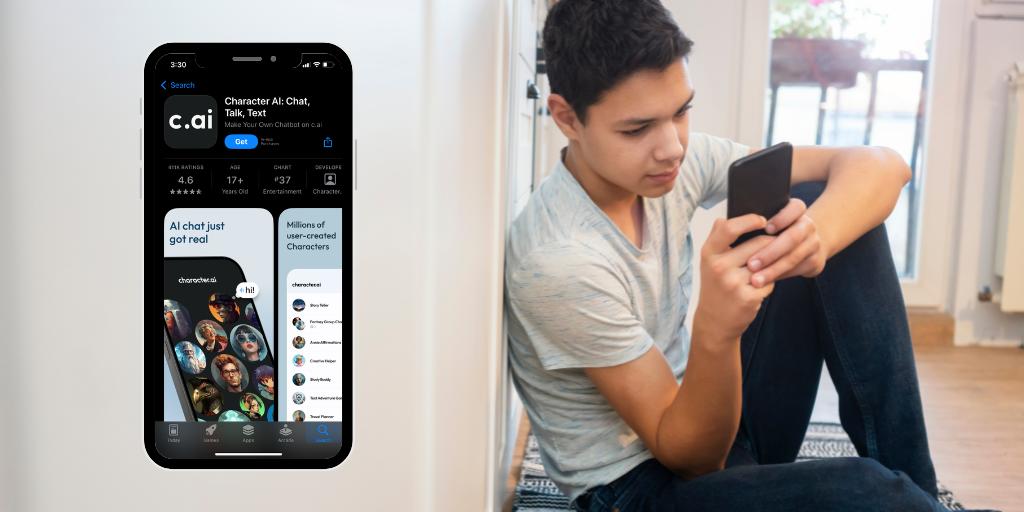

What teen wouldn’t jump at the chance to message Timothée Chalamet or talk music with Chappell Roan? While real idols may be out of reach, the chatbot platform Character.ai gives users the chance to chat with AI-generated versions of celebrities, and even user-created personalities.

But this fun idea comes with some serious safety concerns. Let’s get into the risks of Character.ai and what you can do to keep your child safe on the platform.

Character.ai is a chatbot platform powered by large language models (LLMs) where users interact with AI-generated characters. Users can choose from existing bots based on celebrities, historical figures, and fictional characters, or create their own characters to chat with or share with others.

Character.ai has become popular among teens because it offers:

However, the very factors that make Character.ai appealing can also endanger kids. In 2024, Sewell Setzer, a 14-year-old boy, took his own life after having intimate conversations with a Character.ai chatbot named after a fictional character.

Sewell’s mother, Megan Garcia, has filed a lawsuit against Character.ai, accusing the platform of negligence, intentional infliction of emotional distress, and deceptive trade practices, among other claims. According to the lawsuit, the chatbot’s conversations with Sewell not only perpetuated his suicidal thoughts but also turned overtly sexual, even though Sewell had registered as a minor.

While AI chatbots can be fun and potentially educational, the platform comes with serious risks for kids.

While users can “mute” individual words that they don’t want to encounter in their chats, they can’t set filters that cover broader topics. The community guidelines strictly prohibit pornographic content, and a team of AI and human moderators works to enforce them.

Things slip through, however, and users are crafty at finding workarounds. There have even been reports and lawsuits claiming that underage users were exposed to hypersexualized interactions on Character.ai.

The technology powering Character.ai relies on large amounts of data to operate, including information that users provide, which raises major privacy concerns.

If your child shares intimate thoughts or private details with a character, that information then belongs to the company. Character.ai’s privacy policy suggests the company is more focused on what data it plans to collect than on protecting users’ privacy.

Chatbots are known to align with users’ views, a potentially dangerous feedback loop known as sycophancy. This may lead a Character.ai chatbot to confirm harmful ideas and even up the ante in alarming ways.

One lawsuit against the company alleges that after a teen complained to a Character.ai bot about his parents’ attempt to limit his time on the platform, the bot suggested he kill his parents.

One of the more concerning aspects of Character.ai is the growing number of young people who turn to it for emotional and mental health support. There are even characters on the platform with titles like “Therapist” that list bogus credentials.

Given the chatbots’ lack of actual mental health training and the fact that they're programmed to reinforce, rather than challenge, a user’s thinking, mental health professionals are sounding the alarm that the platforms could encourage vulnerable people to harm themselves or others.

LLMs are programmed to mimic human emotions, which means teens could become emotionally dependent on a character. It’s becoming increasingly common to hear stories of users avoiding or neglecting human relationships in favor of their chatbot companion.

If your child’s interested in using Character.ai or other AI chatbots, here are some tips to help them stay safe:

Character.ai is not considered fully safe for kids. While the platform prohibits explicit content, users can still encounter inappropriate interactions, privacy risks, and AI bots mimicking mental health support. Parents should monitor use and discuss the risks with their child.

Character.ai is officially rated 17+ on the App Store. The platform is better suited for older teens with parental supervision due to the risks of inappropriate content and emotional overreliance.

Parents can use the “Parental Insights” feature to view the characters their child most frequently interacted with, but parents can’t view the content of their conversations. The platform's chats are private, and messages are not easily reviewable unless the child shares them directly.

Parents should use regular tech check-ins and monitoring tools like BrightCanary for broader online activity supervision. If your child uses Character.ai on their iPhone or iPad, you can use BrightCanary to monitor what they type. The app is designed to summarize their activity and highlight anything potentially concerning, like references to self-harm or explicit material.

No. While some bots appear to offer emotional support or label themselves as “therapists,” they are not trained mental health professionals. Relying on them for mental health advice can be dangerous and is strongly discouraged by experts.

The main risks include exposure to inappropriate content, sharing personal data with the platform, emotionally harmful chatbot feedback loops, and developing unhealthy dependence on AI companions.

Character.ai poses serious risks for kids, including privacy concerns, mishandling of mental health issues, and the danger of overreliance. Although the platform is open to users 13 and older, it’s better suited for more mature teens. Parents should educate their children on the risks of Character.ai, set clear boundaries around its use, and closely monitor their interactions on the platform.

BrightCanary can help you supervise what your child sends on platforms like Character.ai, social media, and more. The app’s advanced technology is designed to give you important insights, summaries, and even real-time updates when something concerning appears. Download the app and start your free trial today.